Pairing Sensing with AI for Efficient ADAS

Sensors and sensing capabilities are common, and expanding, in modern vehicles. One of the major motivators for this is safety or, more specifically, the Euro NCAP Vision Zero guidelines. These guidelines are shaping automakers’ and Tier 1 suppliers’ ADAS/AV roadmaps, which call for more powerful scene understanding. Among other things, extending the scope of protection for vulnerable road users (VRUs) is driving up sensor variety, resolution and quality and, as a result, imposes increasingly high vision processing requirements. Meeting this need for higher processing throughput within the power and space limitations of the vehicle is now possible thanks to a new generation of highly power-efficient AI processors.

The Forward-Facing Camera

Let’s start at SAE Level 2 with the single-sensor forward-facing camera (FFC). The ability of an intelligent FFC to offer VRU protection depends on both its sensor and its AI processing capabilities. Vehicle safety requires a high-resolution sensor that can capture smaller objects, such as pedestrians, animals and road signs, while the vehicle is in motion. High resolution is also a prerequisite for safety at higher driving speeds, which require longer braking distances.

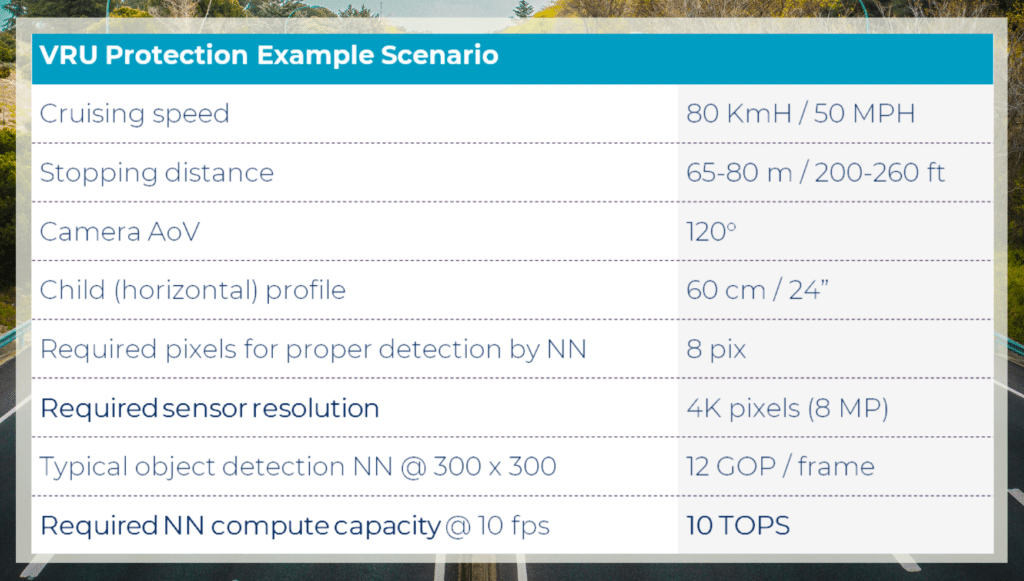

High resolutions place greater demands on the camera’s AI processing. To put this in practical terms: a car travelling at 80 km/h (50 mph) needs to be able to detect a child crossing the road. Let’s assume the child’s horizontal profile is 60 cm (24 inches). A typical object detection neural network needs at least 8 pixels across the child to detect and identify it reliably. At that speed, the car also needs a safe stopping distance of 65-80 m (200-260 ft), which dictates the distance at which the child must be detected. This requires a 4K camera (8 MP resolution). A typical detection network processes 300×300-pixel inputs at about 12 GOPs (giga operations) per input, so covering a full 4K frame takes roughly 92 such inputs (3840×2160 pixels divided by 300×300 pixels). Assuming a 10 fps input, the task would therefore require on the order of 10 TOPS of AI processing performance.
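The numbers in the child-crossing example can be checked with a short calculation. The sketch below is a rough approximation, not a sensor-sizing tool: the 120° horizontal field of view is an assumption (the text does not state one), pixels are treated as evenly spread across that field of view, and the compute estimate uses the pixel-area ratio with no tile overlap, so it is a lower bound. The function names are hypothetical.

```python
import math


def required_horizontal_pixels(object_width_m: float, distance_m: float,
                               min_pixels_on_object: int, hfov_deg: float) -> int:
    """Horizontal pixel count needed so the object spans min_pixels_on_object pixels.

    Assumes pixels are spread evenly (equiangularly) across the horizontal
    field of view, which is an approximation for real lenses.
    """
    object_angle_rad = 2 * math.atan(object_width_m / (2 * distance_m))
    rad_per_pixel = object_angle_rad / min_pixels_on_object
    return math.ceil(math.radians(hfov_deg) / rad_per_pixel)


def required_tops(frame_w: int, frame_h: int, tile_w: int, tile_h: int,
                  gops_per_tile: float, fps: float) -> float:
    """Sustained TOPS needed to run the network over every frame, tile by tile.

    Uses the pixel-area ratio (no tile overlap, no partial tiles), so real
    tiling schemes would need somewhat more.
    """
    tiles_per_frame = (frame_w * frame_h) / (tile_w * tile_h)
    ops_per_second = tiles_per_frame * gops_per_tile * 1e9 * fps
    return ops_per_second / 1e12


# Child example: 60 cm wide, detected at 80 m, 8 pixels needed; 120° FOV is an assumption.
px = required_horizontal_pixels(0.6, 80.0, 8, hfov_deg=120.0)
# 4K frame, 300x300 network input at 12 GOPs per input, 10 fps.
tops = required_tops(3840, 2160, 300, 300, gops_per_tile=12, fps=10)
print(px, round(tops, 2))
```

Under the assumed 120° field of view, the pixel requirement comes out a little over 2,200 columns, more than the 1,920 of a 1080p sensor, which is why the next common step, 4K, is needed; a narrower field of view would relax this. The compute estimate works out to about 92 inputs per frame and roughly 11 TOPS, consistent with the "about 10 TOPS" figure.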

Limiting the system to a lower resolution would shorten the distance at which the child can be reliably detected, so the vehicle could only stop in time at lower speeds and would not be able to handle highway speeds safely. If the ADAS/AV system is to support real-world highway speeds, sensor resolution and the required processing throughput will have to increase.
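The link between speed, stopping distance and resolution can be sketched numerically. All three parameters below are hypothetical assumptions chosen only for illustration: a 1.5 s delay before braking starts, a constant 6 m/s² deceleration, and a 120° horizontal field of view. The pixel estimate uses the small-angle approximation, so the required pixel count grows linearly with detection distance.

```python
import math

# Hypothetical parameters: none of these values come from the article.
REACTION_S = 1.5       # assumed time before braking starts (s)
DECEL_MPS2 = 6.0       # assumed constant braking deceleration (m/s^2)
HFOV_DEG = 120.0       # assumed horizontal field of view of the camera (deg)
# Values taken from the child-crossing example in the text.
CHILD_WIDTH_M = 0.6
MIN_PIXELS = 8


def stopping_distance_m(speed_kmh: float) -> float:
    """Reaction distance plus braking distance v^2 / (2a) on a flat road."""
    v = speed_kmh / 3.6  # km/h -> m/s
    return v * REACTION_S + v * v / (2 * DECEL_MPS2)


def horizontal_pixels(distance_m: float) -> float:
    """Small-angle estimate of horizontal pixels needed to put MIN_PIXELS on the child."""
    rad_per_pixel = (CHILD_WIDTH_M / distance_m) / MIN_PIXELS
    return math.radians(HFOV_DEG) / rad_per_pixel


for speed in (80, 130):
    d = stopping_distance_m(speed)
    print(f"{speed} km/h: stop within {d:.0f} m, needs {horizontal_pixels(d):.0f} horizontal pixels")
```

With these assumptions, 80 km/h gives a stopping distance of roughly 74 m (inside the 65-80 m range quoted above) and a little over 2,000 horizontal pixels, while 130 km/h gives about 163 m and more than 4,500 pixels, beyond the 3,840 columns of a 4K sensor.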

Moreover, real-world driving scenarios are usually far more complex than a single child crossing the street. Driving in urban environments, through intersections or on congested freeways requires camera systems to cope with more complex scenes. The processing workloads are heavier because of the many objects, both stationary and moving in different directions, that need to be detected and identified. This requires higher performance while maintaining the same low latency.

Another requirement may be for the intelligent camera to run more than one task (that is, multiple neural networks) at once. Common additions are lane detection for lane-keeping assistance and semantic segmentation, which helps the car recognize the drivable space.

Multi-Sensor Systems

As we progress beyond L2 ADAS, the demand for processing performance gets even steeper. Moving towards greater autonomy takes us into more complex multi-sensor and multi-modal systems.

Most carmakers and automotive AI developers today recognize that the camera has its shortfalls and needs to be supported by other types of sensors to ensure the basic reliability of ADAS/AV systems, that is, to make a system that is safe under all road conditions. When the camera’s view is obscured by low light, fog, heavy rain or dust, Radar or LiDAR can be used (4,5). LiDAR is making its way into mass production, while Radar is already used in L2/L2+ systems on the market.

AI processing of Radar signals has enabled object detection based on the Radar image alone (1,2). However, VRU protection is more accurate, reliable and robust when Radar is used alongside one or more high-resolution cameras. In practice, a Radar is added to the forward-facing system to provide all-weather and nighttime object detection. At the processing level, this adds a new data pipeline that requires additional AI processing capacity, roughly doubling the processing requirement in our VRU example.
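The doubling can be expressed as a simple compute budget. The sketch below is a hypothetical illustration: it sums the sustained load of each concurrent pipeline (the same approach covers extra camera tasks such as lane detection) and divides by an assumed utilization factor, since accelerators rarely sustain their rated peak on real networks. The Radar load is set equal to the camera’s ~10 TOPS only to mirror the text’s rough estimate, and the 0.7 utilization is a placeholder, not a measured figure.

```python
from typing import Dict


def required_peak_tops(loads_tops: Dict[str, float], utilization: float = 0.7) -> float:
    """Rated (peak) TOPS an accelerator would need to run all loads concurrently.

    loads_tops: sustained load of each pipeline, in TOPS.
    utilization: assumed fraction of peak throughput actually achieved on real
    networks; 0.7 is a placeholder, not a measured figure.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return sum(loads_tops.values()) / utilization


camera_only = {"camera_detection": 10.0}
# Radar pipeline assumed to cost about the same as the camera pipeline,
# matching the text's "roughly double" estimate.
camera_and_radar = {"camera_detection": 10.0, "radar_detection": 10.0}

print(required_peak_tops(camera_only), required_peak_tops(camera_and_radar))
```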

The additional Radar is not just a failsafe for when the camera fails. It also enriches sensing, because working with multiple modalities provides wider coverage of corner cases (e.g. fog, occlusion). Sensor fusion brings divergent types of data into a single pipeline where the data is compared and cross-referenced, producing a single output for the vehicle to act on. Cameras and Radar each have strengths and shortfalls; combining them lets the system harness the strengths of each and produce more reliable and accurate perception results. The Euro NCAP 2025 Roadmap notes as much about evolving AEB systems: “More and more manufacturers are adding additional sensors and combining multiple sensor types together in “fusion” to offer the potential to address new and more complex crash scenarios” (3). This more reliable sensor system naturally comes with higher and more complex compute demands.
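To make the cross-referencing step more concrete, here is a minimal late-fusion sketch in Python. It is not the method of any particular product. It assumes each sensor already outputs a list of detections, that camera detections carry a bearing angle and a class label, and that Radar detections carry a bearing and a range. The class names (`CameraDet`, `RadarDet`, `FusedObject`), the 2° association gate and the greedy nearest-bearing matching are all hypothetical choices for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class CameraDet:
    bearing_deg: float  # horizontal angle relative to the vehicle's forward axis
    label: str          # class from the camera network, e.g. "pedestrian"
    score: float


@dataclass
class RadarDet:
    bearing_deg: float
    range_m: float
    radial_speed_mps: float


@dataclass
class FusedObject:
    bearing_deg: float
    range_m: Optional[float]  # None when only the camera saw the object
    label: str
    sources: Tuple[str, ...]


def fuse(cams: List[CameraDet], radars: List[RadarDet], gate_deg: float = 2.0) -> List[FusedObject]:
    """Greedy late fusion: pair each camera detection with the nearest Radar return in bearing."""
    fused: List[FusedObject] = []
    unmatched = list(radars)
    for cam in cams:
        best = min(unmatched, key=lambda r: abs(r.bearing_deg - cam.bearing_deg), default=None)
        if best is not None and abs(best.bearing_deg - cam.bearing_deg) <= gate_deg:
            unmatched.remove(best)
            # Camera supplies the class, Radar supplies the range.
            fused.append(FusedObject(cam.bearing_deg, best.range_m, cam.label, ("camera", "radar")))
        else:
            fused.append(FusedObject(cam.bearing_deg, None, cam.label, ("camera",)))
    # Radar-only returns are kept as unclassified objects (e.g. at night or in fog).
    for r in unmatched:
        fused.append(FusedObject(r.bearing_deg, r.range_m, "unknown", ("radar",)))
    return fused


cams = [CameraDet(1.0, "pedestrian", 0.9), CameraDet(-10.0, "car", 0.8)]
radars = [RadarDet(1.5, 40.0, -1.2), RadarDet(20.0, 60.0, 0.0)]
for obj in fuse(cams, radars):
    print(obj)
```

Here the pedestrian is confirmed by both sensors and gains a range, the car is camera-only, and the Radar return at 20° is kept even though the camera did not classify it. A production system would typically use a proper assignment algorithm and tracking over time rather than this greedy, single-frame matching.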

The Importance of Power Efficiency

Given the large and complex AI tasks described above, it is not surprising that higher-performing AI processors are at the heart of the assisted and autonomous driving revolution. As EE Times’ Egil Juliussen put it in his recent article:

“In general, the more complex the problem, the more complex the neural network model must be. That implies large models… Large models, especially for AVs, require tremendous computing resources to crunch sensor data and support complex software. Those resources also require power, which is always limited in auto applications” (6).

The main challenge with high performance is heat dissipation. All the computers loaded into the car produce large amounts of heat that has to go somewhere; otherwise it will cause system failures. Every thermal design and cooling method has its limits: only so much heat can be dissipated. Piling on cooling solutions adds weight to the vehicle, which reduces the range it can drive before refueling or recharging (and the size and capacity of energy storage are limited in a similar way).

The more computing throughput you can get for every watt of power consumed, the better. Traditional processing architectures such as CPUs and GPUs have not provided sufficient power efficiency, which has limited how much compute can be added to a vehicle. This barrier is being broken down by the new generation of AI processing technology. Dedicated AI processors provide high power efficiency (high processing throughput per watt), which allows more AI processing to be packed into the vehicle and makes the more compute-intensive higher levels of autonomy possible.
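Throughput per watt translates directly into a power and heat budget: power equals the required throughput divided by the efficiency, and nearly all of that electrical power ends up as heat that the cooling system must remove. The sketch below uses 20 TOPS (the camera-plus-Radar estimate from the VRU example), while the 10 W thermal budget and the two efficiency figures are hypothetical placeholders, not specifications of any real processor.

```python
from dataclasses import dataclass


@dataclass
class Accelerator:
    name: str             # hypothetical label, not a real product
    tops_per_watt: float  # hypothetical sustained efficiency


def power_watts(required_tops: float, acc: Accelerator) -> float:
    """Electrical power needed to sustain required_tops; nearly all of it becomes heat."""
    return required_tops / acc.tops_per_watt


def fits_budget(required_tops: float, acc: Accelerator, budget_w: float) -> bool:
    """True if the accelerator's heat output stays within the cooling budget."""
    return power_watts(required_tops, acc) <= budget_w


REQUIRED_TOPS = 20.0  # camera + Radar, roughly double the ~10 TOPS camera-only estimate
BUDGET_W = 10.0       # hypothetical thermal budget for the perception module

for acc in (Accelerator("low_efficiency", 1.0), Accelerator("high_efficiency", 10.0)):
    print(acc.name, power_watts(REQUIRED_TOPS, acc), fits_budget(REQUIRED_TOPS, acc, BUDGET_W))
```

At the same workload, a tenfold difference in efficiency is the difference between 20 W, which overshoots the assumed budget, and 2 W, which leaves room to add more sensors or neural tasks.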


What About Software?

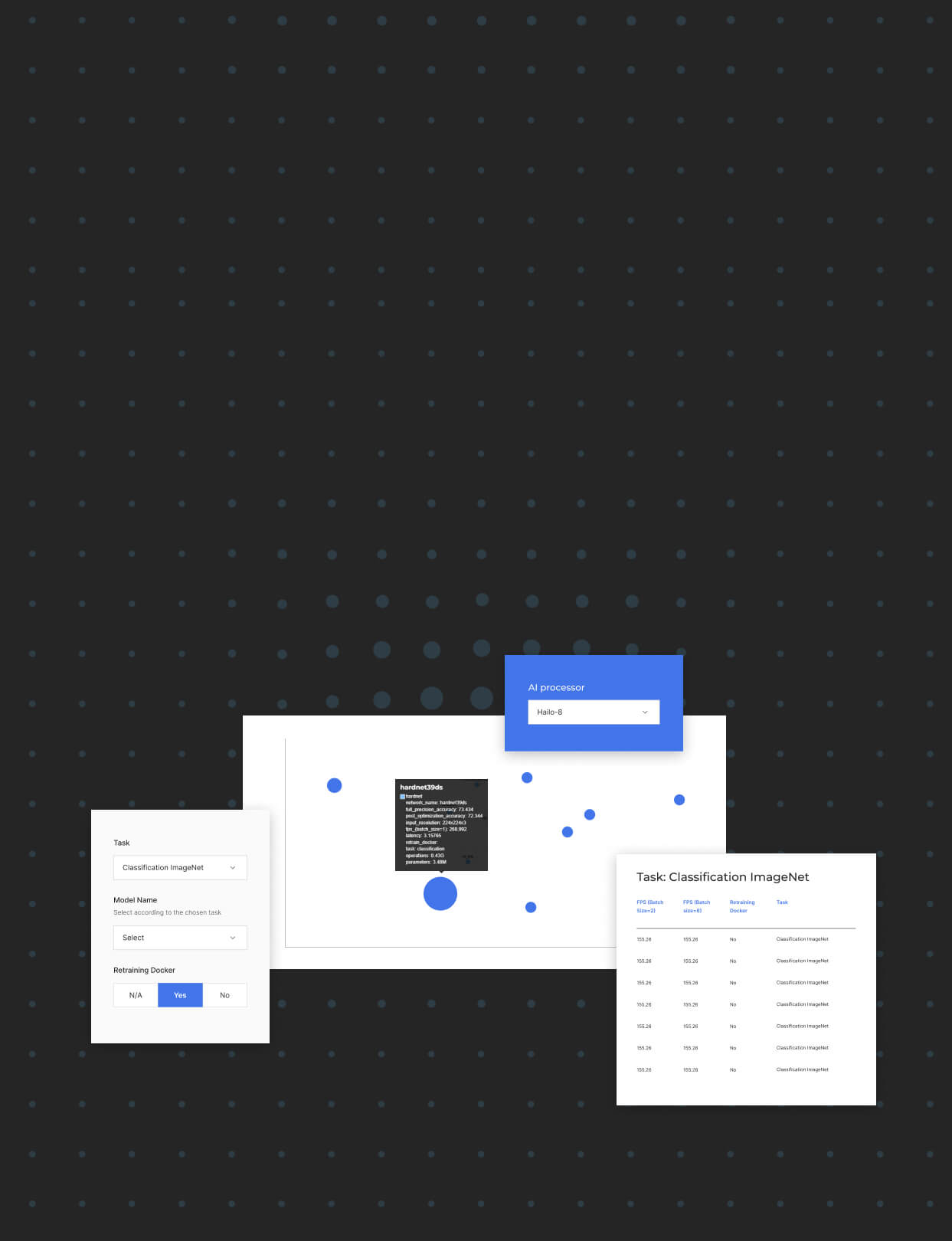

Dedicated efficient hardware is just part of the equation. Today the focus is on software-defined architectures, in which processing hardware is tightly coupled with its software. Given the complexity of intelligent automotive systems and the level of safety regulation, it is more important than ever that automotive OEMs developing high-level ADAS software have dynamic, flexible and scalable hardware and (abstraction-level) software to build upon. There is no room for walled gardens and black boxes when the goal is software-defined vehicles that continue to evolve in customers’ hands via OTA (over-the-air) updates. How else will automakers keep up with evolving AI technology and research? They need to be able to update and expand their systems so that they always use state-of-the-art neural network models and can add the functionality (e.g. multiple neural tasks and applications) that becomes available.


Sources:

(1) https://www.iss.uni-stuttgart.de/forschung/publikationen/kpatel_RadarClassification.pdf

(2) https://www.greencarcongress.com/2020/06/20200629-princeton.html

(4) https://www.eetimes.com/demystifying-lidar-an-in-depth-guide-to-the-great-wavelength-debate/

(5) https://www.eetimes.com/radar-safety-warning-dont-cross-the-streams/
