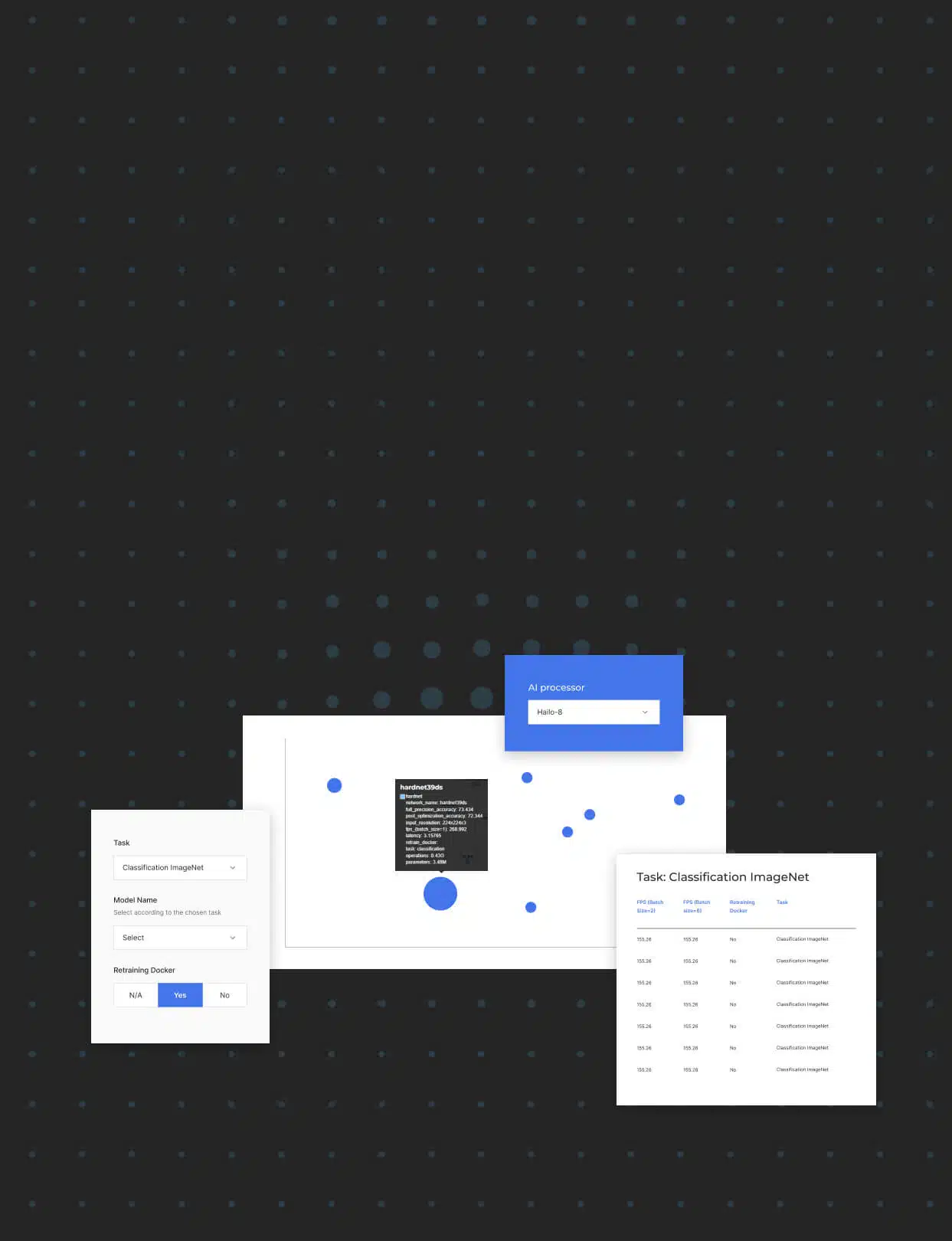

- ProductsAI AcceleratorsRecommended blog post

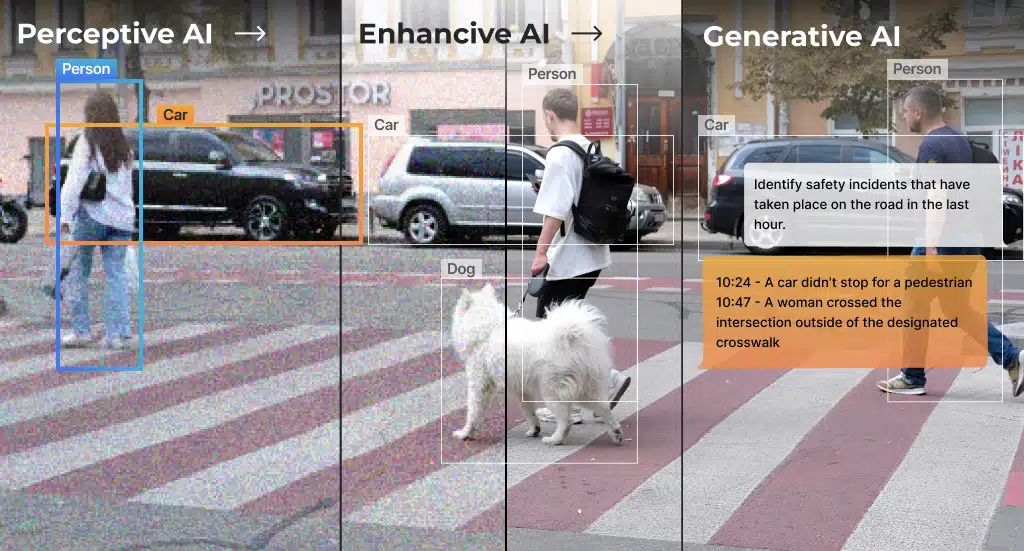

AI Vision ProcessorsRecommended blog post

AI Vision ProcessorsRecommended blog post

- ApplicationsAutomotiveSecurityIndustrial AutomationRetailPersonal ComputeAutomotiveRecommended blog postsSecurityDownload our e-Books

Industrial AutomationCustomer story

Industrial AutomationCustomer story RetailCustomer story

RetailCustomer story Personal ComputeRecommended blog posts

Personal ComputeRecommended blog posts
