Our 1st Hackathon: One Day to Develop a Winning AI App with the Hailo TAPPAS Toolkit

Introducing TAPPAS

The MAD team is Hailo’s first and friendliest customer. We develop deep-learning-based demos using the Hailo-8 AI Processor and its accompanying software toolchain. Our demos cover a variety of use cases and industries, including Automotive, Smart City, Smart Factory and more. Our goal is to showcase Hailo’s capabilities and enable easy and rapid prototyping of deep learning applications at the edge.

To that end, we deliver a framework called TAPPAS. TAPPAS includes bite-sized applications and tools that our customers can use and build upon to create applications that suit their needs. We developed TAPPAS to make it easy to ramp up solutions based on Hailo’s platform, so it includes pre-made demos and enables fast integration of users’ networks.

Working with a high-performance dataflow AI accelerator like the Hailo-8 – and thus with high frame rates and high resolutions – requires a solid framework for video processing pipelines. The TAPPAS framework is meant to take care of these tasks, so the user can focus on their core technology. For example, a robotics company should spend its time building navigation algorithms, not working out how to pass the video input into the AI engine efficiently. The TAPPAS framework provides ready-made templates for standard applications that include functionalities like display and data pipelining, video capture and video manipulation.

Why a Hackathon?

As part of our continuous investment in building the TAPPAS framework, we are looking for new application ideas – real-world use cases and pain points that call for edge AI solutions. Hailo’s technology brings game-changing AI capabilities to edge devices, enabling solutions that were impossible just a few years ago. The potential applications and products are extensive, so we took this opportunity to let our talented engineers and developers explore them. With the Hailo-8 AI processor coming into wide availability, we also wanted to test the ease of use of our framework. A hackathon is a terrific way to both generate ideas and evaluate usage, as well as to support employee engagement, especially after a long period of working from home due to Covid-19 restrictions.

The Hailo AI Applications Hackathon

The Hackathon took place at Hailo headquarters. Around half of the company’s employees participated and brought their ideas to life using Hailo’s platform. For 24 hours the teams built their projects and had tons of fun.

Presenting the Hackathon winners:

AI video conferencing utilities, integrated into your favorite web-communication application, including a deep-fake-like face swapper, a cartoon-style transfer, and a background face anonymizer.

1st place: AI-based video conferencing plugin

The app’s architecture includes 4 main video-processing components:

- Face detection NN

- Landmark extraction NN

- Face Swap

- Style Transfer NN

All the networks were ported to the Hailo-8 AI processor for the Hackathon and combined into a single application flow with the TAPPAS platform. The project presented 3 new networks running in parallel on one Hailo-8 processor and exported the application output as a “virtual camera” that the video conferencing application can use. The face swap uses landmark extraction to map an input face to a target face. The advantage of this method is that you do not need to retrain a network to switch the input face – the face is an input to the application and can be extracted directly from the input stream.
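To illustrate how landmarks can map one face onto another, here is a minimal sketch that fits a similarity transform (scale, rotation, translation) between two sets of matching landmarks. This is not the team's implementation: the function names and the choice of a similarity transform are assumptions made for illustration, and a real face swap would typically warp many local regions rather than apply one global transform.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def fit_similarity(src: List[Point], dst: List[Point]) -> Tuple[complex, complex]:
    """Least-squares fit of dst ~= a * src + b, treating 2D points as complex numbers.

    `a` encodes scale and rotation, `b` encodes translation.
    Hypothetical helper, not part of TAPPAS.
    """
    if len(src) != len(dst) or len(src) < 2:
        raise ValueError("need at least two matching landmark pairs")
    z = [complex(x, y) for x, y in src]
    w = [complex(x, y) for x, y in dst]
    z_mean = sum(z) / len(z)
    w_mean = sum(w) / len(w)
    # Closed-form solution of the complex linear regression.
    num = sum((wi - w_mean) * (zi - z_mean).conjugate() for zi, wi in zip(z, w))
    den = sum(abs(zi - z_mean) ** 2 for zi in z)
    if den == 0:
        raise ValueError("source landmarks are all identical")
    a = num / den
    b = w_mean - a * z_mean
    return a, b


def apply_similarity(a: complex, b: complex, point: Point) -> Point:
    """Map a single point from the source face frame to the target face frame."""
    q = a * complex(*point) + b
    return (q.real, q.imag)
```

Because the transform is computed from landmarks at run time, switching the input face only changes the data passed to `fit_similarity`, which mirrors the no-retraining property described above.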

This application is especially relevant today, with remote work as widespread as it is. Current video conferencing platforms already have some AI features, but these are resource-intensive and limited to powerful platforms. Running this kind of application on the Hailo-8 device allows real-time performance that does not drain the host processor and system resources. We expect to integrate some of these new networks and tools into the TAPPAS framework soon.

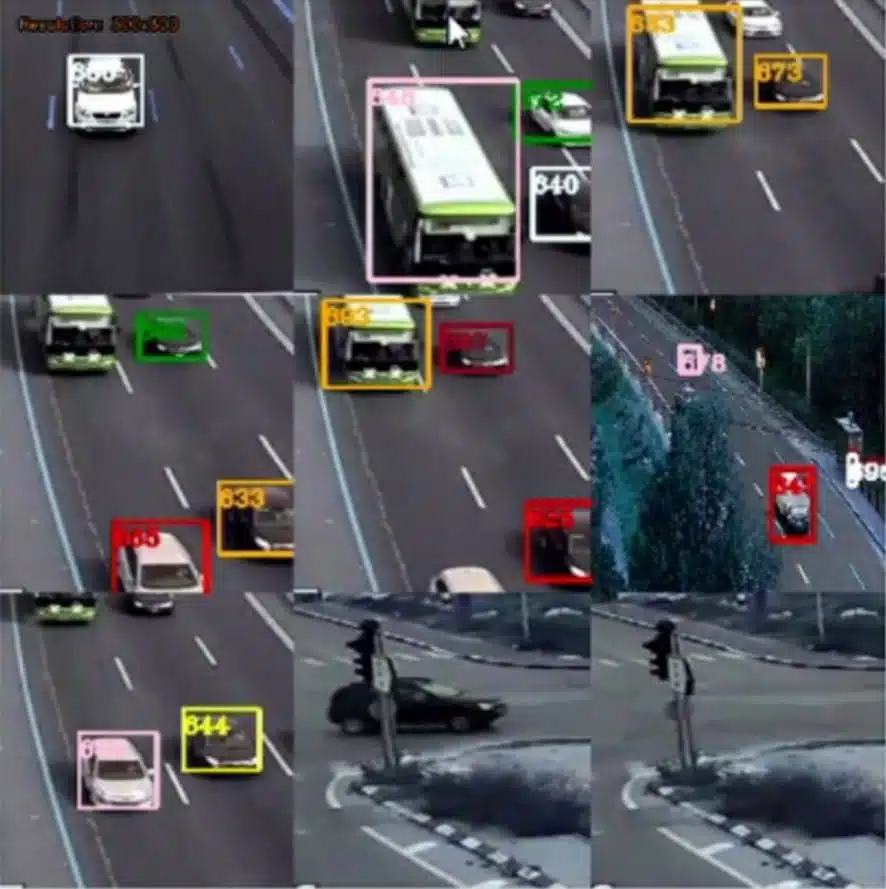

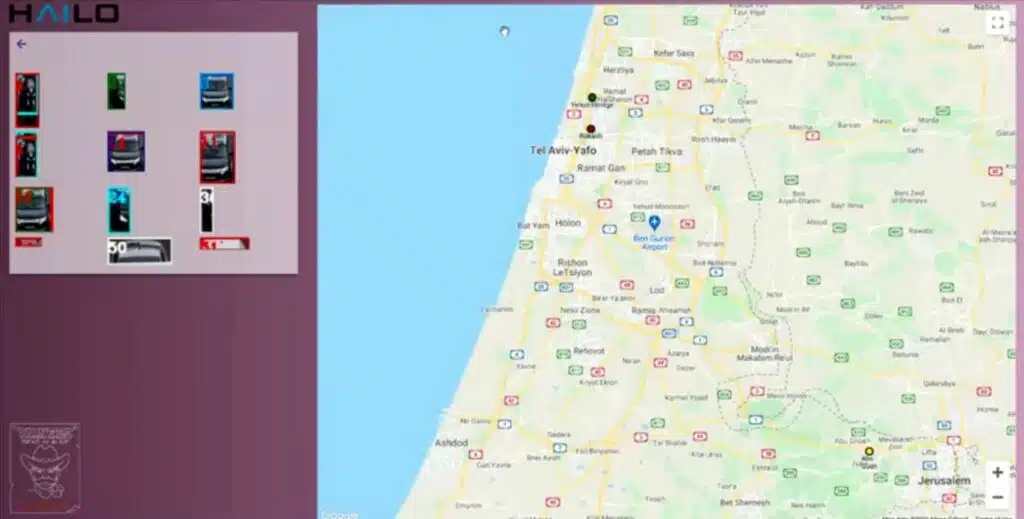

2nd place: road traffic analyzer

The team used a network pre-trained on the VisDrone dataset along with a TAPPAS multi-stream application as a starting point. The network’s inputs were live video streams from online traffic cameras and its outputs were used to calculate driving speed, traffic congestion and more. These functionalities were wrapped up nicely with GUIs, database integration, and web hosting, achieving full integration in just 24 hours – amazing!
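As a rough illustration of how detector outputs can be turned into a driving speed, the sketch below computes speed from the tracked centroid of one vehicle. It is not the team's code: the track layout, the function name, and above all the single `meters_per_pixel` scale are assumptions. A real traffic camera would need a perspective calibration rather than one constant scale.

```python
import math
from typing import List, Tuple

# (frame_index, x_pixels, y_pixels) for one tracked vehicle; hypothetical layout.
TrackPoint = Tuple[int, float, float]


def estimate_speed_kmh(track: List[TrackPoint], fps: float, meters_per_pixel: float) -> float:
    """Estimate average speed along a track, assuming a constant ground scale."""
    if len(track) < 2:
        raise ValueError("need at least two track points")
    if fps <= 0 or meters_per_pixel <= 0:
        raise ValueError("fps and meters_per_pixel must be positive")
    pts = sorted(track)  # order by frame index
    elapsed_frames = pts[-1][0] - pts[0][0]
    if elapsed_frames <= 0:
        raise ValueError("track must span more than one frame")
    # Sum the pixel distance travelled between consecutive observations.
    path_px = sum(
        math.hypot(x2 - x1, y2 - y1)
        for (_, x1, y1), (_, x2, y2) in zip(pts, pts[1:])
    )
    meters = path_px * meters_per_pixel
    seconds = elapsed_frames / fps
    return meters / seconds * 3.6  # m/s to km/h
```

A congestion metric could be built the same way, for example by counting the tracks active in a region of the frame over a time window.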

Analyzing data from live cameras for traffic monitoring serves Smart City, Public Safety, and some commercial applications. It seems that cameras are popping up on every street. In current solutions, the video is mostly low resolution and is streamed to the cloud for viewing and storage, which is why it is most often used for after-the-fact investigation and not for real-time alerts or prevention. In contrast, using the Hailo-8 in an edge device (like a camera or an Edge AI Box) makes it possible to run inference locally and in real time, thereby enabling real-time alerts and actions. Another benefit is that you do not need to transmit and store large amounts of data, only the data that triggers an action. Examples include the license plate number and a few seconds of video of a car running a red light, or the vehicle count on a given stretch of road over a given period for congestion monitoring.

You can read more about these kinds of use cases in our Revolutionizing AI Applications at the Edge white paper, as well as in the blog: “How the Edge AI Box Can Accelerate Adoption of AI for Surveillance and Security”.

3rd place: “Plailo” video game gesture control

Everybody likes video games. We wanted to be able to maximize our experience and play with friends. Building upon the TAPPAS CenterPose app, we created a tool to convert body poses and gestures into keystrokes, which allowed us to use a webcam to detect player movements in real time and convert them to game controls. As the player jumps, ducks, and moves from side to side, so does the avatar on screen.

The simple tool we created allows quick integration with any available application. There is no need to recompile or modify the application – anything controlled by keyboard or mouse can be controlled with body movements. The hardware required for this is a simple webcam and the Hailo-8 processor (we used our M.2 AI Acceleration module plugged into a laptop).
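The pose-to-keystroke idea can be sketched as a small mapping function. Everything here is an assumption for illustration: the keypoint names, the normalized coordinate convention (0 to 1, with y growing downward), the thresholds, and the key names. Sending the resulting keys to the operating system is left out and would need a separate input-injection library.

```python
from typing import Dict, Set, Tuple

Keypoint = Tuple[float, float]  # normalized (x, y), y grows downward


def pose_to_keys(
    keypoints: Dict[str, Keypoint],
    neutral_hip_y: float,
    vertical_margin: float = 0.08,
    side_margin: float = 0.2,
) -> Set[str]:
    """Translate one frame of pose keypoints into a set of pressed keys.

    `neutral_hip_y` is the hip height recorded while the player stands still.
    """
    left, right = keypoints["left_hip"], keypoints["right_hip"]
    hip_x = (left[0] + right[0]) / 2
    hip_y = (left[1] + right[1]) / 2

    keys: Set[str] = set()
    if hip_y < neutral_hip_y - vertical_margin:
        keys.add("up")  # hips rose: player jumped
    elif hip_y > neutral_hip_y + vertical_margin:
        keys.add("down")  # hips dropped: player ducked
    if hip_x < 0.5 - side_margin:
        keys.add("left")
    elif hip_x > 0.5 + side_margin:
        keys.add("right")
    return keys
```

Because the function only emits key names, the game itself needs no changes, which matches the no-recompile property described above. Depending on whether the webcam image is mirrored, "left" and "right" may need to be swapped.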

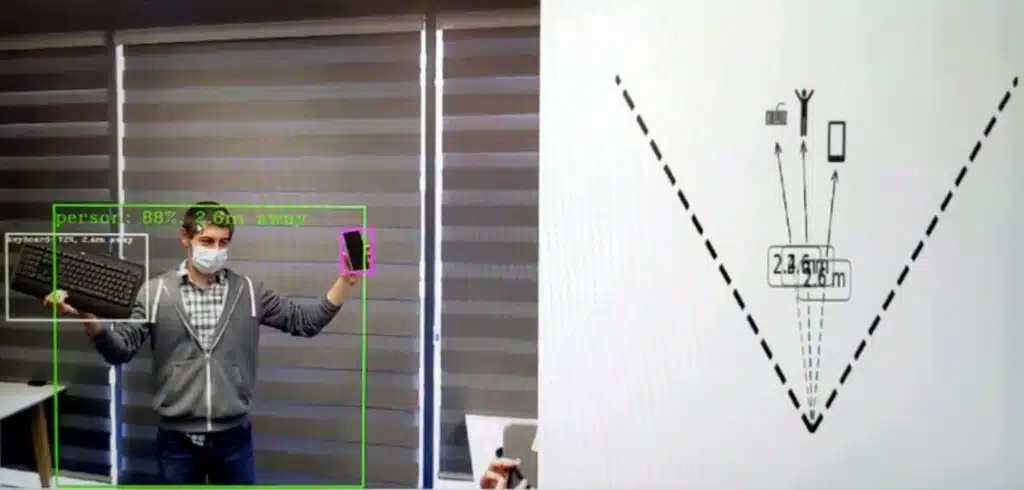

Runner-up: Deep-X – a wearable seeing aid

The Hailo-8 AI processor is small, low-power and highly efficient, and is thus well suited to intelligent wearables, especially ones like Deep-X that require high processing capabilities. The project created a prototype of a wearable aid for the visually impaired. The device detects objects, estimates their distance from the wearer, and supports navigation with spoken guidance.

Instead of running a depth estimation network on a single camera, the solution runs a state-of-the-art detection network (YOLOv5) on 2 stereo cameras, which enables depth estimation via triangulation. The distance calculation is selective – it is performed only on objects deemed “relevant” by the object detector. This frees up compute capacity and, among other things, makes it possible to run state-of-the-art (i.e. highly accurate) object detection models under the power and form-factor constraints of the edge. This is an interesting concept that can be replicated in other applications where depth or spatial positioning is required only for specific objects.

Note that the solution does not require co-linear stereo cameras. Any 2 or more cameras in different positions can be used to triangulate the location of the detected objects.
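Triangulation from two arbitrarily placed cameras can be sketched with the midpoint method: each camera contributes a ray from its position toward the detected object, and the object is estimated as the point halfway between the closest points on the two rays. This is a generic textbook technique and not necessarily what Deep-X used. The function name is hypothetical, and the ray directions are assumed to come from camera calibration plus the center of each detection box.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _dot(u: Vec3, v: Vec3) -> float:
    return u[0] * v[0] + u[1] * v[1] + u[2] * v[2]


def triangulate_midpoint(c1: Vec3, d1: Vec3, c2: Vec3, d2: Vec3) -> Vec3:
    """Estimate a 3D point from two rays (camera center c, direction d).

    Returns the midpoint of the shortest segment joining the two rays.
    """
    w = (c1[0] - c2[0], c1[1] - c2[1], c1[2] - c2[2])
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w), _dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; cannot triangulate")
    s = (b * e - c * d) / denom  # parameter along ray 1
    t = (a * e - b * d) / denom  # parameter along ray 2
    p1 = tuple(c1[i] + s * d1[i] for i in range(3))
    p2 = tuple(c2[i] + t * d2[i] for i in range(3))
    return tuple((p1[i] + p2[i]) / 2 for i in range(3))
```

In the selective scheme described above, this calculation would run only for the detections the object detector marks as relevant, so its cost scales with the number of such objects rather than with image resolution.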