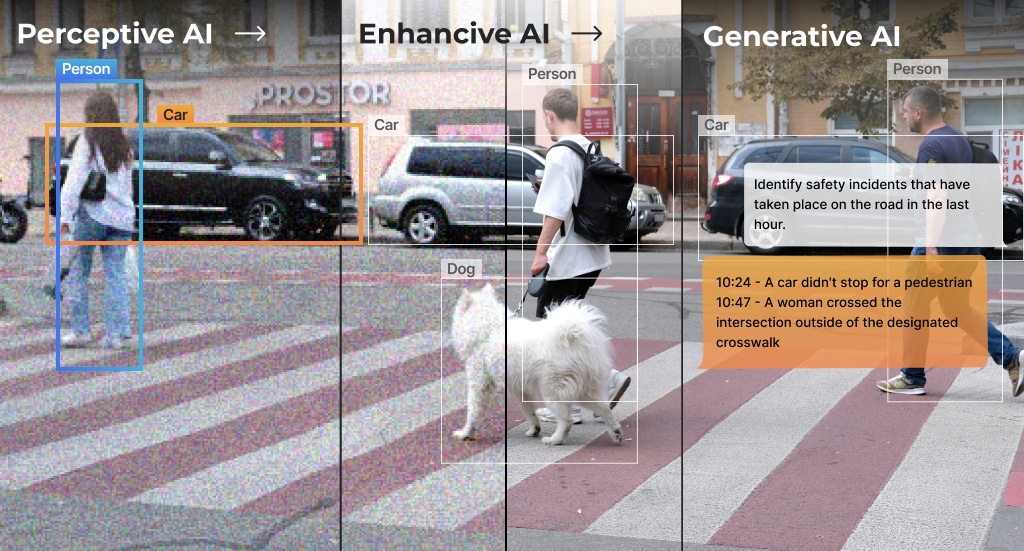

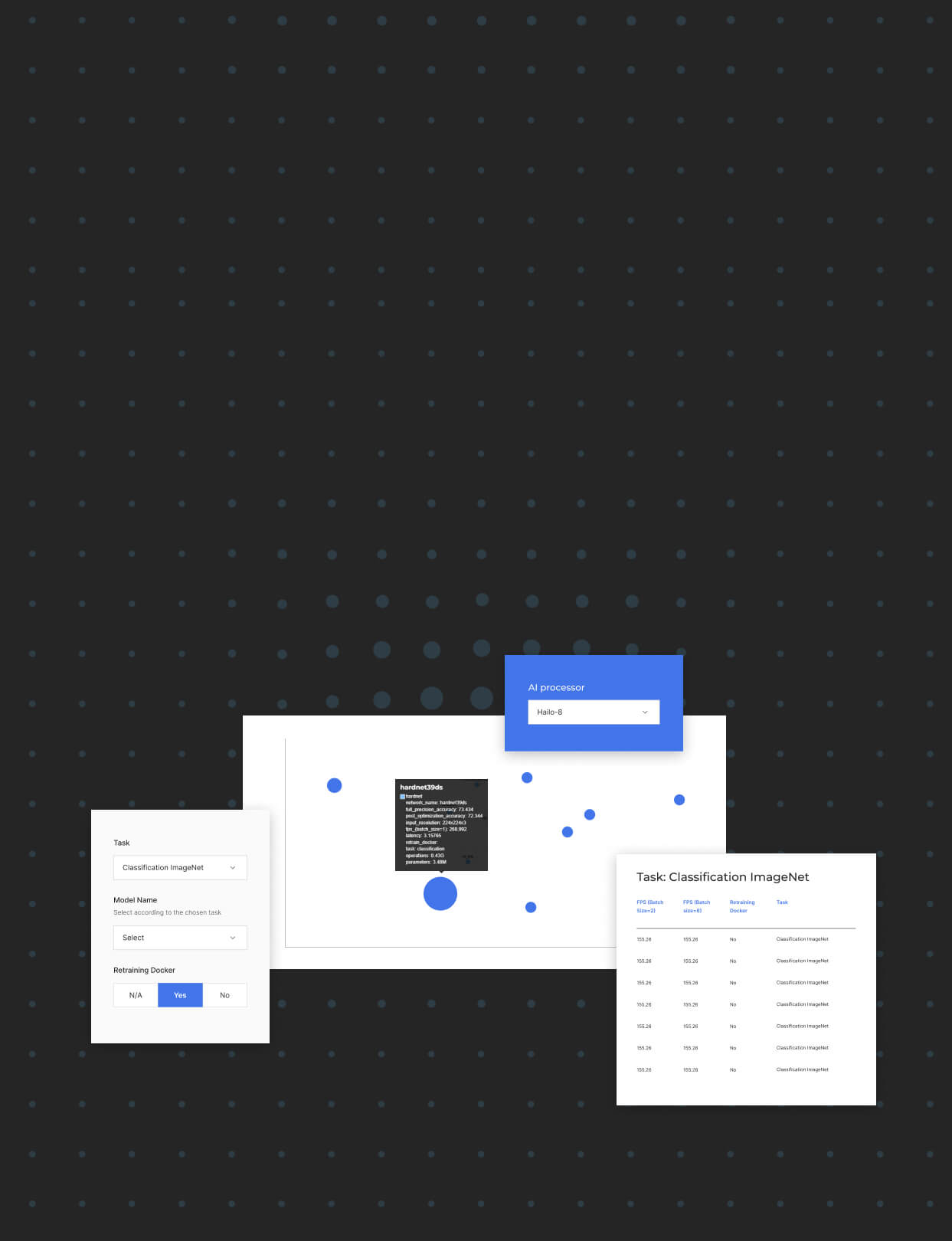

Detection apps offering a choice of neural networks, each with distinct functionality and support for multiple streams

Multi-stream object detection is used across many industries, including demanding applications such as Smart City traffic management and Intelligent Transportation Systems (ITS). You can use your own object detection network or rely on pre-built models such as YOLOv5m, all of which are trained on the COCO dataset. Notably, these models support capabilities such as Tiling, which uses Hailo-8’s high throughput to handle high-resolution images (FHD, 4K) by dividing them into smaller tiles. Processing high-resolution images is particularly useful in crowded locations and public safety applications where small objects abound, for example in crowd analytics for Retail and Smart Cities.
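To make the tiling idea concrete, here is a minimal Python sketch of how a high-resolution frame could be divided into tiles and how a detection found inside a tile could be mapped back to full-frame coordinates. It is an illustration only and not Hailo's implementation: the function names (`tile_origins`, `split_into_tiles`, `to_frame_coords`), the 640x640 tile size, and the overlap parameter are all assumptions chosen for the example.

```python
from typing import List, Tuple

# (x_min, y_min, x_max, y_max) in pixels
Box = Tuple[int, int, int, int]


def tile_origins(length: int, tile: int, overlap: int) -> List[int]:
    """Start offsets along one axis so tiles of size `tile` cover `length`."""
    if tile >= length:
        return [0]
    stride = tile - overlap
    if stride <= 0:
        raise ValueError("overlap must be smaller than the tile size")
    origins = list(range(0, length - tile, stride))
    origins.append(length - tile)  # last tile sits flush with the far edge
    return origins


def split_into_tiles(width: int, height: int,
                     tile_w: int, tile_h: int, overlap: int = 0) -> List[Box]:
    """Return tile rectangles (row by row) that together cover the frame."""
    return [
        (x, y, min(x + tile_w, width), min(y + tile_h, height))
        for y in tile_origins(height, tile_h, overlap)
        for x in tile_origins(width, tile_w, overlap)
    ]


def to_frame_coords(box: Box, tile: Box) -> Box:
    """Shift a box detected inside a tile back into full-frame coordinates."""
    x0, y0 = tile[0], tile[1]
    return (box[0] + x0, box[1] + y0, box[2] + x0, box[3] + y0)


# Hypothetical example: an FHD frame split into 640x640 tiles (assumed size).
tiles = split_into_tiles(1920, 1080, 640, 640)
```

For a 1920x1080 frame with 640x640 tiles and no overlap, this produces a 3x2 grid of six tiles, with the bottom row shifted up so it ends at the frame edge. A real pipeline would typically also merge duplicate detections that appear in overlapping tiles, for example with non-maximum suppression, which this sketch leaves out.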