Overview

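Hailo devices come with a comprehensive AI software suite that enables deep learning model compilation and the deployment of AI applications in production environments. The model build environment integrates seamlessly with common machine learning frameworks, so it fits smoothly and easily into existing development ecosystems. The runtime environment supports integration and deployment in products based on x86 and ARM host processors (using Hailo-8™) as well as in Hailo-15™ vision processors.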


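Model Zoo: a variety of common and state-of-the-art pre-trained models and tasks in TensorFlow and ONNX

Dataflow Compiler: offline compilation and optimization of user models for Hailo devices

TAPPAS: a complete set of application examples implementing pipeline elements and pre-trained AI tasks

HailoRT: a production-grade, lightweight runtime package that runs on the host processor and performs real-time inference of deep learning models compiled by the Dataflow Compiler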

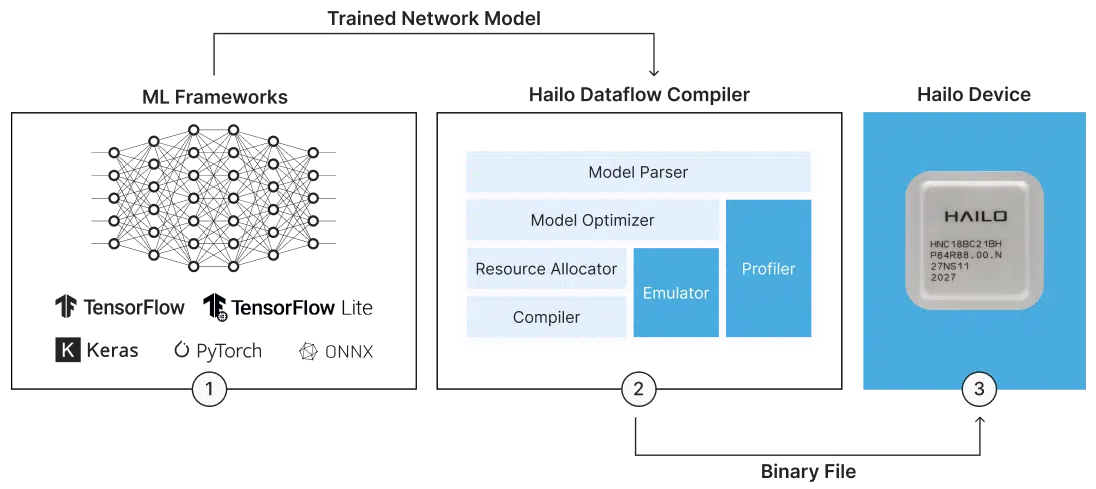

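Hailo devices come with a comprehensive AI SDK toolchain that works seamlessly with existing deep learning development frameworks, so it fits easily into existing development ecosystems.

Translates models from industry-standard frameworks into the Hailo format

Converts numerical information into an internal representation using state-of-the-art quantization algorithms

Allocates the resources required by the user's network to the physical resources of the Hailo device

Compiles the model into a Hailo binary with a dedicated deep learning compiler

Loads the binary onto the specified Hailo device and runs inference

The SDK supports both standalone inference with direct device access and easy integration into existing environments, such as running inference from within TensorFlow

An emulator accurately simulates chip behavior

A profiler estimates chip performance (e.g., FPS, power, and latency)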
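The quantization step listed above maps floating-point values to a compact integer representation. The source describes Hailo's algorithms only as state-of-the-art, so the sketch below is a generic textbook illustration: asymmetric (affine) uint8 quantization with a scale and zero point. It is not Hailo's algorithm, and the function names are hypothetical.

```python
def quantize_uint8(values):
    """Generic affine quantization: q = round(v / scale) + zero_point, clipped to [0, 255]."""
    lo = min(min(values), 0.0)  # include 0.0 so it maps exactly to an integer
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255 if hi > lo else 1.0
    zero_point = round(-lo / scale)
    q = [max(0, min(255, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize(q, scale, zero_point):
    """Recover approximate floats from the integer representation."""
    return [(x - zero_point) * scale for x in q]


weights = [-1.2, -0.3, 0.0, 0.45, 2.1]
q, scale, zp = quantize_uint8(weights)
restored = dequantize(q, scale, zp)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, zp, max_err)
```

Without clipping, the reconstruction error of each value is at most half of one quantization step (`scale / 2`); production quantizers add calibration data and per-channel scales to keep accuracy close to full precision.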

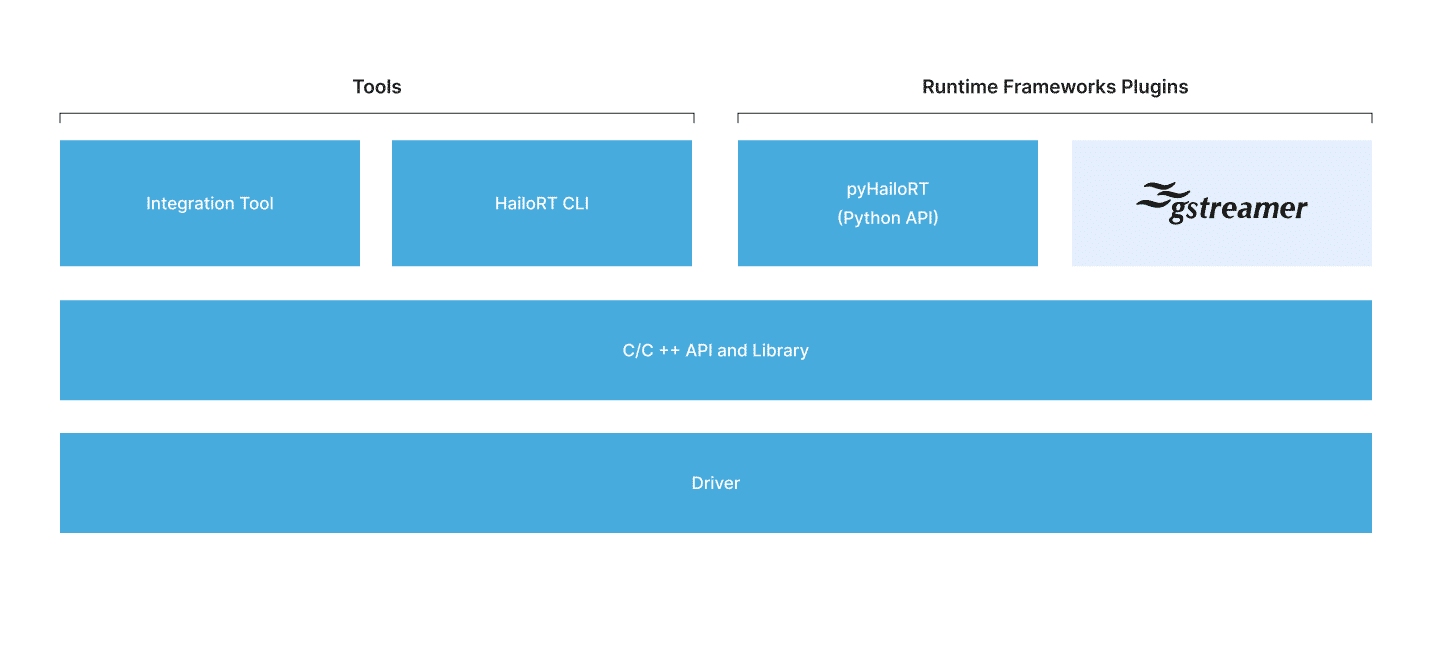

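HailoRT is a production-grade, lightweight, scalable runtime software package that runs on the host processor and performs real-time inference on production platforms that use Hailo devices. It enables developers to build simple and fast pipelines for AI applications, suitable for evaluation, prototyping, and production. HailoRT can achieve high-throughput inference using one or more Hailo devices, and it is available as open-source software on the Hailo GitHub.

Multi-host architecture support – supports x86 and ARM architectures

Flexible interfaces for AI applications – C/C++ and Python interfaces

Easy integration with devices and pipelines – includes plugins for the GStreamer framework

Multi-stream support – processes multiple video streams simultaneously

High-throughput inference on up to 16 Hailo-8™ devices

Seamless Hailo device control – bidirectional control and data communication interfaces with Hailo-8™ devices

Integration tools – verification tools for hardware integration of Hailo-8™ M.2 and Hailo-8™ mPCIe modules

Command-line application for controlling Hailo devices, running inference on them, and collecting inference statistics and device events

Robust user-space runtime C/C++ API for controlling Hailo devices and transferring data to and from them

Enables integration of Hailo software into Yocto environments (Zeus, Dunfell, Hardknott, Gatesgarth)

Includes recipes for the HailoRT library, pyHailoRT, and the PCIe driver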

The Hailo Model Zoo provides deep learning models for various computer vision tasks. The pre-trained models can be used to create fast prototypes on Hailo devices.

Additionally, the Hailo Model Zoo GitHub repository lets users quickly and easily reproduce Hailo-8’s published performance on the common models and architectures included in the Model Zoo.

A variety of common and state-of-the-art pre-trained models and tasks in TensorFlow and ONNX

Model details, including full precision accuracy vs. quantized model accuracy measured on Hailo-8

Each model also includes a binary HEF file that is fully supported in the Hailo toolchain and Application suite (for registered users only)

The Hailo Model Explorer is a dynamic tool designed to help users explore the models on the Model Zoo and select the best NN models for their AI applications.

The Model Zoo lets users quickly and easily reproduce Hailo's published performance on the common models and architectures it includes, and retrain those models. The collection encompasses both common and state-of-the-art models available in TensorFlow and ONNX formats.

The pre-trained models can be used for rapidly prototyping on Hailo devices and each model is accompanied by a binary HEF file, fully supported within the Hailo toolchain and Application suite (accessible to registered users only).

Selecting an appropriate model for your application can be challenging because of factors such as inference speed, model availability, desired accuracy, licensing, and more. Inference speed is a special case, since it cannot easily be estimated without access to the target hardware.

Unfortunately, no single intrinsic model attribute (e.g., FLOPS, number of parameters, or activation map sizes) reliably predicts inference speed, and, to complicate things further, different hardware architectures have different optimal workloads. While it is possible to measure the inference time of each model, doing so can be tedious and time-consuming.
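Measuring inference time per model, as described above, usually means timing repeated calls on the target hardware. The helper below is a minimal, framework-agnostic sketch: `benchmark` and its parameters are hypothetical, and `infer` stands in for whatever inference call your runtime provides. It reports the median latency of sequential calls; pipelined or batched throughput on real hardware can be higher than `1 / latency`.

```python
import statistics
import time
from typing import Callable, Dict


def benchmark(infer: Callable[[object], object], sample: object,
              warmup: int = 5, runs: int = 50) -> Dict[str, float]:
    """Time sequential calls to infer(sample); report median latency and implied FPS."""
    for _ in range(warmup):  # discard the first calls (caches, lazy initialization)
        infer(sample)
    latencies = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(sample)
        latencies.append(time.perf_counter() - start)
    median_s = statistics.median(latencies)  # median is robust to occasional outliers
    fps = 1.0 / median_s if median_s > 0 else float("inf")
    return {"median_latency_ms": median_s * 1000.0, "fps": fps}


# Usage with a stand-in workload; replace the lambda with a real inference call.
stats = benchmark(lambda x: sum(v * v for v in x), list(range(10_000)))
print(f"{stats['median_latency_ms']:.3f} ms per call, {stats['fps']:.0f} FPS")
```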

The Model Explorer is designed to help users make better-informed decisions and achieve maximum efficiency on the Hailo platform. It offers an interactive interface with filters for Hailo device, task, model, FPS, and accuracy, allowing users to explore the many NN models in Hailo’s library.

Read more about how the Hailo Model Zoo works and what it can do on the Hailo Blog.

Hailo has developed TAPPAS to streamline the development and deployment of edge applications that require high AI performance. This reference applications software package helps users accelerate their time-to-market by reducing the amount of time and effort spent on development. TAPPAS includes a user-friendly GStreamer-based set of fully functional application examples that incorporate pipeline elements and pre-trained AI tasks. These examples are built on top of advanced Deep Neural Networks that showcase the best-in-class throughput and power efficiency of Hailo’s AI processors. In addition, TAPPAS illustrates how Hailo’s system integration works by showcasing specific use cases on predefined software and hardware platforms. By utilizing TAPPAS, you can simplify integration with Hailo’s runtime software stack and have a starting point to fine-tune your applications.

Object detection, which locates and classifies objects within an image, is a crucial computer vision task. Deep learning models trained on the COCO dataset, a popular dataset for object detection, offer varying tradeoffs between performance and accuracy. For instance, when running inference on Hailo-8, the YOLOv5m model achieves 218 FPS and 42.46 mAP, while the SSD-MobileNet-v1 model achieves 1055 FPS and 23.17 mAP. The COCO dataset includes 80 object classes for general usage scenarios, covering both indoor and outdoor scenes.
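The speed/accuracy tradeoff above can be made concrete with the two figures the paragraph quotes. The sketch below converts FPS into an average time per frame (`1000 / FPS` milliseconds, which is a throughput figure, not necessarily end-to-end latency) and picks the most accurate model that meets a required frame rate. `MODELS`, `frame_time_ms`, and `pick_model` are hypothetical names; only the FPS and mAP numbers come from the text.

```python
# FPS and COCO mAP on Hailo-8, as quoted in the paragraph above.
MODELS = {
    "YOLOv5m": {"fps": 218, "map": 42.46},
    "SSD-MobileNet-v1": {"fps": 1055, "map": 23.17},
}


def frame_time_ms(fps):
    """Average time budget per frame, in milliseconds, at a given throughput."""
    return 1000.0 / fps


def pick_model(min_fps, models=MODELS):
    """Return the most accurate model whose FPS meets min_fps, or None if none does."""
    candidates = [(spec["map"], name) for name, spec in models.items() if spec["fps"] >= min_fps]
    return max(candidates)[1] if candidates else None


for name, spec in MODELS.items():
    print(f"{name}: {frame_time_ms(spec['fps']):.2f} ms per frame at {spec['fps']} FPS")

print(pick_model(min_fps=30))   # YOLOv5m
print(pick_model(min_fps=500))  # SSD-MobileNet-v1
```

For a single 30 FPS camera both models fit, so the more accurate YOLOv5m wins; a workload that needs 500 FPS in aggregate (for example, many streams) only fits SSD-MobileNet-v1.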

The License Plate Recognition (LPR) pipeline, also known as Automatic Number Plate Recognition (ANPR), is commonly used in the Intelligent Transportation Systems (ITS) market. This example application demonstrates automatic model switching among three different networks in a complex pipeline: the YOLOv5m model for vehicle detection, the YOLOv4-tiny model for license plate detection, and the lprnet model for text extraction, all running in parallel.

Read more in our blog post
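The LPR pipeline described above is a cascade: each stage crops the region found by the previous one and hands it to the next network. The sketch below shows only that data flow, assuming frames are nested lists of pixels and boxes are end-exclusive pixel coordinates. The three callables stand in for the YOLOv5m, YOLOv4-tiny, and lprnet stages and are hypothetical stubs; this is not the TAPPAS or HailoRT API.

```python
from dataclasses import dataclass
from typing import Callable, List

Frame = List[List[int]]  # grayscale image as rows of pixel values


@dataclass(frozen=True)
class Box:
    x1: int  # left (inclusive)
    y1: int  # top (inclusive)
    x2: int  # right (exclusive)
    y2: int  # bottom (exclusive)


def crop(frame: Frame, box: Box) -> Frame:
    return [row[box.x1:box.x2] for row in frame[box.y1:box.y2]]


def recognize_plates(frame: Frame,
                     detect_vehicles: Callable[[Frame], List[Box]],
                     detect_plates: Callable[[Frame], List[Box]],
                     read_text: Callable[[Frame], str]) -> List[str]:
    """Vehicle detection -> plate detection on each vehicle crop -> text on each plate crop."""
    results = []
    for vehicle in detect_vehicles(frame):
        vehicle_img = crop(frame, vehicle)
        for plate in detect_plates(vehicle_img):  # plate boxes are relative to the vehicle crop
            results.append(read_text(crop(vehicle_img, plate)))
    return results


# Usage with stub "networks" that return fixed boxes and describe the crop they receive.
frame = [[0] * 100 for _ in range(60)]
plates = recognize_plates(
    frame,
    detect_vehicles=lambda img: [Box(10, 10, 60, 50)],
    detect_plates=lambda img: [Box(5, 25, 35, 35)],
    read_text=lambda img: f"plate {len(img[0])}x{len(img)}",
)
print(plates)  # ['plate 30x10']
```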

Multi-stream object detection is used in diverse applications across industries, including complex ones such as Smart City traffic management and Intelligent Transportation Systems (ITS). You can use your own object detection network or rely on pre-built models such as YOLOv5m, trained on the COCO dataset. Notably, these pipelines support capabilities such as tiling, which uses Hailo-8’s high throughput to handle high-resolution images (FHD, 4K) by dividing them into smaller tiles. Processing high-resolution images is particularly useful in crowded locations and public safety applications where small objects abound, for instance in crowd analytics for Retail and Smart Cities, among other use cases.

Multi-person re-identification across different streams is essential for security and retail applications. It involves identifying the same person multiple times, either at a specific location over time or along a trail across multiple locations. This example application demonstrates NN model switching between the YOLOv5s and repvgg_a0_person_reid deep learning models, trained on Hailo’s dataset, in a complex pipeline with inference-based decision-making. This is achieved with the model scheduler, an automatic model-switching tool that enables multiple models to be processed simultaneously at runtime.
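A common way to decide whether two person crops show the same individual is to compare feature vectors (embeddings) produced by a re-ID network. The sketch below assumes, without confirmation from the source, that the re-ID model outputs one embedding per detected person; it matches a query embedding against a gallery of known people with cosine similarity. The function names and the 0.7 threshold are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def match_identity(query, gallery, threshold=0.7):
    """Return the gallery ID most similar to query, or None if nothing reaches threshold."""
    best_id, best_sim = None, threshold
    for person_id, embedding in gallery.items():
        sim = cosine_similarity(query, embedding)
        if sim >= best_sim:
            best_id, best_sim = person_id, sim
    return best_id


# Toy 3-D embeddings; real re-ID embeddings are much longer.
gallery = {"person_1": [0.9, 0.1, 0.0], "person_2": [0.0, 0.8, 0.6]}
print(match_identity([0.85, 0.15, 0.05], gallery))  # person_1
print(match_identity([0.0, 0.0, 1.0], gallery))     # None: treat as a new identity
```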

Semantic segmentation assigns a specific class to each pixel of the input image, grouping pixels into distinct categories. It is commonly used in ADAS applications to let the vehicle determine where the road, sidewalk, other vehicles, and pedestrians are. It also improves defect detection in optical quality inspection for industrial automation, and increases the precision of detail detection in medical imaging cameras, retail cameras, and more. In this setup, the pipeline relies on the Cityscapes dataset, which contains images captured from a vehicle’s front-facing camera and covers 19 distinct classes. The pre-configured TAPPAS semantic segmentation pipeline demonstrates the compute capacity needed to process an FHD (1080p) input video stream with the FCN8-ResNet-v1-18 network.
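Assigning a class to each pixel typically means taking, for every pixel, the class with the highest score in the network's output. The sketch below assumes a class-major score layout (`logits[class][y][x]`) and uses three toy classes instead of the 19 Cityscapes classes; the actual output format of FCN8-ResNet-v1-18 on Hailo may differ, and `segment` is a hypothetical name.

```python
def segment(logits):
    """Per-pixel argmax: logits[c][y][x] -> label map[y][x] holding the best class index."""
    num_classes = len(logits)
    height, width = len(logits[0]), len(logits[0][0])
    return [
        [max(range(num_classes), key=lambda c: logits[c][y][x]) for x in range(width)]
        for y in range(height)
    ]


# 3 classes over a 2x2 image (e.g. 0 = road, 1 = sidewalk, 2 = vehicle).
logits = [
    [[0.90, 0.10], [0.20, 0.10]],  # class 0 scores
    [[0.05, 0.80], [0.10, 0.30]],  # class 1 scores
    [[0.05, 0.10], [0.70, 0.60]],  # class 2 scores
]
labels = segment(logits)
print(labels)  # [[0, 1], [2, 2]]
```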

Single-image depth estimation recovers depth, or distance, information from a 2D image and turns it into a 3D map. It helps automotive cameras better understand the distance to objects, supports industrial inspection cameras in tasks such as defect detection and quality control, and can improve the accuracy of person detection in security cameras by providing more detailed spatial information.

In this example, we use the fast_depth deep learning model, trained on the NYUv2 dataset, which predicts a distance matrix (a depth value for each pixel) with the same shape as the input frame.
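Because the depth output has the same shape as the input frame, it can be combined with other per-frame results, for example to estimate how far a detected object is. The sketch below assumes a hypothetical detector box `(x1, y1, x2, y2)` in frame pixel coordinates (end-exclusive) and takes the median depth inside it, which is less sensitive to background pixels at the box edges than the mean. Values are in whatever units the depth model predicts; `object_distance` is a hypothetical name.

```python
import statistics


def object_distance(depth, box):
    """Median of the per-pixel depth values inside box (x1, y1, x2, y2), end-exclusive."""
    x1, y1, x2, y2 = box
    values = [depth[y][x] for y in range(y1, y2) for x in range(x1, x2)]
    return statistics.median(values)


# 4x4 depth map: an object at ~1.2 in front of a background at 3.0,
# with one outlier pixel (9.0) inside the object's box.
depth = [
    [3.0, 3.0, 3.0, 3.0],
    [3.0, 1.2, 1.3, 3.0],
    [3.0, 1.1, 9.0, 3.0],
    [3.0, 3.0, 3.0, 3.0],
]
print(object_distance(depth, (1, 1, 3, 3)))  # median of 1.1, 1.2, 1.3, 9.0
```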

Instance segmentation combines the capabilities of object detection (identifying and categorizing objects) and semantic segmentation (assigning classes to individual pixels) to produce a distinct mask for each object in a scene. This is especially important when bounding boxes are too imprecise for localization and the application requires pixel-level differentiation between objects. This application uses either the yolov5seg or the YOLACT architecture, with the models trained on the COCO dataset.

Pose estimation is a computer vision technology that detects and tracks human body poses in images or videos. Its applications range from recognizing emergency situations at home or on the factory floor to analyzing customer behavior for better business outcomes. It involves localizing the different parts of the human body, such as the head, shoulders, arms, legs, and torso, and estimating their positions and orientations in 3D space. This pipeline uses a combination of centerpose models pre-trained on the COCO dataset.
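Once body parts are localized as keypoints, simple geometry turns them into pose features, for example the bend of an elbow or knee. The sketch below assumes 2D `(x, y)` keypoint coordinates, as in COCO-style keypoint outputs, and computes the angle at a joint from three keypoints. `joint_angle` is a hypothetical helper, not part of the TAPPAS pipeline.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at keypoint b between segments b->a and b->c (2D points)."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("keypoints must be distinct")
    cos_angle = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    cos_angle = max(-1.0, min(1.0, cos_angle))  # guard against rounding outside [-1, 1]
    return math.degrees(math.acos(cos_angle))


shoulder, elbow, wrist = (0.0, 0.0), (0.0, 1.0), (1.0, 1.0)
print(round(joint_angle(shoulder, elbow, wrist), 1))  # 90.0
```

A rule such as "knee angle near 180 degrees and hip keypoints near the floor" could then flag a possible fall, though any such thresholds would need tuning per application.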

Face detection is a common application of an object detection network specialized for a single object class: faces. The face detection network was trained on the WIDER dataset, and its output is the predicted bounding boxes of all faces in the frame. This application demonstrates how to crop the Regions of Interest (ROIs) produced by the detector and feed them to a second network that predicts facial landmarks for each detected face. Facial landmarks are important features for analyzing face orientation, structure, and more.
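Cropping detector ROIs for a second network usually involves two details: enlarging the box slightly so the whole face is included, and clamping it to the frame so crops near the edge stay valid. The sketch below shows both, assuming frames are nested lists of pixels and boxes are `(x1, y1, x2, y2)` with end-exclusive bounds. The 20% margin and the function names are hypothetical; resizing each crop to the landmark network's input size is omitted.

```python
import math


def expand_and_clamp(box, margin, width, height):
    """Grow box by a fractional margin on each side, then clamp it to the frame bounds."""
    x1, y1, x2, y2 = box
    dx = (x2 - x1) * margin
    dy = (y2 - y1) * margin
    return (
        max(0, math.floor(x1 - dx)),
        max(0, math.floor(y1 - dy)),
        min(width, math.ceil(x2 + dx)),
        min(height, math.ceil(y2 + dy)),
    )


def crop_rois(frame, boxes, margin=0.2):
    """Return one cropped sub-image per detected box, ready for a second network."""
    height, width = len(frame), len(frame[0])
    crops = []
    for box in boxes:
        x1, y1, x2, y2 = expand_and_clamp(box, margin, width, height)
        crops.append([row[x1:x2] for row in frame[y1:y2]])
    return crops


frame = [[0] * 100 for _ in range(80)]        # 100 x 80 frame
face_box = (90, 10, 100, 30)                  # face touching the right edge
print(expand_and_clamp(face_box, 0.2, 100, 80))  # (88, 6, 100, 34)
crops = crop_rois(frame, [face_box])
```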

To make full use of the processing power of Hailo devices on large input resolutions, an input frame can be divided into multiple tiles, with an object detector run on each tile individually. For instance, consider an object that occupies 10×10 pixels in a 4K input frame. Once the frame is resized for a 640×640 detector such as YOLOv5m, the object shrinks to only about one or two pixels across, making it nearly impossible to detect. Tiling addresses this by dividing the input frame into smaller patches, so the object can be detected within its tile without losing information to resizing. In this example, the tiles are shown as blue rectangles, and a pre-trained SSD-MobileNet-v1 model trained on the VisDrone dataset is used.
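The arithmetic behind tiling can be checked directly. The sketch below computes how large a 10-pixel object becomes when a 3840×2160 (4K UHD) frame is resized, preserving aspect ratio, to fit a 640-pixel detector input, and then generates an overlapping tile grid that covers the frame at native resolution. The 640-pixel tile size and 10% overlap are hypothetical parameters chosen for illustration (SSD-MobileNet-v1 may use a different input size), and merging detections across tile borders, for example with NMS, is omitted.

```python
def scaled_object_size(obj_px, frame_w, frame_h, det_size=640):
    """Object side length after an aspect-preserving resize to fit det_size x det_size."""
    return obj_px * det_size / max(frame_w, frame_h)


def _starts(length, tile, step):
    """Tile start offsets along one axis; the last tile is flush with the frame edge."""
    if length <= tile:
        return [0]
    starts = list(range(0, length - tile + 1, step))
    if starts[-1] + tile < length:
        starts.append(length - tile)
    return starts


def tile_grid(frame_w, frame_h, tile=640, overlap=0.1):
    """Return (x1, y1, x2, y2) tiles that cover the frame with fractional overlap."""
    step = max(1, round(tile * (1 - overlap)))
    return [
        (x, y, min(x + tile, frame_w), min(y + tile, frame_h))
        for y in _starts(frame_h, tile, step)
        for x in _starts(frame_w, tile, step)
    ]


print(round(scaled_object_size(10, 3840, 2160), 2))  # 1.67 pixels after resizing
tiles = tile_grid(3840, 2160)
print(len(tiles), tiles[0], tiles[-1])
```

Inside each 640×640 tile the object keeps its full 10×10 pixels, at the cost of running the detector once per tile; this is the kind of workload the high throughput mentioned above makes practical.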