How an Open Model Zoo Can Boost Your Edge AI System Development

Edge AI system development has been propelled forward by the emergence of new purpose-built processing architectures that let us do much more on the edge. An important, inseparable part of any edge AI offering is the dedicated software that enables the hardware. Every new chip comes with its own software stack and development environment, but the extent to which these actually enable developers differs.

To truly support developers, AI software must not only be robust but also give developers flexibility and shorten time-to-market with a surrounding suite of developer tools. An open AI Model Zoo is a valuable resource for developers and a key part of that suite.

What is a Model Zoo?

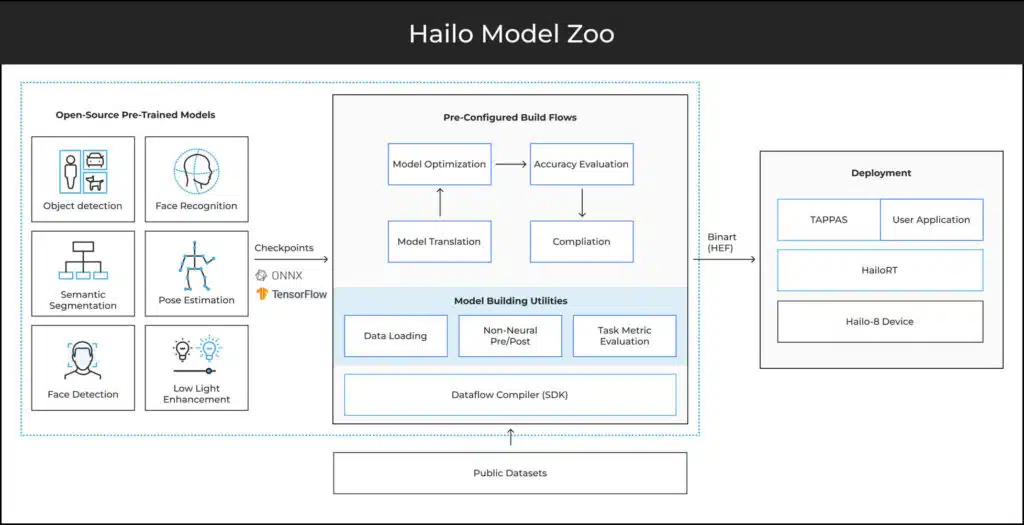

The Hailo Model Zoo is a repository of pre-trained, pre-compiled deep learning models covering a wide range of tasks, available in TensorFlow and ONNX formats. Its aim is to help developers hit the ground running: it provides a binary HEF (Hailo Executable Format) file for each pre-trained model and is supported by the Hailo toolchain and application suite.

With the goal of making it as easy as possible for developers to get up and running with the Hailo AI processors, we have built our own open Hailo Model Zoo repository, which includes:

- Pre-trained models – a large selection, demonstrating both the versatility and the high AI performance of the Hailo AI processors.

- Pre-configured build flows – validated and optimized flows that take each network from an ONNX/TensorFlow model to a deployable binary, including model evaluation and performance analysis.

To allow simple evaluation, we included popular models taken without modification from open-source repositories and trained on publicly available datasets. We also added a link to each model's source, in case users want to adapt the model, for instance by training it on their own custom dataset.

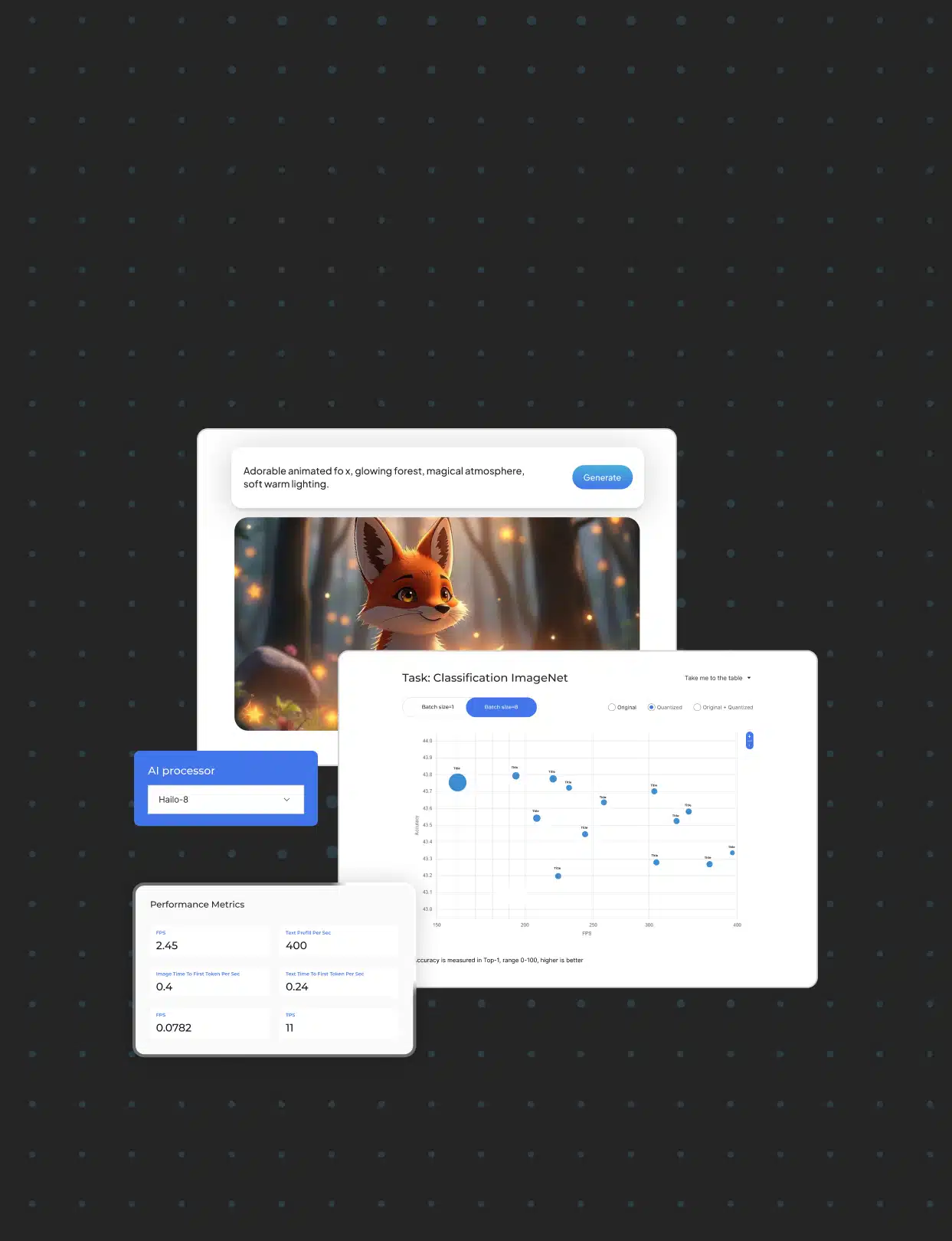

In addition, the Hailo Model Explorer helps users select the best NN models for their AI applications, so they can make better-informed decisions and get maximum efficiency on the Hailo platform. The Model Explorer offers an interactive interface with filters for Hailo device, task, model, FPS, and accuracy, allowing users to explore the many NN models in Hailo’s vast library.
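The Model Explorer is an interactive tool, but the kind of filtering it describes is easy to picture in code. The Python sketch below only illustrates that idea: `ModelEntry` and `explore` are hypothetical names, not part of any Hailo API, and the catalog entries and their FPS/accuracy numbers are invented placeholders, not Hailo benchmark results.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ModelEntry:
    """One row of a hypothetical model catalog, mirroring the Explorer's filter dimensions."""
    name: str
    device: str
    task: str
    fps: float
    accuracy: float  # task-specific metric, e.g. top-1 % or mAP

def explore(catalog, device, task, min_fps=0.0, min_accuracy=0.0):
    """Return models for `device` and `task` that meet both thresholds,
    sorted by accuracy (best first), then by FPS."""
    matches = [
        m for m in catalog
        if m.device == device and m.task == task
        and m.fps >= min_fps and m.accuracy >= min_accuracy
    ]
    return sorted(matches, key=lambda m: (m.accuracy, m.fps), reverse=True)

# Hypothetical placeholder entries: invented values, NOT Hailo benchmark results.
catalog = [
    ModelEntry("model_a", "device_x", "classification", fps=900.0, accuracy=71.0),
    ModelEntry("model_b", "device_x", "classification", fps=300.0, accuracy=78.0),
    ModelEntry("model_c", "device_x", "detection", fps=120.0, accuracy=40.0),
    ModelEntry("model_d", "device_y", "classification", fps=1500.0, accuracy=69.0),
]

best = explore(catalog, "device_x", "classification", min_fps=250.0)
print([m.name for m in best])  # ['model_b', 'model_a']
```

Sorting by accuracy and then FPS is just one reasonable default; a latency-sensitive application might rank by FPS first instead.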

How It Works

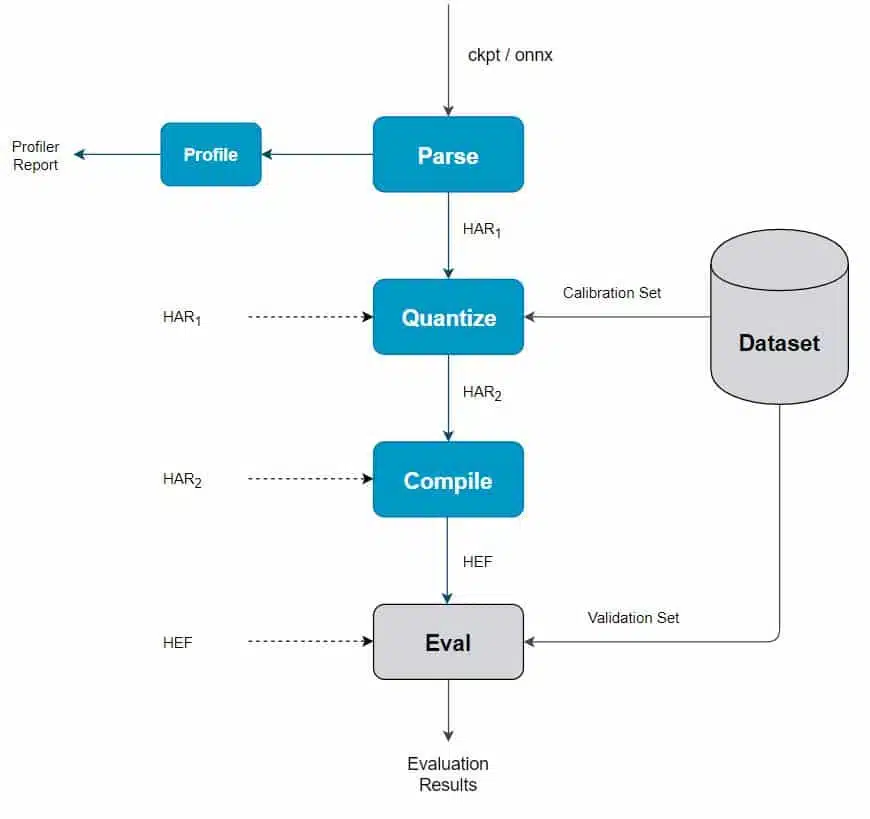

The Model Zoo uses the Hailo Dataflow Compiler to provide a full flow from a pre-trained model (TensorFlow checkpoint or ONNX) to a final Hailo Executable Format (HEF) file that can be executed on the Hailo-8. To that end, the Hailo Model Zoo provides users with the following functions:

- Parse: translate a TensorFlow/ONNX model into Hailo’s internal representation, which includes the network topology and the model’s original weights. The output of this stage is a Hailo Archive (HAR) file.

- Profile: generate an HTML report of the model’s expected performance on the Hailo-8, including FPS, latency, power consumption, and a full per-layer breakdown.

- Quantize: optimize the model for runtime by converting it from full precision to limited integer precision (4, 8, or 16 bits) while minimizing accuracy degradation. This stage includes several algorithms designed to preserve the accuracy of the quantized model. The output of this stage is a Hailo Archive file that includes the quantized weights.

- Evaluate: measure model accuracy on common datasets (e.g., ImageNet, COCO). Evaluation can be run on the full-precision model, and on the quantized model using either our numeric emulator or the Hailo-8 itself.

- Compile: compile the quantized model into a Hailo Executable Format (HEF) file that can be deployed on the Hailo-8 chip.

In the figure above, the blocks in blue use the Hailo Dataflow Compiler.
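To make the Quantize step more concrete, here is a minimal sketch of one generic technique, per-tensor symmetric uniform quantization, in Python with NumPy. It is not the Dataflow Compiler's algorithm, which combines several algorithms to limit accuracy loss; `quantize_symmetric` and `dequantize` are hypothetical helpers, and the weights are random numbers used only to show how rounding error shrinks as the bit width grows from 4 to 8 to 16.

```python
import numpy as np

def quantize_symmetric(weights: np.ndarray, bits: int):
    """Per-tensor symmetric uniform quantization to signed integers.

    Hypothetical helper for illustration; returns (integer tensor, scale).
    """
    qmax = 2 ** (bits - 1) - 1  # 7 for 4 bits, 127 for 8 bits, 32767 for 16 bits
    max_abs = float(np.max(np.abs(weights)))
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round each value to the nearest integer step and clip to the symmetric range.
    q = np.clip(np.round(weights / scale), -qmax, qmax).astype(np.int32)
    return q, scale

def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    """Map the integers back to approximate floating-point values."""
    return q.astype(np.float32) * scale

# Random stand-in for one layer's weights (not a real model).
rng = np.random.default_rng(0)
w = rng.normal(0.0, 0.1, size=(64, 64)).astype(np.float32)

for bits in (4, 8, 16):
    q, scale = quantize_symmetric(w, bits)
    err = float(np.max(np.abs(w - dequantize(q, scale))))
    print(f"{bits:>2}-bit: max abs error {err:.2e}, scale/2 = {scale / 2:.2e}")
```

Each integer stands for a multiple of `scale`, so the worst-case rounding error is about `scale / 2`; every extra bit roughly halves `scale`, at the cost of more memory and compute per value.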

The last stage of the Hailo Model Zoo flow generates the HEF file used in the final application. For example, this is where the HEF file of the YOLOv3 object detection network is produced for your ADAS application, or that of the CenterPose (pose estimation) network for a Smart Home camera solution.
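The flow described in the list above is essentially a chain of artifacts, with each stage consuming the output of the previous one. The Python sketch below models that chain to show which stages are needed to get from a given starting point to a HEF. It is a conceptual illustration only: `Artifact`, `STAGES`, and `plan` are hypothetical names and do not correspond to the Hailo Model Zoo's actual code or command-line interface.

```python
from enum import Enum

class Artifact(Enum):
    """Artifacts named in the Model Zoo flow."""
    SOURCE = "pre-trained model (TensorFlow checkpoint or ONNX)"
    HAR = "Hailo Archive, full-precision weights"
    QUANTIZED_HAR = "Hailo Archive, quantized weights"
    HEF = "Hailo Executable Format"

# Artifact-producing stages: stage name -> (input artifact, output artifact).
# Profile and Evaluate produce reports rather than new artifacts,
# so they are left out of this chain.
STAGES = {
    "parse": (Artifact.SOURCE, Artifact.HAR),
    "quantize": (Artifact.HAR, Artifact.QUANTIZED_HAR),
    "compile": (Artifact.QUANTIZED_HAR, Artifact.HEF),
}

def plan(have: Artifact, want: Artifact) -> list[str]:
    """Return the ordered stages that turn `have` into `want`."""
    steps = []
    current = have
    while current != want:
        # Find the stage that consumes the artifact we currently hold.
        nxt = next((name for name, (src, _) in STAGES.items() if src == current), None)
        if nxt is None:
            raise ValueError(f"no stage leads from {current.name} to {want.name}")
        steps.append(nxt)
        current = STAGES[nxt][1]
    return steps

print(plan(Artifact.SOURCE, Artifact.HEF))  # ['parse', 'quantize', 'compile']
print(plan(Artifact.HAR, Artifact.HEF))     # ['quantize', 'compile']
```

In this model, a user who already has a parsed HAR only needs the quantize and compile stages to reach a deployable HEF.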

The models in our repository were selected to cover a wide variety of common architectures and tasks. The repository includes popular state-of-the-art architectures such as YOLOv3, YOLOv4, CenterPose, CenterNet, and ResNet-50, many of which are already part of our AI benchmarks. To enable easy benchmarking, every pre-trained model comes with its own pre- and post-processing functions and a dataset acquisition flow, so the evaluation can be run end to end. We wrapped all of this functionality into an easy-to-use package, making it simple and fast to reproduce results and to measure performance across a wide range of networks. With the powerful AI acceleration capabilities of the Hailo-8 AI Processor, users can leverage the Model Zoo to achieve exceptional performance for these popular models on edge devices.
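Object detectors such as YOLOv3 and YOLOv4 typically finish their post-processing with non-maximum suppression (NMS), which removes duplicate boxes around the same object. The sketch below is a generic greedy NMS in Python with NumPy, assuming boxes in `[x1, y1, x2, y2]` pixel coordinates. It is not the Model Zoo's own post-processing code, and the thresholds `score_thresh` and `iou_thresh` are illustrative defaults.

```python
import numpy as np

def iou(box: np.ndarray, boxes: np.ndarray) -> np.ndarray:
    """Intersection-over-union of one box against many; boxes are [x1, y1, x2, y2]."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    union = area + areas - inter
    return inter / np.maximum(union, 1e-9)  # guard against zero-area boxes

def nms(boxes, scores, score_thresh=0.3, iou_thresh=0.5):
    """Greedy NMS; returns indices of the kept boxes, highest score first."""
    idx = np.where(scores >= score_thresh)[0]  # drop low-confidence boxes
    idx = idx[np.argsort(-scores[idx])]        # sort the rest by score, descending
    keep = []
    while idx.size > 0:
        best = idx[0]
        keep.append(int(best))
        rest = idx[1:]
        # Keep only boxes that do not overlap the chosen box too much.
        idx = rest[iou(boxes[best], boxes[rest]) <= iou_thresh]
    return keep

boxes = np.array([[10, 10, 50, 50],
                  [12, 12, 52, 52],
                  [100, 100, 140, 140],
                  [11, 9, 49, 51]], dtype=np.float32)
scores = np.array([0.9, 0.8, 0.7, 0.2], dtype=np.float32)
print(nms(boxes, scores))  # [0, 2]
```

Box 1 overlaps box 0 heavily and is suppressed, box 3 falls below the score threshold, and box 2 survives because it does not overlap box 0 at all.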

What TAPPAS Has to Do with It

Users can integrate accelerated models from the Hailo Model Zoo into their own applications. Using the Hailo Model Zoo together with our TAPPAS High-Performance Application Toolkit allows an edge AI developer to build and deploy a meaningful application in a short time. In fact, all the applications in TAPPAS are built on top of Model Zoo networks.

To take a common use case as an example, pose estimation can provide meaningful insights into shoppers’ behaviors and desires for Smart Retail Intelligent Video Analytics (IVA). A developer building this kind of application may find it useful to start from a pre-trained pose estimation model such as CenterPose_RegNetX_1.6GF in our Model Zoo. With Hailo TAPPAS, the model can then be easily integrated into the application.

Another interesting use case is intelligent traffic monitoring for Smart City applications. You can get such an application up and running in no time using the existing precompiled SSD_MobileNet_v1 VisDrone model. In fact, a small team of Hailo engineers did just that in 24 hours at Hailo’s first hackathon!

The Hailo Model Zoo provides a comprehensive environment for edge AI developers to build their next deep learning application, with out-of-the-box pre-trained models and application solutions for a variety of useful neural network tasks. Looking ahead, we will continue to add new state-of-the-art models, cover new tasks and meta-architectures, and improve our pre-configured build flows and optimization processes to help developers build the best possible edge AI applications, leveraging the industry-leading capabilities of the Hailo-8 AI Processor.

To learn more, check out our Hailo Model Zoo or visit the Hailo Model Zoo GitHub repository.
