DRAM Shortage in Edge AI: Doing More With Less

The shortage in memory components and the soaring prices of DRAM of all sizes is forcing a rethink of AI workloads. By eliminating or minimizing memory requirements, and with guaranteed availability, edge AI offers a more resilient path forward.

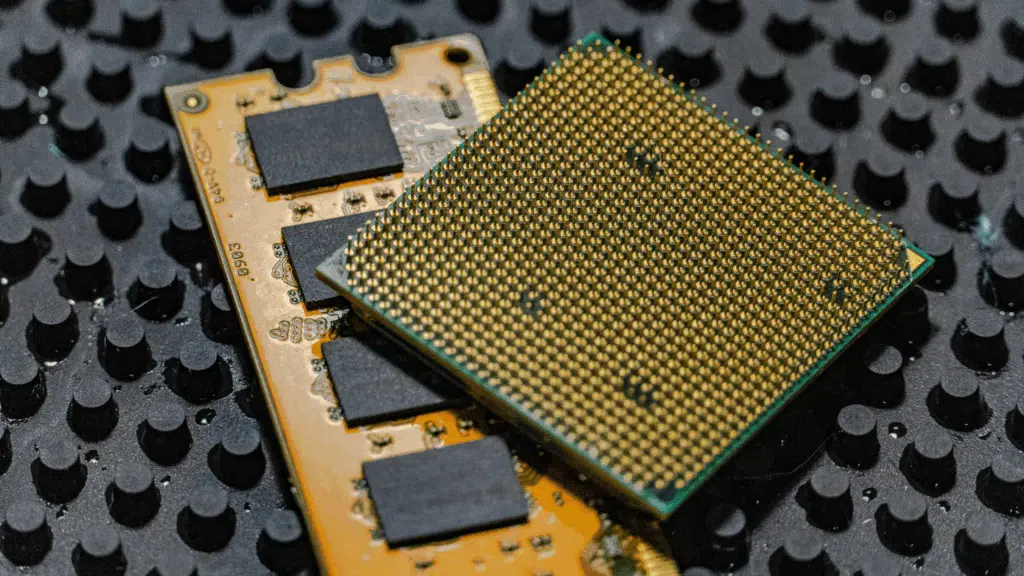

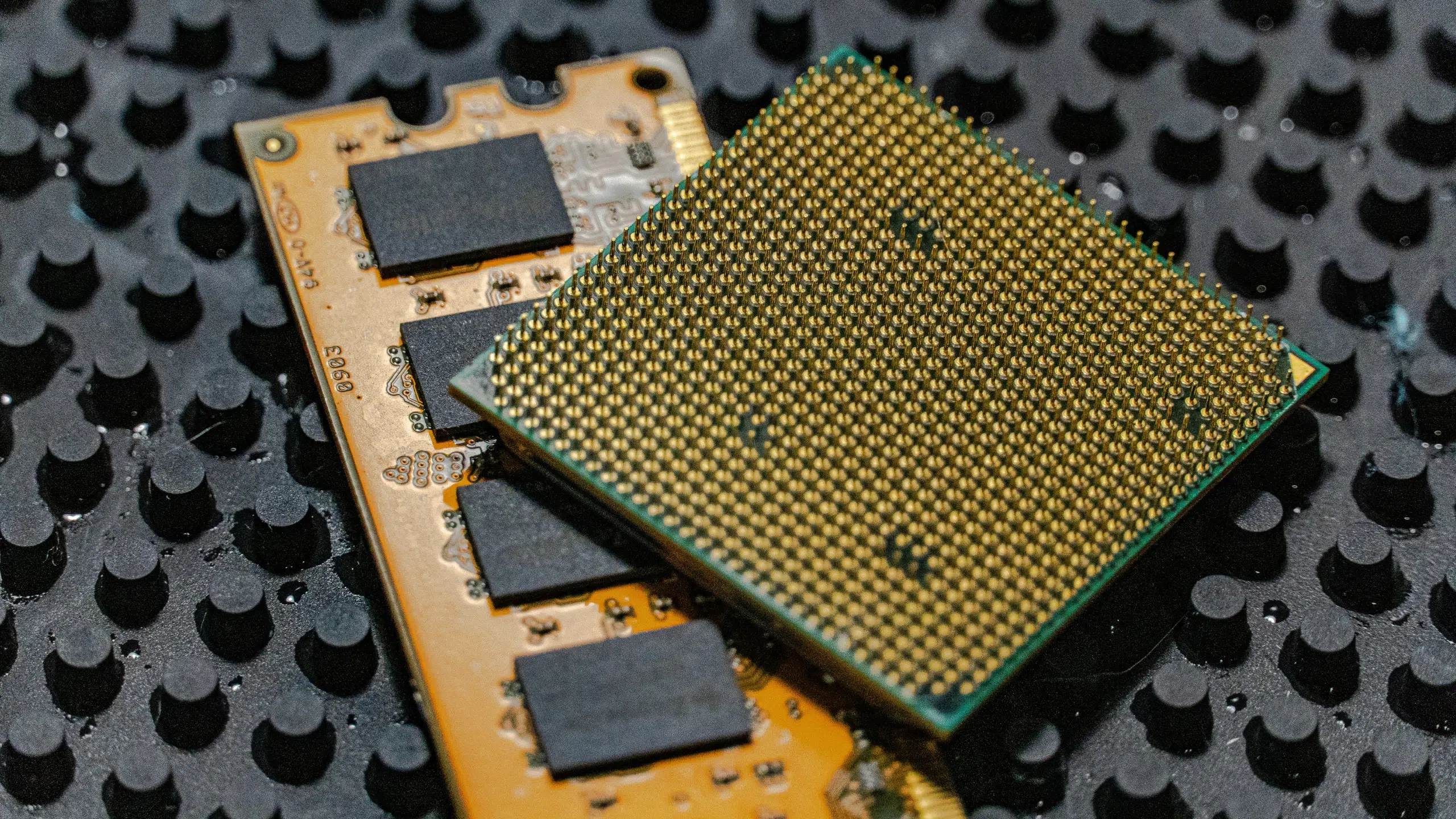

Memory is having a difficult year. DRAM (Dynamic Random-Access Memory) is a high-speed short-term workspace for AI, used extensively to store massive datasets and model parameters, so processors (CPU/GPU/NPU) can rapidly access and manipulate them for AI training and inference. In modern datacenters, DRAM is becoming a critical, and increasingly stressed, resource. As manufacturers prioritize DDR5 and high-bandwidth memory (HBM) to serve datacenters and large-scale AI workloads, availability has tightened and costs have risen sharply: up to 3–4x compared to Q3 2025 levels, and market signals suggest the peak has not yet been reached. While end users may not yet feel the full impact, the cost of AI acceleration (GPUs / NPUs) is expected to rise dramatically in the near future, by as much as $10 per gigabyte of required memory. Even hyperscalers – typically at the front of the line – are reportedly receiving only about 70% of their allocated volumes, and analysts expect tight conditions to persist well into 2026, and possibly even 2027.

This strain is not evenly distributed. As AI datacenters drive demand for large memory footprints, the steepest price increases and longest lead times have been concentrated in higher-capacity DRAM modules. Lower-capacity modules (1–2 GB), by contrast, have remained more accessible and comparatively stable, showing smaller percentage price increases and better availability, supported by a broader supplier base and the entry of new manufacturers into this segment.

This trend is now influencing how teams think about edge system design. AI workloads built around large memory footprints now run into procurement challenges; systems engineered to operate within modest memory baselines avoid both the price spikes and the uncertainty. The outcome is important: in a shortage, architecture built for efficiency gives teams more strategic freedom compared to architectures built for abundance.

The most effective solution during the shortage: DRAM-less AI accelerator

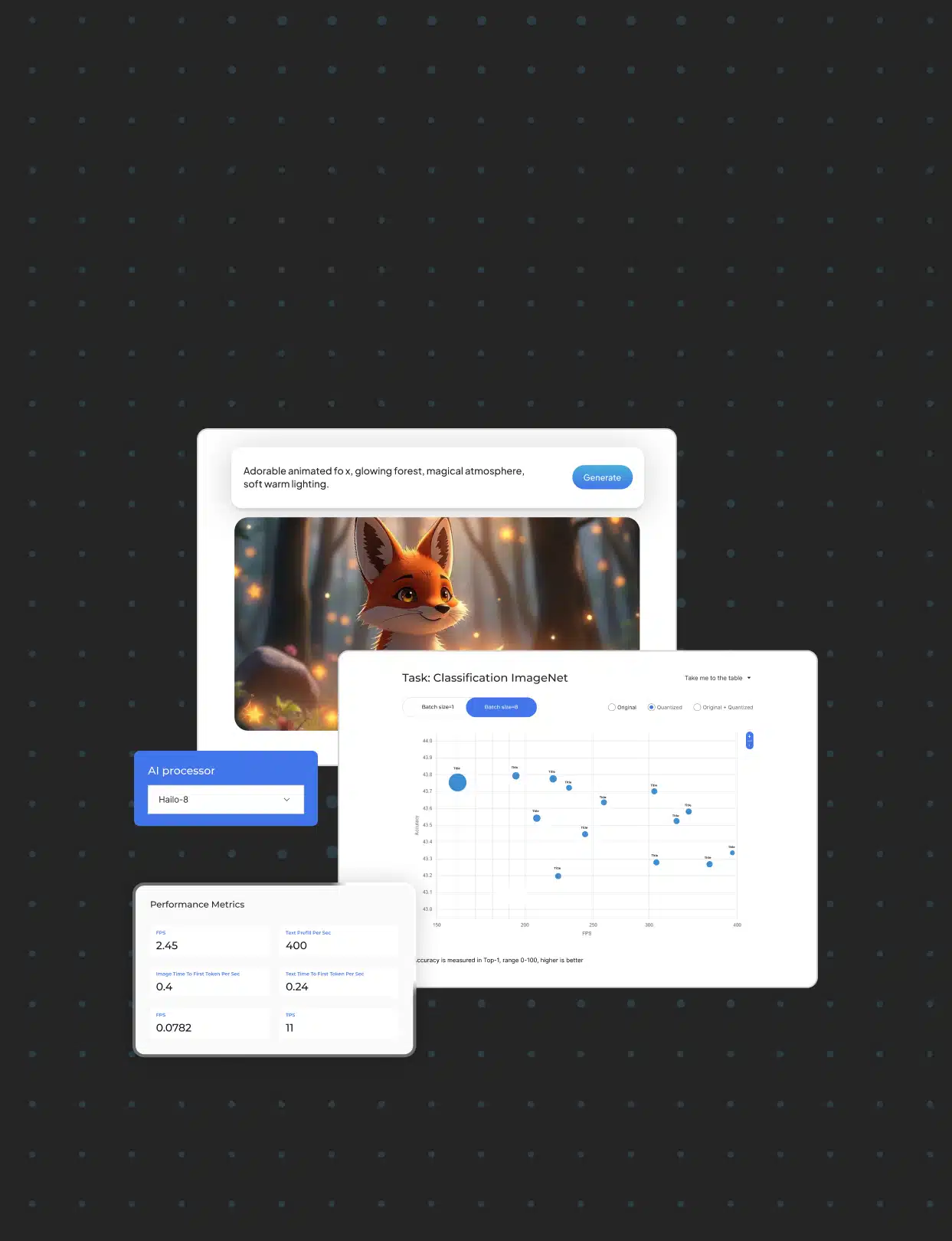

In a constrained memory market, the most robust solution is also the simplest: remove the dependency on external DRAM entirely. For classical and vision AI workloads, this is made possible by Hailo-8 and Hailo-8L AI accelerators.

These are currently the only AI accelerators on the market that deliver high-performance edge AI without requiring an external DRAM. By keeping the full inference pipeline on-chip, Hailo-8/8L eliminate the most expensive and supply-constrained component in the system. In practical terms, avoiding DRAM can reduce bill of materials by up to $100 per device, while also improving power efficiency and system reliability.

| DRAM-Based vs. DRAM-Less AI Accelerators Comparison |

For teams building classical and vision AI applications this architecture is ideal. The robust software suite and vibrant developer community make Hailo-8/8L technically advantageous, and the most robust and cost-efficient AI solution available today.

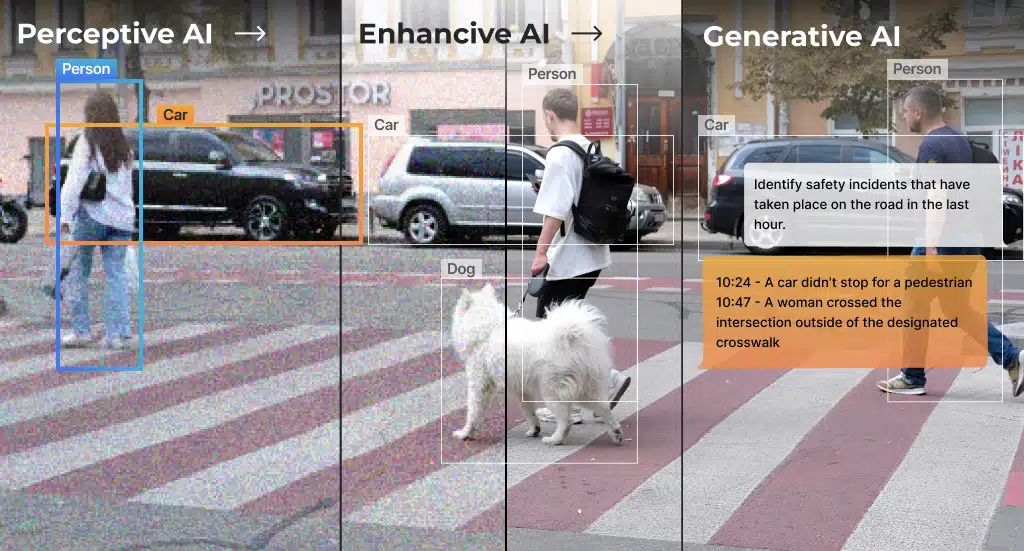

A shift in how (and where) AI runs – A case for GenAI on the Edge

However, not every AI application can avoid DRAM. Generative AI workloads inherently require more memory, and systems that run them will continue to rely on external DRAM. But even in this case, memory constraints strongly favor moving inference closer to the edge. Running generative AI in a memory shortage era, requires teams to work with smaller, domain-specific models rather than large, general-purpose ones. Smaller models translate directly into smaller DRAM requirements, reducing cost, easing procurement, and improving power efficiency.

Until recently, generative AI required the memory and compute scale of the cloud. A new class of smalller LLMs (also called small language models, SLMs) and compact vision language models (VLMs) now deliver strong instruction following, reliable tool use, and competitive benchmark performance at a fraction of the parameters.

New models like QWEN / Llama / DeepSeek now demonstrate how far efficient architectures have come. These models show that some generative AI tasks and applications no longer need massive, expensive system memory but rather well-defined domains, optimized inference paths, and efficient pre-/post-processing.

While the smaller GenAI models can run on the cloud, there are multiple reasons to transition them to the edge. Privacy and latency have long shaped the case for running intelligence on the device. In 2025, another factor cemented it: the expectation that generative AI simply be there. Users now rely on transcription, summarization, audio cleanup, translation, and basic reasoning dozens of times a day – often with no patience for startup delays or network dependency. Recent cloud outages from AWS, Azure and Cloudflare underscored how fragile cloud-only assumptions can be. When the networks faced disruptions, everyday features across consumer apps and enterprise workflows failed. Even brief interruptions highlighted how a single infrastructure dependency can take down tools that users now rely on dozens of times a day.

As AI moves deeper into everyday workflows and users expect agentic AI capabilities to be available instantly, a hybrid approach proves far more resilient. Keep frequently used intelligence local, either on the device or in a nearby gateway, while using the cloud for heavier or less frequent tasks. And crucially, when models are small enough to operate within 1-2 GB of memory, that hybrid approach becomes far easier to implement using memory configurations that are still readily sourced. This is where edge-focused accelerators like Hailo-10H come into play, enabling efficient generative AI inference while keeping memory footprints as lean as possible.

The Path Forward: Building DRAM Resilient AI Systems

If the DRAM shortage proves anything, it’s that the most resilient AI systems are the ones designed around constraints, not excess. Teams are re-evaluating assumptions about model size, memory baselines, and what “good enough” looks like for common tasks. They’re recognizing that domain-specific intelligence often performs better than brute-force scale – especially in environments that demand consistency, privacy, and low power draw.

The approach of AI designed for efficiency rather than abundance fits squarely within the ethos of edge computing. Many high-value physical AI tasks such as human-machine conversation, event triggering, anomaly detection and others, do not require massive AI models and respectively large memory capacity. In narrow domains, compact models can deliver faster, more private and consistent results because they operate with fewer unknowns:

- Cost – eliminating DRAM or using smaller DRAM capacity prevents the inflated pricing of high-capacity DRAM.

- Supply-chain – no DRAM or low-capacity DRAM lowers supply chain risk

- Power consumption – smaller models with hardware-assisted offload (NPU or AI accelerator) run cooler and more efficiently

- System reliability – local inference increases system reliability by keeping essential features available even during network outages

As supply tightness continues, organizations that invest in leaner model design and hybrid deployment strategies will be better positioned to deliver stable, responsive AI without absorbing high memory costs.

Frequently Asked Questions About DRAM and Edge AI

What is DRAM and why is there a shortage?

DRAM (Dynamic Random Access Memory) is the primary working memory in computers and AI systems. The DRAM shortage in 2025 stems from manufacturers prioritizing DDR5 and HBM production for datacenters over other memory types, combined with overwhelming demand from AI infrastructure buildouts. This has caused supply constraints and price increases of 3-4x compared to Q3 2025 levels.

How does DRAM work in AI accelerators?

In traditional AI accelerators, DRAM serves as external memory to store model weights, intermediate activations, and data during inference. The processor repeatedly accesses this external memory, which consumes power and adds latency. However, Hailo’s innovative architecture keeps the entire inference pipeline on-chip, eliminating the need for external DRAM entirely while maintaining high performance.

What is DRAM used for in AI systems?

In AI systems, DRAM is typically used to:

- Store neural network parameters and weights

- Hold intermediate computation results during inference

- Buffer input/output data for processing

- Manage large model architectures during runtime

Higher-capacity DRAM modules (4GB+) are particularly crucial for large language models and complex vision AI applications, which is why the DRAM shortage has hit AI development so hard.

Can AI run without DRAM?

Yes. Hailo-8 and Hailo-8L are the only AI accelerators on the market that deliver high-performance edge AI without requiring external DRAM. By keeping the full inference pipeline on-chip with optimized dataflow architecture, these accelerators eliminate DRAM dependency while maintaining exceptional performance for vision and classical AI workloads.

What are the alternatives to high-capacity DRAM in AI systems?

During the DRAM shortage, several strategies can help:

- DRAM-less architectures: if workload is only vision-related, Hailo-8/8L eliminate external memory needs entirely

- Smaller models: If generative AI is required, use SLMs and compact VLMs that fit in 1-2GB (easier to source)

- Hybrid deployment: Local edge processing with cloud fallback