Multi-Camera Multi-Person Re-Identification with Hailo-8

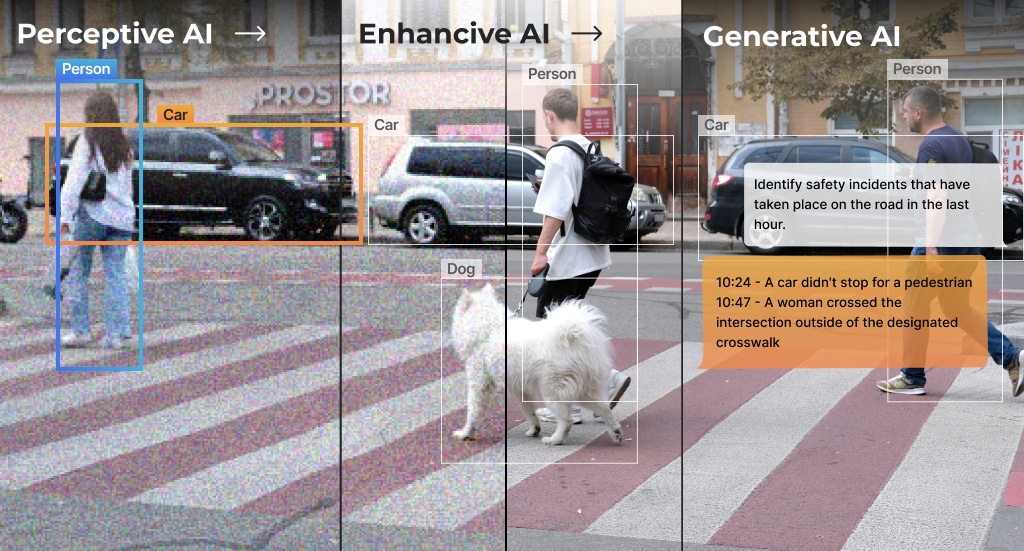

Multi-person re-identification across different streams, enabled by multi-camera tracking, is essential for security and retail applications. It involves identifying a specific person multiple times, either in one location over time or along a trail between multiple locations, and it requires high-performance computing. The Hailo-8 AI processor delivers the efficiency needed for accurate real-time multi-person re-identification on edge devices, enhancing video analytics and keeping costs down without compromising people’s privacy. In this blog post, we present an end-to-end reference pipeline that performs this task using multi-camera tracking technology.

Multi-person re-identification tracking on multiple video streams is a common feature in video surveillance systems. The goal of this feature, which is usually implemented with deep learning, is to detect and identify people across different streams and throughout the video. It is used for safety, security, and data analysis, revealing valuable information about the behavior of customers, visitors, and employees. Although the technology is widely used, occlusions and the differing conditions of each camera (such as perspective and illumination) pose a major challenge for accurate tracking.

Advantages of running a multi-camera tracking with multi-person re-identification application on edge devices include:

- Preserve privacy and improve data protection by eliminating the need to send raw video.

- Reduce detection latency, which is crucial for real-time alerts.

Hailo-8 is the perfect AI accelerator to deliver accurate real-time person re-identification on edge devices. Its compute power also enables processing many people concurrently with high accuracy, which is crucial for high-quality re-identification. Hailo-8 also lowers system cost, since only a single AI accelerator needs to be installed and maintained to process multiple cameras in real time.

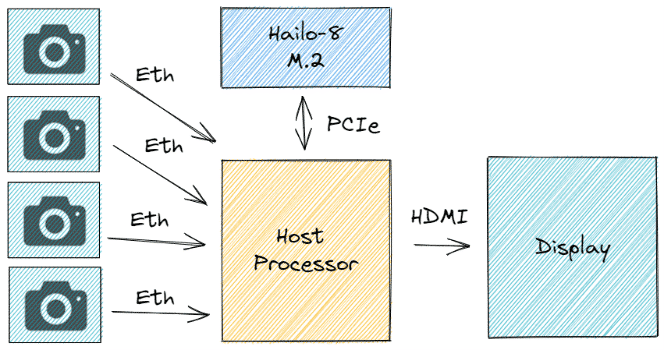

The Hailo TAPPAS multi-camera re-identification and tracking pipeline is implemented using GStreamer on an embedded host and Hailo-8 running in real-time (without batching), with four RTSP IP cameras in FHD input resolution. The host acquires the encoded video over Ethernet and decodes it. The decoded frames are sent for processing on Hailo-8 over PCIe, and the final output is displayed on the screen.

Application Pipeline

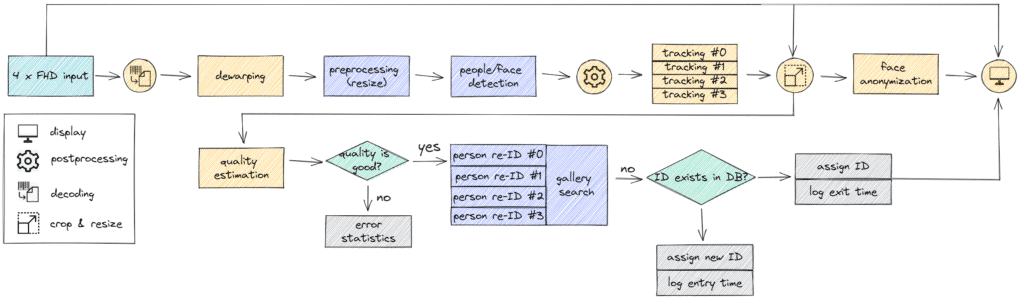

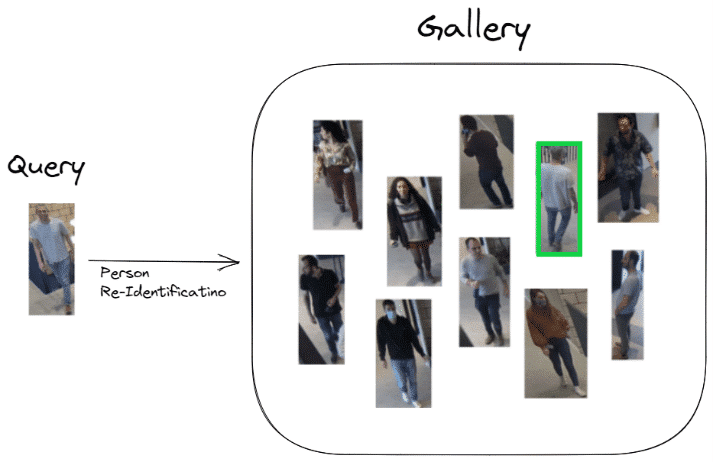

The entire application pipeline is depicted in the following diagram. First, the encoded input is decoded and de-warped to obtain aligned frames for processing. De-warping is a common computer vision component used to eliminate distortion caused by the camera; fisheye distortion, for example, is common in surveillance cameras. Next, the frames are sent to the Hailo-8 AI processor, which detects all the persons and faces in each frame. We use the Hailo GStreamer Tracker for initial tracking of the objects within each stream. Finally, each person is cropped from the original frame and fed into a Re-ID network. This network outputs an embedding vector that represents each person and can be compared between different cameras. The embeddings are stored in a database called the “gallery”, and by searching within it we assign the final ID for each person. The final output also includes a face anonymization block, which blurs each face to preserve the privacy of the people in the frame.
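To make the gallery step more concrete, here is a minimal sketch of how an embedding could be matched against stored embeddings to assign a cross-camera ID. It is not the TAPPAS implementation: the `Gallery` class, the cosine-similarity metric, and the `0.7` threshold are all assumptions chosen for illustration, and the three-element vectors stand in for the much longer Re-ID embeddings.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


class Gallery:
    """Toy in-memory gallery (hypothetical): one stored embedding per global ID."""

    def __init__(self, threshold=0.7):
        # The threshold is an illustrative value, not one taken from TAPPAS.
        self.threshold = threshold
        self.entries = {}  # global_id -> embedding
        self._next_id = 1

    def assign_id(self, embedding):
        """Return the best-matching ID above the threshold, else register a new ID."""
        best_id, best_score = None, -1.0
        for global_id, stored in self.entries.items():
            score = cosine_similarity(embedding, stored)
            if score > best_score:
                best_id, best_score = global_id, score
        if best_id is not None and best_score >= self.threshold:
            return best_id
        new_id = self._next_id
        self._next_id += 1
        self.entries[new_id] = list(embedding)
        return new_id


gallery = Gallery(threshold=0.7)
id_cam0 = gallery.assign_id([0.9, 0.1, 0.0])     # first sighting, camera 0
id_cam1 = gallery.assign_id([0.85, 0.15, 0.05])  # similar embedding, camera 1
id_other = gallery.assign_id([0.0, 0.2, 0.95])   # clearly different embedding
print(id_cam0, id_cam1, id_other)
```

In this sketch the second embedding is matched to the first person's ID, while the third falls below the threshold and is registered as a new person. A production gallery would typically also update stored embeddings over time and handle per-stream track IDs, which are left out here.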

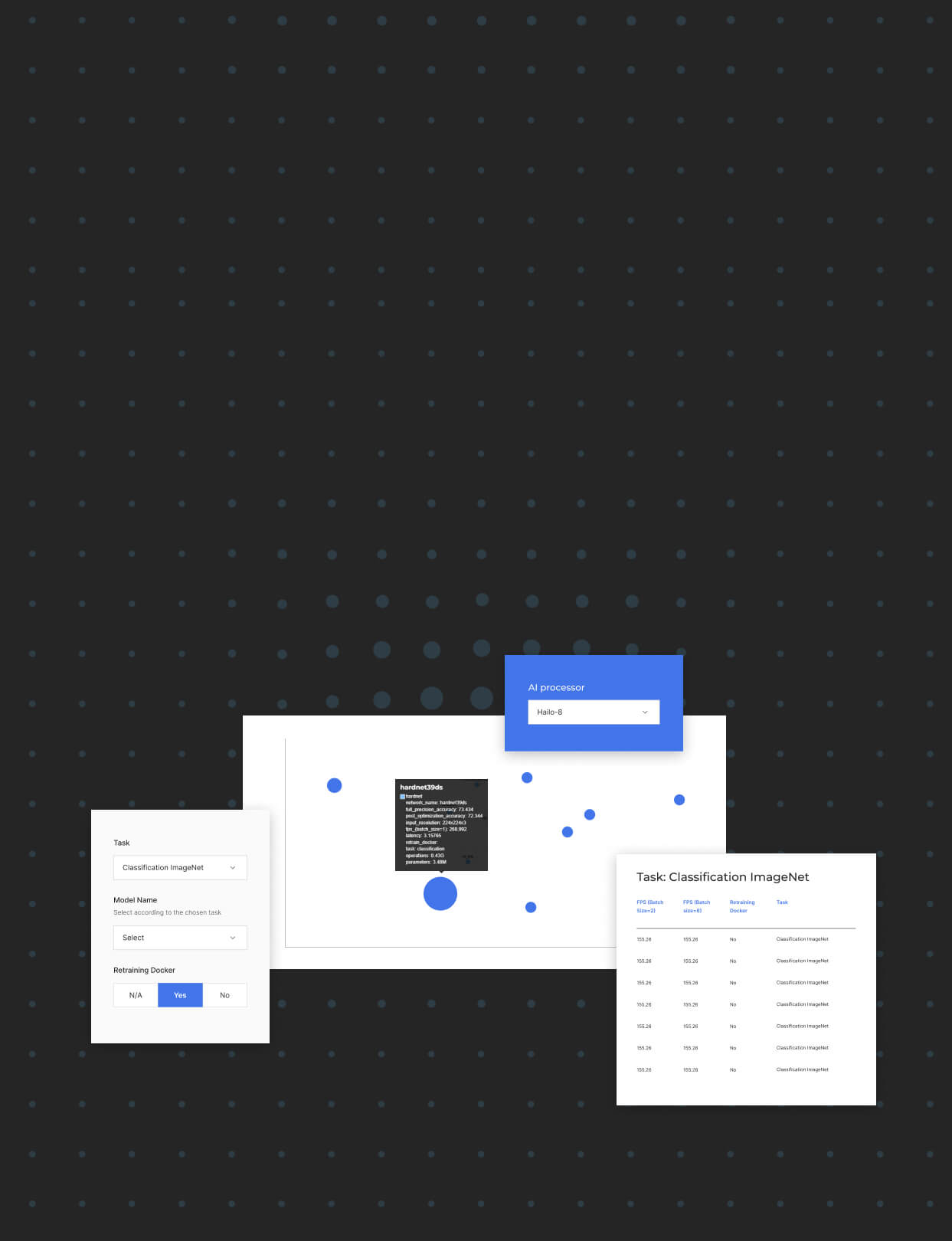

All the neural network (NN) models were compiled using the Hailo Dataflow Compiler and the pretrained weights and precompiled models were released in the Hailo Model Zoo. The Hailo Model Zoo also provides a retraining docker environment for custom datasets to ease adaptation to other scenarios. We note that all models were trained in relatively generic use cases and can be optimized (in terms of size/accuracy/fps) for specific scenarios.

Multi-person/Face Detection

The person/face detection network is based on YOLOv5s with two classes: person and face. YOLOv5 is an accurate single-stage object detector released in 2020 and trained with PyTorch. To train the detection network, we curated several different datasets and aligned them to the same annotation format. Note that public datasets, such as COCO and Open Images, may contain only person or only face annotations, so to use them we generated full annotations for both classes. For face annotations, we used a state-of-the-art face detection model trained on publicly available datasets. Using a strong NN such as YOLOv5 to detect people and faces means we can detect them with high accuracy and at great distance, enabling the application to detect and track even small objects.

| Parameters | Compute (MAC) | Input Resolution | Training Data | Validation Data | Accuracy |
|---|---|---|---|---|---|
| 7.25M | 8.38G | 640x640x3 | 149k images | 6k images | 47.5 mAP* |

*YOLOv5s network trained on COCO2017 achieves only 23AP on the same validation dataset.

Person Re-Id

The person Re-ID network is based on RepVGG-A0 and outputs a single embedding vector of length 2048 per query. The network was trained in PyTorch using the following repo. To improve the Rank-1 accuracy on the validation dataset (Market-1501), we merged different Re-ID datasets into a single training procedure. Using bigger and more diverse training data (from multiple sources) helped us generate a more robust network that generalizes better to real-world scenarios. In the Hailo Model Zoo, we provide retraining instructions and a full docker environment to train the network from our pretrained weights.

| Parameters | Compute (MAC) | Input Resolution | Training Data | Validation Data | Accuracy |
|---|---|---|---|---|---|
| 9.65M | 0.89G | 256x128x3 | 660k images | 3368/15913 | 90% Rank-1 |
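For readers unfamiliar with the Rank-1 metric, the following sketch shows how it can be computed: a query counts as a hit when its nearest gallery embedding carries the same person ID. The function name, the Euclidean distance, and the tiny two-dimensional vectors are assumptions for illustration. The standard Market-1501 protocol also excludes gallery images taken by the same camera as the query, which this sketch omits for brevity.

```python
import math


def rank1_accuracy(queries, gallery):
    """Fraction of queries whose nearest gallery embedding shares their person ID.

    queries and gallery are lists of (person_id, embedding) pairs.
    Euclidean distance is an illustrative choice of metric.
    """
    hits = 0
    for query_id, query_vec in queries:
        nearest_id, _ = min(gallery, key=lambda item: math.dist(query_vec, item[1]))
        if nearest_id == query_id:
            hits += 1
    return hits / len(queries)


# Toy data: three identities in the gallery, three queries.
gallery_set = [(1, [1.0, 0.0]), (2, [0.0, 1.0]), (3, [0.7, 0.7])]
query_set = [
    (1, [0.9, 0.1]),  # closest to identity 1: hit
    (2, [0.1, 0.8]),  # closest to identity 2: hit
    (3, [0.2, 0.9]),  # closest to identity 2: miss
]
score = rank1_accuracy(query_set, gallery_set)
print(f"Rank-1: {score:.3f}")
```

Two of the three toy queries retrieve the correct identity first, so the sketch reports a Rank-1 of roughly 0.667.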

Deploying the Pipeline Using the Hailo TAPPAS

We have released the application as part of the Hailo TAPPAS. The sample application builds the pipeline using GStreamer in C++ and allows you to run from video files or RTSP cameras. Other arguments that allow you to control the application include setting parameters for the detector (e.g., the detection threshold), the tracker (e.g., keep/lost frame rate), and the quality estimation (minimum quality threshold).

The Hailo Model Zoo also allows you to retrain the NNs with your own data and port them to the TAPPAS application for fast domain adaptation and customization. The goal of the multi-camera multi-person re-identification application is to provide rapid prototyping and a solid baseline for building a surveillance pipeline on Hailo-8 and the embedded host processor.

As part of HailoRT (Hailo’s runtime library), we released a GStreamer plugin for inference on the Hailo-8 chip (libgsthailo). This plugin takes care of the entire configuration and inference process on the chip, which makes integrating Hailo-8 into your GStreamer pipeline easy and straightforward. It also enables inference of a multi-network pipeline on a single Hailo-8 chip to facilitate complex pipelines. Another HailoRT component that we have introduced is the network scheduler, which simplifies running multiple networks on a single Hailo device by automating the network switch. Instead of manually deciding which network runs when, the network scheduler automatically controls the running time of each network. Using the scheduler makes the development of pipelines with Hailo-8 much cleaner, simpler, and more efficient.

Apart from HailoRT, in this application we also introduced the following GStreamer plugins:

- De-warping: GStreamer plugin implemented in TAPPAS that allows you to fix camera distortions. The de-warping is implemented using OpenCV and currently corrects fisheye distortions.

- Box anonymization: GStreamer plugin implemented in TAPPAS that allows you to blur regions of an image given predicted boxes, for example, anonymizing faces in an image after predicting all the faces.

- Gallery search: GStreamer plugin that adds a database component to the pipeline. The gallery component allows you to add a new object and search within the database for matches. In this application, we push the Re-ID vectors to the gallery and compare new vectors against them to associate predictions between different cameras and timestamps.
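As an illustration of the box anonymization idea, the sketch below hides the content of each predicted box in a small grayscale image. It is not the TAPPAS plugin: the function name and the box format are assumptions, and for simplicity each box is filled with its mean value (a crude pixelation) rather than properly blurred.

```python
def anonymize_boxes(image, boxes):
    """Return a copy of a grayscale image with each box filled by its mean value.

    image is a list of rows of integer pixel values.
    boxes are (x_min, y_min, x_max, y_max) in pixels, max-exclusive
    (a hypothetical format chosen for this sketch).
    """
    height, width = len(image), len(image[0])
    output = [row[:] for row in image]
    for x_min, y_min, x_max, y_max in boxes:
        # Clip the box to the image bounds and skip empty boxes.
        x0, y0 = max(0, x_min), max(0, y_min)
        x1, y1 = min(width, x_max), min(height, y_max)
        if x0 >= x1 or y0 >= y1:
            continue
        pixels = [image[y][x] for y in range(y0, y1) for x in range(x0, x1)]
        mean = sum(pixels) // len(pixels)
        for y in range(y0, y1):
            for x in range(x0, x1):
                output[y][x] = mean
    return output


frame = [
    [10, 10, 10, 10],
    [10, 0, 200, 10],
    [10, 100, 100, 10],
    [10, 10, 10, 10],
]
face_boxes = [(1, 1, 3, 3)]  # one predicted face box
anonymized = anonymize_boxes(frame, face_boxes)
for row in anonymized:
    print(row)
```

The pixels inside the box lose their detail while the rest of the frame is untouched, and the input frame itself is not modified. A real pipeline would operate on color frames and apply an actual blur kernel.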

As can be seen, the RSC101 effectively supports all required surveillance features for small businesses with 6 cameras and a $500 budget; in fact, its performance exceeds the requirements. Because an integrated high-compute AI processor handles all the video analytics, state-of-the-art deep learning algorithms can be used, which results in high performance and advanced features. The AI compute power of 26 TOPS leaves room for future enhancements by supporting migration to state-of-the-art DL algorithms for additional event detection types. The RSC101 can also serve as a cost-effective way to add support for an additional 4-8 cameras to an existing surveillance system whose compute power for video analytics is already fully utilized.

Performance

The following table summarizes the performance of the multi-camera multi-person tracking application on Hailo-8 and an x86 host processor with four RTSP cameras in FHD input resolution (1920×1080), as well as the breakdown of the NN standalone performance.

| | FPS | Latency | Accuracy |
|---|---|---|---|
| Full Application | 30 (per stream) | – | 90% Rank-1 |
| Standalone person/face detection | 379 | 5.93 ms | 47.5 mAP |
| Standalone Re-ID | 1015 | 1.77 ms | 90% Rank-1 |

* Measured with Compulab (x86, i5)
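As a rough back-of-the-envelope reading of these numbers, the sketch below estimates how much Re-ID throughput remains once detection runs on every frame of all four streams. It assumes the two networks share the device time linearly, that the standalone FPS figures carry over unchanged, and that network switching and host-side overheads are negligible. None of these assumptions comes from the measurements above, so treat the result as an order-of-magnitude estimate only.

```python
STREAMS = 4
FPS_PER_STREAM = 30
DETECTION_FPS = 379  # standalone person/face detection, from the table
REID_FPS = 1015      # standalone Re-ID, from the table

# Detection must process every frame from every stream.
frames_per_second = STREAMS * FPS_PER_STREAM

# Assumed fraction of device time spent on detection (linear time-sharing).
detection_share = frames_per_second / DETECTION_FPS

# Assumed Re-ID crops per second that fit in the remaining device time.
reid_crops_per_second = (1.0 - detection_share) * REID_FPS

# Average number of person crops per frame that could be embedded.
crops_per_frame = reid_crops_per_second / frames_per_second

print(f"Frames per second to detect: {frames_per_second}")
print(f"Device time used by detection: {detection_share:.1%}")
print(f"Re-ID crops per frame (estimate): {crops_per_frame:.1f}")
```

Under these assumptions, detection uses roughly a third of the device time, leaving room for several Re-ID crops per frame on average. Because the tracker already associates people within a stream, a pipeline may not need to embed every person on every frame.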

The Hailo multi-camera multi-person re-identification application presents an entire reference pipeline deployed in GStreamer with Hailo TAPPAS and retraining capabilities for each NN to enable customization with the Hailo Model Zoo. This application gives you a baseline to build your VMS product with Hailo-8. For more information go to our TAPPAS documentation.

This article is a collaboration between Tamir Tapuhi, Amit Klinger, Omer Sholev, Rotem Bar and Yuval Belzer.
