Don’t Make Light of Power Efficiency When It Comes to Edge AI

In the last decade we have witnessed a surging demand for complex computing, more specifically for deep learning. No longer satisfied by centralized processing in datacenters, it is propelling a fast evolution of AI inference at the edge – at the nearest point of aggregation, if not on the device itself. Space, power supply, heat dissipation, cost and/or deployment constraints govern the edge environments, limiting what is possible in terms of applications.

Domain-specific AI processors are redefining the potential of edge devices [1]. These are real game changers, especially when compared to traditional CPU and GPU architectures. What makes them revolutionary? Significantly better processing density per energy unit (power or AI energy efficiency).

Why Power Efficiency Matters

Unlike the virtually unlimited resources of the datacenter, edge deployments create unique constraints for computing hardware, principally power considerations. First, the device’s power source is often limited. Power supply for commercial devices is highly standardized. Common cameras can be limited to as little as 2.5-3W [2, 3] for the entire system – not just processing, but also interfaces, power management and, in some cameras, connectivity and lighting. How much processing fits into this kind of budget (i.e. how power efficient the processor is) is crucial to the camera’s functionality. Second, devices also have a thermal limit. The operating temperature limit and TDP (thermal design power) determine how much heat the components can generate and the system can dissipate before it fails. The more efficient the processor is, the more it can do without reaching the TDP. Finally, there is often an energy limit at the edge. Some edge devices are battery operated and thus limited in the energy available per charging cycle. The more power a device uses, the shorter the time between recharges, and the more its utility is hindered.
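
The energy limit can be illustrated with simple arithmetic. The sketch below is only an illustration: the function name `runtime_hours` and the 10 Wh battery are hypothetical and do not come from any specific device.

```python
def runtime_hours(battery_wh: float, avg_power_w: float) -> float:
    """Hours of operation per charge: stored energy divided by average power draw."""
    if avg_power_w <= 0:
        raise ValueError("average power must be positive")
    return battery_wh / avg_power_w


# Hypothetical device with a 10 Wh battery at three average power levels.
for power_w in (1.0, 2.5, 5.0):
    print(f"{power_w:.1f} W -> {runtime_hours(10.0, power_w):.1f} h per charge")
```

Under these assumed numbers, halving the average power draw doubles the time between recharges.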

Recent deep learning applications are typically characterized by intense compute per processed input. As a result, running such applications at high input rates yields high compute demand, often measured in TOPS (tera operations per second) of AI processing capacity. For most devices, one or more of the following will be true:

- Chosen NN (neural network) is complex and has a lot of computational operations

- System is required to process in real time

- Device needs to perform more than one deep learning task

- Large input signal is required (e.g. high-resolution video stream)

- There is more than one input stream (for instance, video feeds from several cameras or sensors)
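
The factors listed above multiply. A rough way to estimate the resulting compute demand is sketched below; the function `required_tops` and every number in the example are hypothetical and chosen only to show the arithmetic.

```python
def required_tops(ops_per_inference_by_task, fps: float, streams: int = 1) -> float:
    """Estimate sustained compute demand in TOPS.

    ops_per_inference_by_task: operations per input for each network the
    device runs on every input (one entry per deep learning task).
    fps: inputs per second on each stream.
    streams: number of parallel input streams.
    """
    ops_per_input = sum(ops_per_inference_by_task)
    ops_per_second = ops_per_input * fps * streams
    return ops_per_second / 1e12


# Hypothetical case: one network at 8e9 operations per frame,
# 30 frames per second, four camera streams.
demand = required_tops([8e9], fps=30, streams=4)
print(f"{demand:.2f} TOPS")  # 0.96 TOPS
```

Adding a second task or doubling the number of streams raises the demand proportionally, which is why seemingly modest requirements add up quickly.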

Measuring Power Efficiency – Avoid the Obvious Blunders

Power efficiency is a measure of the work the system carries out with a given amount of energy, and can therefore be expressed as the number of operations executed divided by the energy consumed. This is equivalent to the number of operations per second (expressed in TOPS) divided by the power consumption (measured in watts) during execution of the workload at hand. Hence the common measure for power efficiency: TOPS/W. In computer vision terms, this can also be expressed as video frames per second (FPS) per watt. It follows that power efficiency is workload dependent and varies with the specific neural network being executed.
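
The equivalence between the two formulations can be checked numerically. This is a minimal sketch with hypothetical workload figures; the function names are illustrative only.

```python
import math


def efficiency_from_energy(total_ops: float, energy_joules: float) -> float:
    """Tera-operations per joule: operations executed divided by energy consumed."""
    return total_ops / energy_joules / 1e12


def efficiency_from_rates(tops: float, power_w: float) -> float:
    """TOPS/W: operations per second (in tera-ops) divided by power in watts."""
    return tops / power_w


# Hypothetical workload: 2e13 operations executed in 10 s at an average of 4 W.
total_ops, duration_s, power_w = 2e13, 10.0, 4.0
energy_joules = power_w * duration_s        # 1 W = 1 J/s
tops = total_ops / duration_s / 1e12        # sustained throughput in TOPS

by_energy = efficiency_from_energy(total_ops, energy_joules)
by_rates = efficiency_from_rates(tops, power_w)
print(by_energy, by_rates)                  # both formulations agree
assert math.isclose(by_energy, by_rates)
```

The duration cancels out, so one tera-operation per joule and one TOPS/W are the same quantity.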

There are two common pitfalls:

- Dividing the maximum theoretical compute capacity by the maximum power consumption published by vendors – this result is often misleading and does not reflect the actual capabilities that can be achieved in a given solution. The TOPS figure is usually a theoretical upper limit that is nearly impossible to reach and doesn’t reflect system utilization under a real workload.

- Generalizing about a processor’s power efficiency based on a single workload – both throughput and power consumption vary from task to task because system utilization, which depends on factors beyond compute alone, varies. Actual throughput and power will vary not only between tasks, but also between architectures, software toolchains and even performance goals [4].
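
To make the first pitfall concrete, the sketch below contrasts a datasheet-derived figure with one derived from a measured workload. The chip and all of its numbers are hypothetical, and the function names are illustrative only.

```python
def datasheet_tops_per_watt(peak_tops: float, max_power_w: float) -> float:
    """The misleading figure: theoretical peak compute over maximum power."""
    return peak_tops / max_power_w


def achieved_tops(ops_per_frame: float, measured_fps: float) -> float:
    """Compute actually delivered on a workload, in TOPS."""
    return ops_per_frame * measured_fps / 1e12


def measured_tops_per_watt(ops_per_frame: float, measured_fps: float,
                           measured_power_w: float) -> float:
    """Efficiency from measured throughput and measured power on one workload."""
    return achieved_tops(ops_per_frame, measured_fps) / measured_power_w


# Hypothetical chip: 10 TOPS peak, 5 W maximum power on the datasheet.
# Hypothetical measurement: 8e9 ops per frame, 250 FPS, 4 W while running.
peak_tops = 10.0
on_paper = datasheet_tops_per_watt(peak_tops, 5.0)
in_practice = measured_tops_per_watt(8e9, 250.0, 4.0)
utilization = achieved_tops(8e9, 250.0) / peak_tops

print(f"datasheet: {on_paper:.2f} TOPS/W")
print(f"measured:  {in_practice:.2f} TOPS/W")
print(f"utilization: {utilization:.0%}")
```

With these assumed values the measured efficiency is a quarter of the datasheet figure, because the workload only uses a fraction of the peak compute. A different network on the same chip would give yet another result, which is the second pitfall.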

Comparing AI Processor Power Efficiency

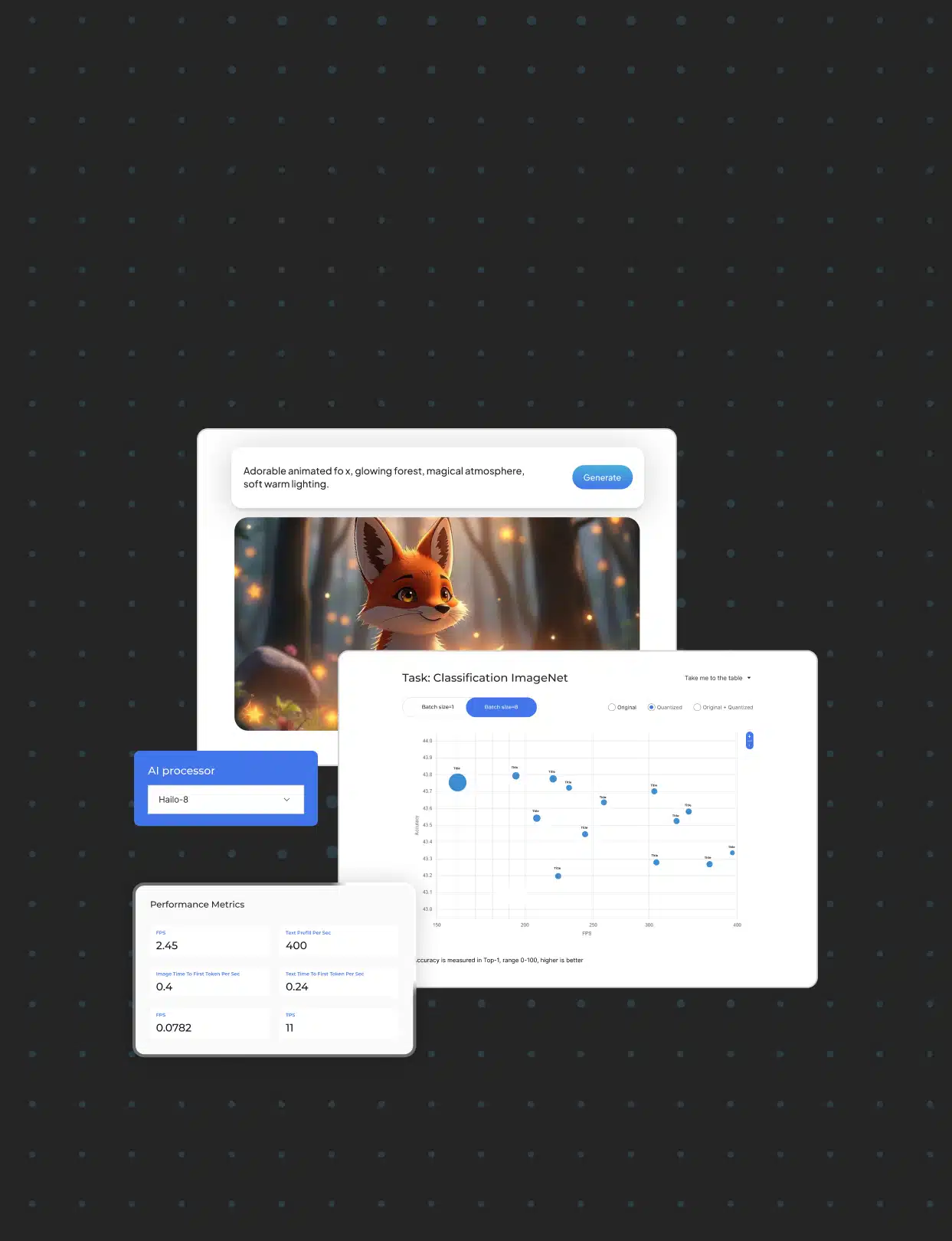

AI processors currently on the market offer a range of capabilities, especially in terms of power efficiency. It is important to use task-specific, actual measurements to evaluate and compare them. To illustrate this, the figure above shows publicly available performance figures (FPS and W) for leading processors on the market on a common industry benchmark neural model – ResNet-50 [5].

Generally speaking, domain-specific processors should have better efficiency than heterogeneous-compute SoCs. The latter usually have an efficient neural core whose neural efficiency is “weighed down” by the far less efficient CPU and/or GPU, while the former have purpose-built neural architectures optimized for high efficiency. Domain-specific architectures also vary, however: processors and architectures differ in the relative performance and efficiency gains they offer over customers’ existing computing solutions.

The best way to compare AI processor performance is to run and measure throughput and power consumption for the minimal system that is able to process the neural network, end to end, including all its relevant components (mainly memory). It is important to evaluate the processors using well-defined and preferably commonly used neural models, eliminating any divergence in major configurable processing parameters (input resolution, precision, batch size, hardware set up, etc.). Unfortunately, many of these considerations are often unclear in publicly available benchmarks and require closer scrutiny in the initial evaluation process.
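
As a sketch of such a comparison, the snippet below computes FPS per watt from the ResNet-50 figures listed in note [5] and refuses to rank results whose configuration differs. The `Benchmark` class and `rank_by_efficiency` function are illustrative names, and the check covers only resolution and batch size because those are the parameters the table reports. Precision and hardware setup are not listed there and would need separate verification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Benchmark:
    vendor: str
    processor: str
    resolution: str
    batch: int
    fps: float
    power_w: float

    @property
    def fps_per_watt(self) -> float:
        return self.fps / self.power_w


# Figures as published in the table in note [5] (ResNet-50).
RESULTS = [
    Benchmark("Hailo", "Hailo-8", "224x224", 1, 1223, 3),
    Benchmark("Intel Movidius", "Myriad X", "224x224", 1, 29.0, 2.5),
    Benchmark("Nvidia", "Jetson Nano", "224x224", 1, 37, 10),
    Benchmark("Nvidia", "Xavier AGX", "224x224", 1, 358, 12),
]


def rank_by_efficiency(results):
    """Sort by FPS/W, but only if all results share resolution and batch size."""
    configs = {(r.resolution, r.batch) for r in results}
    if len(configs) != 1:
        raise ValueError(f"mismatched benchmark configurations: {configs}")
    return sorted(results, key=lambda r: r.fps_per_watt, reverse=True)


for r in rank_by_efficiency(RESULTS):
    print(f"{r.processor:12s} {r.fps_per_watt:7.1f} FPS/W")
```

The ranking holds for this one network and configuration only. It says nothing about how each processor behaves on other workloads.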

In conclusion, it is crucial to measure and compare neural processing power efficiency based on actual measured throughput and power on a specific task, as general processor power efficiency is not a reliable, meaningful measure of the chip’s capabilities. To make an informed decision when choosing the most power-efficient chip, it is important to broadly consider architectural characteristics (e.g. heterogeneous SoC vs. purpose-built AI accelerator) and performance limitations (e.g. maximum TOPS and power consumption). Beyond providing the highest throughput per unit of power, a truly energy-efficient AI processor should be consistent in its efficiency, that is, maintain the same level of power efficiency as workloads vary and scale up and down. More about power efficiency and its connection to platform scalability in our coming blogs. Stay tuned!

Read more in our Practical Guide to Edge AI Power Efficiency white paper

This is the first installment in our Edge AI Power Efficiency series. Subscribe to the Hailo Edge AI Blog to stay tuned!

Sources and Remarks

[1] Norman P. Jouppi, Cliff Young, Nishant Patil, David Patterson, A Domain-Specific Architecture for Deep Neural Networks, Communications of the ACM, September 2018, Vol. 61 No. 9, Pages 50-59, https://cacm.acm.org/magazines/2018/9/230571-a-domain-specific-architecture-for-deep-neural-networks/fulltext

[2] According to a 2019 survey, a domestic CCTV system that includes a DVR (digital video recorder) and 4 cameras consumes, on average, up to 24W. This would mean, roughly speaking, 2.5-3W per camera and 10-15W for the DVR.

[3] Andrei, Horia & Ion, V. & Diaconu, Emil & Enescu, A. & Udroiu, I. (2019). Energy Consumption Analysis of Security Systems for a Residential Consumer. 1-4. 10.1109/ATEE.2019.8725002.

[4] For instance, some architectures allow a tradeoff of throughput, power consumption and latency, so the developer can choose to lower throughput to achieve lower latency on a limited power budget or to maximize FPS and latency by elevating power consumption.

[5] Performance for ResNet-50, 224 x 224, batch size = 1, is specified in the following table:

| Vendor | AI Processor | Resolution | Top-1 Accuracy | Batch | FPS | Power (W) |
|---|---|---|---|---|---|---|
| Hailo | Hailo-8 | 224×224 | 74.9% | 1 | 1223 | 3 |
| Intel Movidius | Myriad X | 224×224 | 74.6% | 1 | 29.0 | 2.5 |
| Nvidia | Jetson Nano | 224×224 | 74.6% | 1 | 37 | 10 |
| Nvidia | Xavier AGX | 224×224 | 74.2% | 1 | 358 | 12 |

Sources for Nvidia published specs and benchmarks:

Jetson Xavier AGX: https://developer.nvidia.com/embedded/jetson-agx-xavier-dl-inference-benchmarks ; https://developer.nvidia.com/embedded/jetson-agx-xavier

Jetson Nano: https://developer.nvidia.com/embedded/jetson-benchmarks

For Intel Myriad X, see: https://docs.openvinotoolkit.org/latest/openvino_docs_performance_benchmarks.html#increase_performance_for_deep_learning_inference
