Intelligent Video Analytics: A New Generation of Video Analytics Enabled by Powerful Edge AI

Intelligent Video Analytics (IVA) has penetrated many applications across industry verticals. It started as an on-premises, server-based capability, then became available in the cloud (relying on high-bandwidth connectivity) and, more recently, on edge devices. In this respect, “edge” includes intelligent cameras, intelligent NVRs (Network Video Recorders) and small dedicated appliances (often referred to as edge AI boxes or video analytics boxes) with built-in AI processing.

Today, AI-based on-device analytics solutions abound and their number is growing fast. Research leader Omdia estimated that in 2021, 26% of cameras and NVRs sold had AI capabilities. It forecasts that by 2025 this share will exceed 60%, with AI-capable cameras making up 64% of all IP cameras shipped worldwide. Edge AI is now entering the mainstream market and becoming widely available in mid-range solutions.

Edge AI has made it possible for cameras and other small devices to recognize objects and people, track movement and even identify behavior. However, these capabilities first arrived at the edge in a limited capacity and, as happens with many budding technologies, disappointed users with lackluster performance: low-quality detection and tracking, and unreliable alerts.

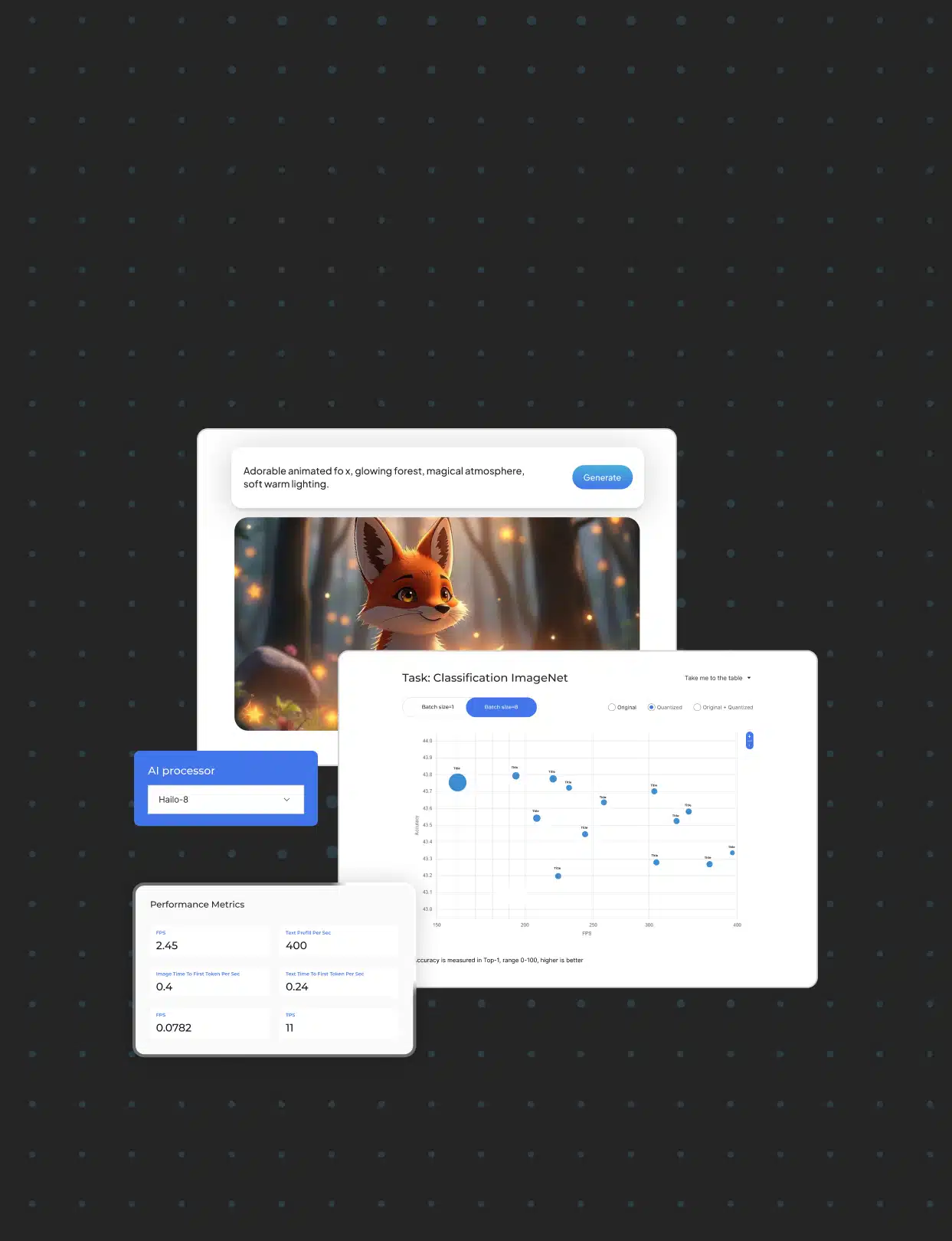

Analytics quality, which has gradually improved since those early days, is currently taking a big leap forward. A new generation of AI SoCs is starting to see adoption and is bringing more powerful, data center-level processing capabilities to the edge. Why do you need more AI TOPS (tera operations per second) on your camera or device? What is the point of being able to run a neural network (NN) model at thousands of frames per second (FPS)? Let’s dig in and see.

“An object detector running at 1000fps can process 33 concurrent low-resolution streams in real time. However, we do not have to assume that the streams come from different sources. In fact, they can all be from the same high-resolution stream!… [or] we can choose to process 4 streams at HD resolution (1280×720)… The main idea is that our basic object detector running at very-high FPS affords us a budget of pixels-per-second which we can use as we see fit.”

Mark Grobman, Hailo’s ML CTO, in his blog post about why anyone would need an object detector running at 1000fps.
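The pixels-per-second budget described in the quote can be sketched in a few lines. This is only an illustration: the 300×300 detector input and the 30 FPS stream rate are assumptions (the quote states neither), and `pixel_budget` and `max_streams` are hypothetical helper names.

```python
def pixel_budget(detector_fps, input_width, input_height):
    """Pixels per second the detector consumes at its native input size."""
    return detector_fps * input_width * input_height


def max_streams(budget, width, height, stream_fps):
    """Whole number of streams of the given size and frame rate that fit the budget."""
    return int(budget // (width * height * stream_fps))


# Assumed values for illustration only; the quote gives the 1000 FPS figure,
# but not the detector input size or the per-stream frame rate.
DETECTOR_FPS = 1000
INPUT_W, INPUT_H = 300, 300
STREAM_FPS = 30

budget = pixel_budget(DETECTOR_FPS, INPUT_W, INPUT_H)
print(max_streams(budget, INPUT_W, INPUT_H, STREAM_FPS))  # 33
```

With these assumptions, 1000 FPS divided among 30 FPS streams gives the 33 concurrent low-resolution streams mentioned in the quote. Passing a larger frame size to `max_streams` shows how the same budget can be spent on fewer, higher-resolution streams; the exact count depends on the input size you assume.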

What More Powerful AI Means for Cameras and Devices

On the device level, higher processing throughput coupled with better power efficiency (i.e. an increase in the processing power that is accompanied by lower power requirements) means:

- Low latency and higher frame rates – you are able to run AI tasks at real-time frame rates. You don’t have to settle for 10, 15 or 20 FPS if your application requires more. This is important for mission-critical applications, for detecting and tracking fast-moving objects and for responsive, user-friendly automated systems and interfaces.

- Higher accuracy and reliability –

  - The higher the video resolution, the greater the processing throughput required, so high-resolution (FHD, UHD) cameras that are used to detect small details accurately require more TOPS.

  - Greater processing power allows applications to use large, high-accuracy, state-of-the-art NN models. Models have been getting larger and more complex, in part to improve output accuracy. The first generation of edge AI processors and accelerators (not to mention GPU-based edge products) has been increasingly limited in its ability to run such models, at least without a noticeable degradation in accuracy.

  - Together, high-resolution cameras and high-accuracy AI translate into, for example, better detections and classifications, resulting in more reliable alerts.

- More robust capabilities, richer applications – more processing power lets you detect a greater number of objects and better identify them, as well as use multiple NN models simultaneously. Your capacity isn’t exhausted by line crossing, motion detection that captures objects moving at up to 30 mph, or rudimentary object detection capable of identifying up to 10 types of objects. You can do all of these and then some. Today, most useful IVA applications don’t rely on a single model, but rather combine several to produce a more meaningful insight.

- System-level cost savings – more powerful on-camera IVA can cover more RoI (region of interest) more effectively. With the capability to process high-resolution streaming video, users can deploy a higher-resolution or a multi-imager camera instead of multiple fixed, low-resolution ones to cover the same RoI. You can use fewer NVRs or analytics devices when each connects to and processes a larger number of cameras. You can also further reduce the amount of video you store and transmit via high-bandwidth connectivity because the metadata and analytics insights produced on-device are so much richer.

What is more, users are able to achieve a better combination of the above. There is always a tradeoff between resolution, frame rate, model size (or analytics application complexity) and solution cost. Getting a significantly larger pool of resources reduces the compromise you have to make. For instance, you can run meaningful analytics on multiple high-resolution video streams, simultaneously and at a real-time frame rate.
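To make the tradeoff concrete, here is a rough back-of-the-envelope sketch of how resolution, frame rate, number of streams and model cost add up against a device’s TOPS. Everything in it is an assumption for illustration: the per-model cost figures are made up, cost is assumed to scale linearly with pixel count, and the `utilization` factor (the share of peak TOPS that is usable in practice) is a placeholder rather than a measured value.

```python
from dataclasses import dataclass


@dataclass
class Model:
    name: str
    gops_per_frame: float  # cost at the reference resolution (hypothetical figure)
    ref_pixels: int        # pixel count at which that cost applies


def required_tops(models, width, height, fps, streams):
    """Estimate the sustained tera-operations per second for a pipeline.

    Assumes cost grows linearly with pixel count, which is only a rough
    approximation for convolutional networks.
    """
    pixels = width * height
    gops_per_second = sum(
        m.gops_per_frame * (pixels / m.ref_pixels) * fps * streams for m in models
    )
    return gops_per_second / 1000.0  # giga -> tera


def fits(models, width, height, fps, streams, device_tops, utilization=0.5):
    """True if the pipeline fits within the usable share of the device's peak TOPS."""
    needed = required_tops(models, width, height, fps, streams)
    return needed <= device_tops * utilization


# Hypothetical detector: 10 GOPs per frame at a 640x640 input.
detector = Model("detector", gops_per_frame=10.0, ref_pixels=640 * 640)
print(required_tops([detector], 1280, 720, fps=30, streams=1))  # about 0.675
```

Under these assumptions a single 720p stream at 30 FPS needs roughly 0.675 TOPS for this one model. Adding streams, raising the frame rate or stacking more models multiplies that figure, which is why a larger compute pool loosens every one of these constraints at once.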

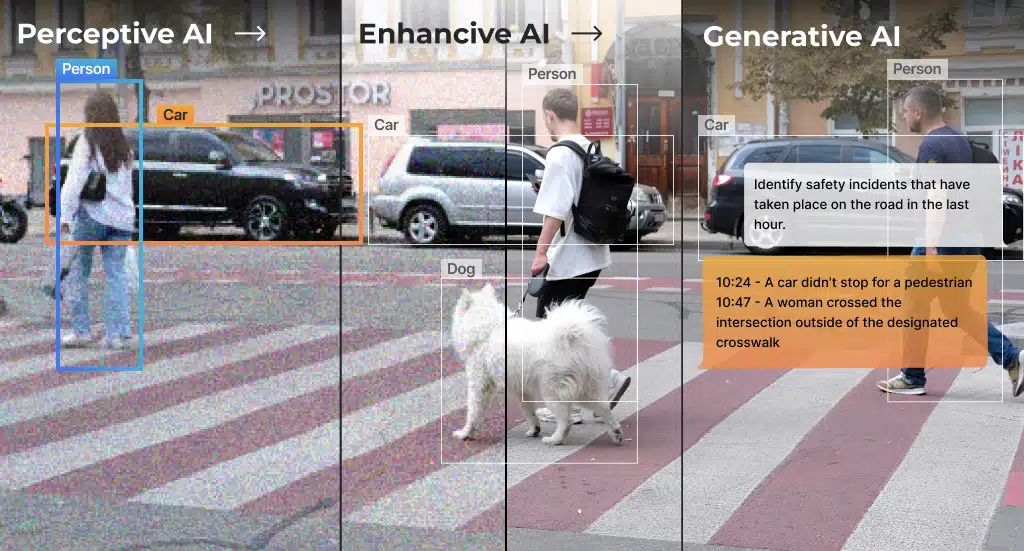

Next-Level Intelligent Video Analytics

Powerful edge AI enhances existing AI-enabled IVA applications and makes possible the emergence of new ones.

Besides improving the accuracy and functionality of existing analytics, these more robust processing capabilities can be leveraged to go from simple object and motion detection to scene understanding (a more accurate and metadata-rich view of the RoI) and context awareness (a perception and understanding of the static image or single detected object in a spatial and temporal context). This is where behavior analysis, intent analysis, emotion detection and other complex applications become possible.

This is also where we move to a greater degree of automation, with operator interaction minimized. Low latency and multitasking with robust neural workloads are prerequisites for closed-loop control applications such as intelligent checkout and POC, robotics and HMI (human-machine interaction) and ADAS/AV (assisted and autonomous driving). These are applications where safety and user experience cannot wait hundreds of milliseconds for the processing to conclude and the machine to respond. Finally, the extensive metadata that can be produced using all this AI processing throughput empowers a whole slew of Business Intelligence (BI) applications, performed on top of the basic level of real-time intelligent security monitoring.

Let’s Get Practical: More Powerful Edge AI Use Cases

Home Security Premises Monitoring

Enhancing geofencing or line crossing: in first-generation analytics you would use manual annotation to mark the restricted or guarded area. More powerful on-camera AI can make the camera “self-sustainable” and avoid the need for a closed loop with the VMS (video management system) just to handle the camera. Using methods such as semantic segmentation, the camera is able to follow the curvature of a driveway, overcome occlusions and adjust to camera shift (cameras can vibrate and move on their fixtures).
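As a minimal sketch of the idea, assume a segmentation model already produces a per-pixel class mask for each frame. The guarded zone is then whatever the mask labels as, say, driveway, instead of a hand-drawn polygon. The class id `DRIVEWAY`, the mask layout and the helper names below are all hypothetical.

```python
DRIVEWAY = 1  # hypothetical class id produced by a segmentation model


def foot_point(box):
    """Bottom-centre pixel of an (x1, y1, x2, y2) box with inclusive coordinates."""
    x1, y1, x2, y2 = box
    return (x1 + x2) // 2, y2


def in_zone(mask, box, zone_class=DRIVEWAY):
    """True if the object's foot point lies on a pixel labelled as the zone."""
    x, y = foot_point(box)
    if not (0 <= y < len(mask) and 0 <= x < len(mask[0])):
        return False  # foot point falls outside the frame
    return mask[y][x] == zone_class


# Tiny 6x4 mask: 1 marks driveway pixels, 0 marks everything else.
mask = [
    [0, 0, 0, 0, 0, 0],
    [0, 0, 1, 1, 0, 0],
    [0, 1, 1, 1, 1, 0],
    [1, 1, 1, 1, 1, 1],
]
print(in_zone(mask, (2, 0, 3, 2)))  # True: foot point (2, 2) is on the driveway
print(in_zone(mask, (0, 0, 1, 1)))  # False: foot point (0, 1) is off the driveway
```

Because the mask is recomputed from the live image, the zone follows the scene if the camera shifts, which a fixed manual annotation cannot do.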

Enabling automated video indexing: the camera automatically logs events and creates an activity-based searchable log based on its understanding of the types of areas in its FOV, the type of activity you’re interested in and what counts as irregular or suspicious activity. This is based on very rich metadata that is produced by multiple NN models running simultaneously, possibly on high-resolution video and in parallel with AI-based image processing.

Retail Security and Analytics

Enhancing store occupancy monitoring: today, footfall and shoppers going in and out of the store are monitored using line crossing detection. A more powerful AI camera allows more accurate monitoring (no missed counts) based on depth estimation and blind spot recovery. Moreover, automated entry point detection becomes possible, which is useful in cases where the entry point is dynamic or not clearly visible or defined (e.g. there are no doors).
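For reference, the basic line crossing approach mentioned above can be sketched as follows. This is the simple baseline, not the depth-based method; it assumes a tracker already supplies each shopper’s positions over time, treats the counting line as infinite, and arbitrarily calls the positive side “inside”. The function names are hypothetical.

```python
def side(line, point):
    """Sign of the cross product: positive on one side of the line, negative on the other."""
    (ax, ay), (bx, by) = line
    px, py = point
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)


def count_crossings(line, track):
    """Count entries and exits for one tracked object's sequence of positions."""
    entries = exits = 0
    prev = None
    for point in track:
        s = side(line, point)
        if s == 0:
            continue  # exactly on the line: wait for the next position
        if prev is not None and (s > 0) != (prev > 0):
            if s > 0:
                entries += 1  # moved onto the positive ("inside") side
            else:
                exits += 1    # moved back to the negative ("outside") side
        prev = s
    return entries, exits


door_line = ((0, 5), (10, 5))  # horizontal counting line at y = 5
print(count_crossings(door_line, [(5, 3), (5, 4), (5, 6), (5, 7)]))  # (1, 0)
```

A count is missed whenever the tracker loses a shopper near the line, for example behind another person, which is the kind of gap that richer on-camera analytics aims to close.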

Enabling Intelligent Retail Analytics: powerful AI cameras and devices can provide BI in addition to regular ongoing security monitoring. Important business insights can be generated based on customer trajectory extraction (where did the customer head or pause? what did they skip? what caught their eye?), customer intent projection and auto-detection of multiple virtual cross points (for instance, for generating store heatmaps). This is a whole new type of analytics becoming available to physical retailers. You can read more about this and other emerging Smart Retail applications on our blog.
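One simple way to turn extracted trajectories into a store heatmap is to count how many position samples fall into each cell of a coarse grid. The sketch below assumes trajectories arrive as lists of pixel coordinates sampled at a fixed rate, so that counts approximate dwell time; `build_heatmap` is a hypothetical helper, not part of any product API.

```python
def build_heatmap(trajectories, frame_w, frame_h, cols, rows):
    """Count how many trajectory samples fall in each cell of a cols x rows grid."""
    heat = [[0] * cols for _ in range(rows)]
    for track in trajectories:
        for x, y in track:
            if 0 <= x < frame_w and 0 <= y < frame_h:
                c = int(x * cols / frame_w)  # grid column for this sample
                r = int(y * rows / frame_h)  # grid row for this sample
                heat[r][c] += 1
    return heat


# Two short hypothetical trajectories in a 100x100 frame, binned into a 2x2 grid.
tracks = [[(10, 10), (20, 20), (60, 10)], [(90, 90)]]
print(build_heatmap(tracks, 100, 100, cols=2, rows=2))  # [[2, 1], [0, 1]]
```

Cells with high counts are where customers linger, and cells with none are the areas they skip.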

Enhancing loss prevention: fully or partially automate loss prevention monitoring by store staff and generate more reliable results (fewer misses and false positives). This is made possible by the advanced entry and exit monitoring capability, customer behavior monitoring and intent projection. BI insights can also contribute, helping identify more vulnerable products and locations in the store for increased monitoring (i.e. product-aware protection).

Privacy Is Key

With heavy regulatory activity around it, privacy is considered the leading inhibitor of massive IVA deployment. Masking unwanted video content is becoming a fundamental privacy feature on cameras and other devices, but traditional static masking does not reliably protect privacy and can even block necessary information. It may also be limited by the number of people in the frame, with the N-th person not being properly masked because the system’s processing limit was reached.

It might be counter-intuitive, but a more powerful edge AI camera can actually improve user protection. Powerful edge AI is enhancing the ability to guarantee privacy by enabling smart dynamic privacy masking. This on-camera, content-aware masking removes any trace of raw content before the video reaches non-volatile storage. That is, the user can collect the necessary behavior data and create a profile without identifying the customer. The PII (personally identifiable information) does not reach the VMS and is not stored in any way. This capability requires high-resolution, real-time processing that can accurately trace dynamic content on the fly.
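The core of dynamic masking can be sketched as a per-frame step that blanks every detected region before the frame is written anywhere. The example below is a simplified illustration: it assumes a detector already supplies one bounding box per person, represents a frame as a plain list of pixel rows, and fills boxes with a constant value rather than blurring. `mask_regions` is a hypothetical helper.

```python
def mask_regions(frame, boxes, fill=0):
    """Return a copy of the frame with every (x1, y1, x2, y2) box filled in.

    Coordinates are inclusive and clipped to the frame, so boxes that extend
    past the edge are still masked.
    """
    height, width = len(frame), len(frame[0])
    masked = [row[:] for row in frame]  # leave the raw frame untouched
    for x1, y1, x2, y2 in boxes:
        for y in range(max(0, y1), min(height - 1, y2) + 1):
            for x in range(max(0, x1), min(width - 1, x2) + 1):
                masked[y][x] = fill
    return masked


frame = [[9] * 4 for _ in range(3)]         # 4x3 frame of dummy pixel values
detections = [(1, 0, 2, 1), (3, 2, 10, 10)]  # hypothetical person boxes
print(mask_regions(frame, detections))
# [[9, 0, 0, 9], [9, 0, 0, 9], [9, 9, 9, 0]]
```

Every box in the list is processed, so the N-th person is masked like the first, provided the device has the throughput to detect everyone in the frame in real time. In a real pipeline only the masked copy would be stored or transmitted.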

Additionally, enabling full-fledged AI capabilities on edge devices minimizes the need to go off-device. If you don’t need to store hours of video footage or send the video stream to the cloud for video analytics, there are fewer data security and customer privacy vulnerabilities and points of failure.

We have entered the age of more capable, accurate and reliable on-device IVA. Cameras and devices that are empowered to perform complex AI processing are getting more accessible and affordable. According to Omdia, “AI analytics will become as ubiquitous as megapixel cameras are in the video surveillance market of the future. Powerful edge AI analytics will be a game changer for video surveillance systems across industry verticals – from security and Smart City, to brick-and-mortar retail and manufacturing.”
