Automatic License Plate Recognition with Hailo Processors

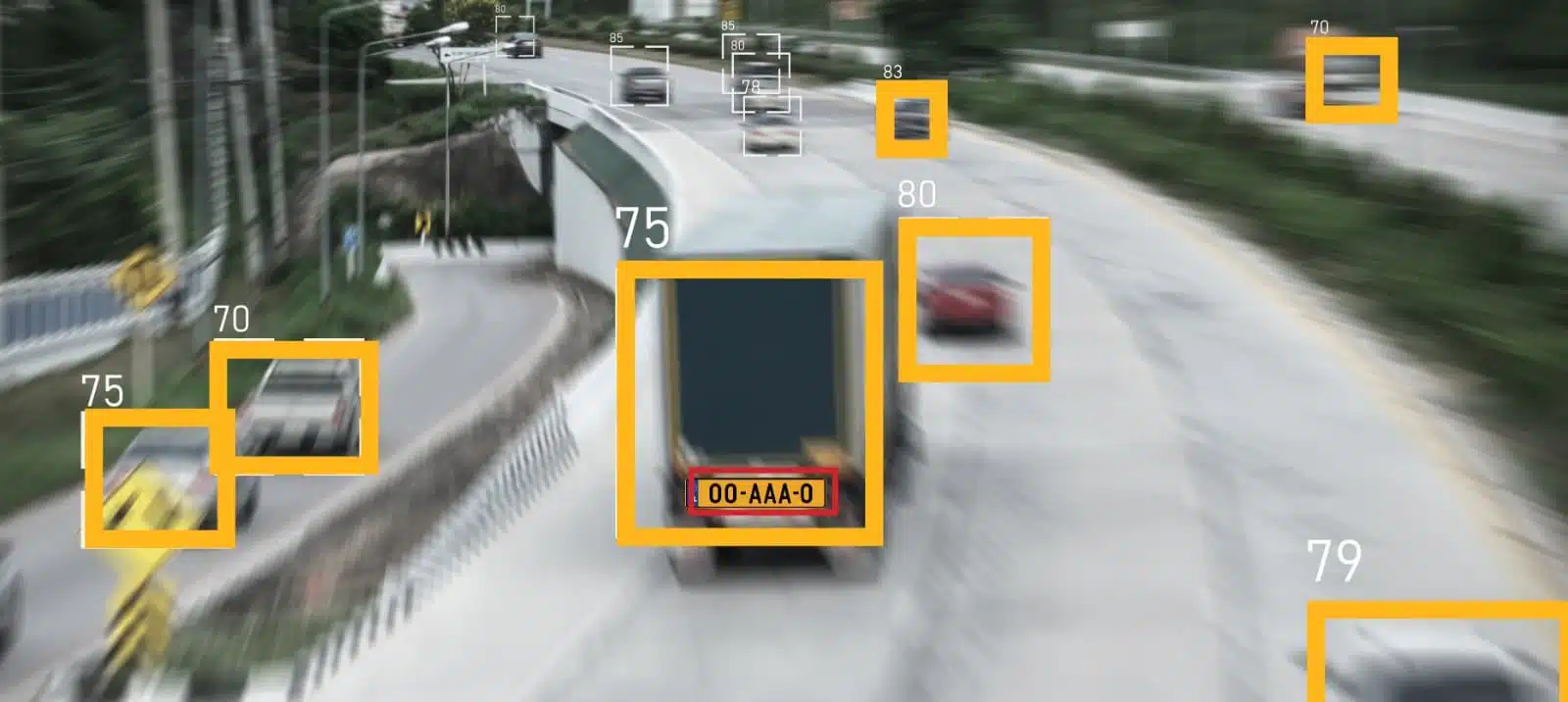

ALPR, or Automatic License Plate Recognition (also known as License Plate Recognition or Number Plate Recognition, LPR / NPR), is a technology that uses cameras and specialized software to automatically capture images of vehicle license plates and convert their alphanumeric characters into digital data. This data can then be compared in real time against various databases for many purposes. In this blog post, we present Hailo’s ALPR implementation.

ALPR is a common pipeline in outdoor video analytics deployments. It is typically deployed in one of two ways: integrated directly within the camera itself, or running on a ruggedized processing device connected to one or more cameras. This ALPR solution is well suited to Intelligent Transportation Systems (ITS) and law enforcement systems, and demonstrates how Hailo enables real-life machine learning deployment in AI-based products. This blog post focuses on the second scenario, showcasing a complete, deployable AI pipeline based on Hailo-8. The first scenario, where LPR runs directly on the camera, can be implemented with the Hailo-15 high-performance vision processor.

The setup described here includes a Full HD camera, a camera processor module, a Hailo-8 AI M.2 module, and a GStreamer application that integrates the Computer Vision (CV) pipeline with multiple neural networks.

Understanding Automatic License Plate Recognition

An Automatic License Plate Recognition (ALPR) system is one of the most popular video analytics applications for smart cities. Deployed on highways, at toll booths, and in parking lots, ALPR enables rapid vehicle identification, congestion control, vehicle counting, law enforcement, automatic fare collection, and more.

With a powerful edge AI processor such as Hailo-8 or Hailo-15, ALPR can run in real time on edge devices, which is crucial for:

- Reducing miss rates with better-performing neural networks that are more resilient to a wide range of scenarios.

- Reducing detection latency.

- Lowering Total Cost of Ownership (TCO) compared to existing systems, including installation and maintenance costs.

- Enhancing data protection and privacy by eliminating the need to send raw video to external / cloud servers.

The high compute power of Hailo-8 and Hailo-15 also enables concurrent, high-accuracy detection of multiple vehicles at long distances. The accuracy of traditional object detection networks tends to decrease significantly (by up to 5x) on small objects. For instance, a vehicle 100 meters away occupies only a few hundred pixels in a Full HD frame, so a high-capacity neural network is needed to detect it reliably. This is a common challenge for ALPR systems, and the high compute capacity of Hailo processors addresses it efficiently.
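The "few hundred pixels" figure can be sanity-checked with a pinhole camera model. The sketch below is a back-of-envelope estimate, not part of the Hailo pipeline. The 60-degree horizontal field of view and the 1.8 m × 1.5 m vehicle front are illustrative assumptions, and the function name `object_footprint_px` is hypothetical.

```python
import math

def object_footprint_px(width_m, height_m, distance_m,
                        image_width_px=1920, hfov_deg=60.0):
    """Approximate pixel size of an object with a pinhole camera model.

    Assumes square pixels, so one focal length (in pixels) serves both axes.
    """
    # Focal length in pixels, derived from the horizontal field of view.
    focal_px = (image_width_px / 2) / math.tan(math.radians(hfov_deg) / 2)
    return focal_px * width_m / distance_m, focal_px * height_m / distance_m

# Assumed vehicle front: 1.8 m wide, 1.5 m tall, seen from 100 m with a 60-degree lens.
w, h = object_footprint_px(1.8, 1.5, 100.0)
print(f"{w:.0f} x {h:.0f} px = {w * h:.0f} pixels "
      f"({100 * w * h / (1920 * 1080):.3f}% of the frame)")
# prints: 30 x 25 px = 746 pixels (0.036% of the frame)
```

A narrower lens increases the footprint proportionally, and a wider one shrinks it further. In either case the vehicle covers a tiny fraction of the frame, which is why detector capacity matters.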

In the sample application described in this blog, the Hailo-8 M.2 module, with 26 Tera Operations per Second (TOPS), was integrated with an i.MX 8, a common entry-level embedded processor, to demonstrate how the computationally intensive ALPR task can be offloaded from, and decoupled from, the host. This configuration runs in real time without batching. While this setup uses an i.MX 8, Hailo-8 can be integrated with other host processors, either x86 or Arm-based. Furthermore, the Hailo-15 VPU provides a self-contained solution that eliminates the need for an external host processor.

The Hailo AI TAPPAS ALPR system was implemented with GStreamer on a Hailo-8 M.2 card and Kontron’s pITX-i.MX 8M board with NXP’s i.MX 8 processor, running in real time (without batching) with a USB camera at FHD input resolution.

ALPR Application Pipeline

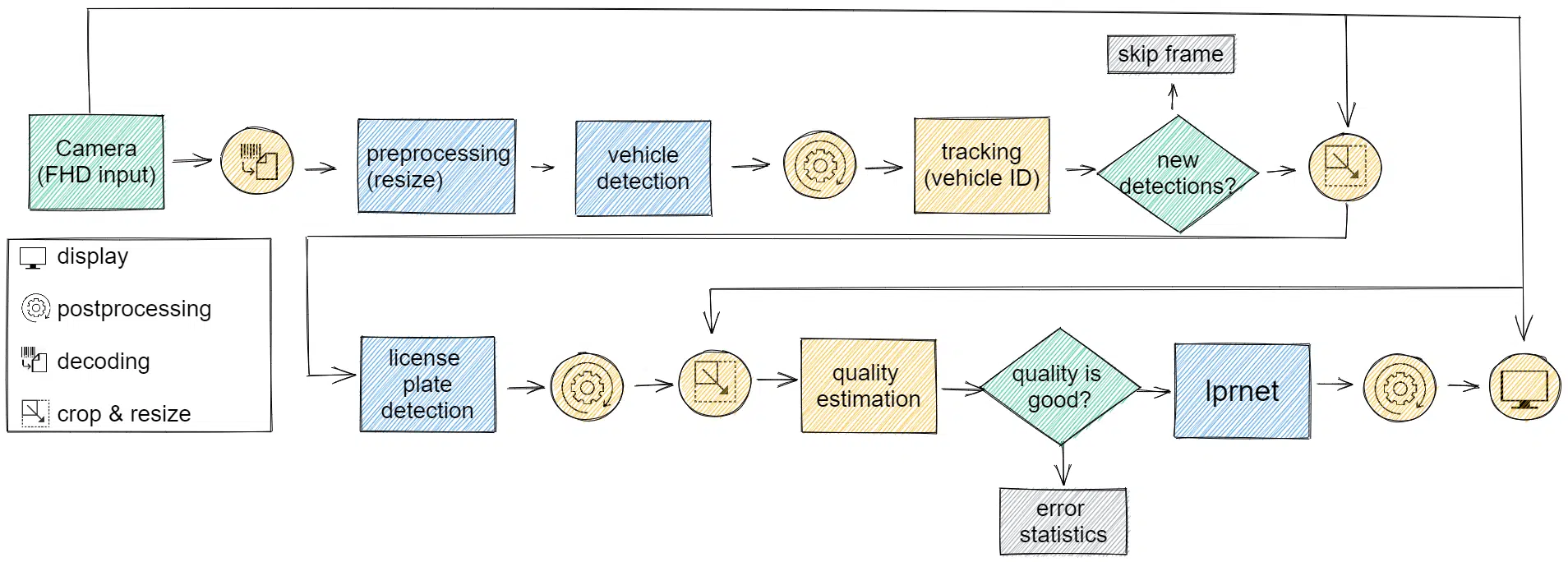

The Hailo ALPR application pipeline is depicted in the diagram above. The pipeline includes three neural networks running on the Hailo-8 AI accelerator: (1) vehicle detection, (2) license plate detection, which locates license plates within detected vehicles, and (3) character recognition on the license plate by LPRNet. The entire pipeline is built using the GStreamer framework.
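The three-stage cascade can be summarized in plain Python to show how data flows between the networks. This is a minimal sketch, not the TAPPAS implementation: in the real application each stage is a GStreamer element running on Hailo-8, and each crop is resized to the network input before inference, which is omitted here. `run_cascade`, `PlateRead` and the detector callables are hypothetical stand-ins.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1) in pixels

@dataclass
class PlateRead:
    vehicle_box: Box
    plate_box: Box  # full-frame coordinates
    text: str

def run_cascade(frame,
                detect_vehicles: Callable[[object], List[Box]],
                detect_plates: Callable[[object], List[Box]],
                read_plate: Callable[[object], str],
                crop: Callable[[object, Box], object]) -> List[PlateRead]:
    """Three-stage cascade: vehicles -> plates inside each vehicle -> characters."""
    reads = []
    for vbox in detect_vehicles(frame):
        vehicle_img = crop(frame, vbox)
        for px0, py0, px1, py1 in detect_plates(vehicle_img):
            # Plate boxes come back in vehicle-crop coordinates; shift them to the frame.
            pbox = (vbox[0] + px0, vbox[1] + py0, vbox[0] + px1, vbox[1] + py1)
            reads.append(PlateRead(vbox, pbox, read_plate(crop(frame, pbox))))
    return reads
```

Because plate detection only sees vehicle crops, its cost scales with the number of vehicles rather than with the full frame size.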

To optimize application latency, two mechanisms avoid unnecessary computation: the Hailo GStreamer tracker, which skips vehicles that are already being recognized, and quality estimation, which prevents LPRNet from running on blurred license plates. The pipeline was designed to meet the challenging requirement of running in real time at 1080p input resolution with multiple vehicles in each frame.
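The decision of whether to run LPRNet on a given plate crop can be sketched as a small gate that combines the tracker ID with the size and quality thresholds. This is an illustrative sketch under assumptions: `RecognitionGate` and its parameter names are hypothetical, and the actual TAPPAS logic may differ (for example, it may re-read a plate several times before accepting a result).

```python
from typing import Dict

class RecognitionGate:
    """Decides whether LPRNet should run on a plate crop (hypothetical helper)."""

    def __init__(self, min_quality: float, min_plate_width: int):
        self.min_quality = min_quality
        self.min_plate_width = min_plate_width
        self.recognized: Dict[int, str] = {}  # tracker ID -> recognized plate text

    def should_recognize(self, track_id: int, plate_width: int, quality: float) -> bool:
        if track_id in self.recognized:
            return False  # vehicle already read: skip redundant inference
        if plate_width < self.min_plate_width:
            return False  # plate too small to read reliably
        return quality >= self.min_quality  # skip blurred plates

    def record(self, track_id: int, text: str) -> None:
        self.recognized[track_id] = text
```

The tracker is what makes this cheap: without a stable ID per vehicle, every frame would look like a new vehicle and LPRNet would run on the same plate repeatedly.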

All the neural networks were compiled using the Hailo Dataflow Compiler. Pre-trained weights and precompiled models are available in the Hailo Model Zoo for easy integration. Additionally, the Hailo Model Zoo supports retraining models on custom datasets for optimal performance in specific environments. Note that all the provided neural networks were trained on relatively generic use cases and can be further optimized (in terms of size/accuracy/fps) with dedicated datasets.

Blue blocks represent elements running on the Hailo-8 device.

Orange blocks represent elements running on the embedded host processor.

Vehicle Detection

For vehicle detection in this sample pipeline, we used a neural network based on YOLOv5m with a single class that aggregates all types of vehicles. YOLOv5 is a powerful single-stage object detector released in 2020 and implemented in PyTorch. The frames received from the camera are 1920×1080 and were resized to 640×640 before being processed by YOLOv5m; the resize did not affect vehicle detection accuracy. To train the vehicle detection network, we combined several datasets and aligned them to the same annotation format. Note that different datasets may categorize vehicles differently; this network was trained to map all kinds of vehicles to a single class. Using a high-capacity neural network such as YOLOv5m enables highly accurate vehicle detection at great distances, allowing the application to detect and track vehicles even on highways.
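Because the detector sees a 640×640 image, its boxes must be scaled back to 1920×1080 before tracking and cropping. The sketch below assumes a plain stretch resize (no letterboxing), so x and y scale independently; if the pipeline letterboxes instead, the padding must be subtracted and a single scale used. `map_box_to_frame` is a hypothetical helper name.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1)

def map_box_to_frame(box: Box, net_w: int, net_h: int, roi: Box) -> Box:
    """Map a box predicted on a net_w x net_h input back to frame coordinates.

    Assumes the ROI (the full frame or a crop of it) was stretched to the
    network input without letterboxing, so x and y scale independently.
    """
    rx0, ry0, rx1, ry1 = roi
    sx = (rx1 - rx0) / net_w  # horizontal scale factor
    sy = (ry1 - ry0) / net_h  # vertical scale factor
    x0, y0, x1, y1 = box
    return (rx0 + x0 * sx, ry0 + y0 * sy, rx0 + x1 * sx, ry0 + y1 * sy)

FRAME = (0.0, 0.0, 1920.0, 1080.0)
print(map_box_to_frame((320.0, 320.0, 330.0, 335.0), 640, 640, FRAME))
# prints: (960.0, 540.0, 990.0, 565.3125)
```

The same scale factors show how small distant vehicles become at the network input: a 30×25 px vehicle in the Full HD frame shrinks to about 10×15 px at 640×640. The same function applies to the license plate detector by passing the vehicle crop as `roi` and 416×416 as the network size.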

While this blog details an ALPR pipeline based on YOLOv5m, we recommend also considering newer YOLO models, e.g., YOLOv8s and YOLOv10s from the Hailo Model Zoo, and evaluating their accuracy and characteristics with the Hailo Model Explorer to find the best fit.

| Parameters | Compute (GMAC) | Input Resolution | Training Data | Validation Data | Accuracy |
|---|---|---|---|---|---|
| | 25.63 | 640x640x3 | 370k images (internal dataset) | 5k images (internal dataset) | 46.059AP* |

*YOLOv5m network trained on COCO2017 achieves 33.9AP on the same validation dataset.

License Plate Detection

Our license plate detection neural network, in this sample, is based on Tiny-YOLOv4 with a single class. Tiny-YOLOv4 is a compact single-stage object detector released in 2020 and trained with the Darknet framework. Although its accuracy on the COCO dataset is modest (19mAP), we found it sufficient for detecting license plates in single-vehicle images. To train it, we used various license plate datasets and negative samples (images of vehicles without license plates).

| Parameters | Compute (GMAC) | Input Resolution | Training Data | Validation Data | Accuracy |
|---|---|---|---|---|---|
| 5.87M | 6.8 | 416x416x3 | 100k images (internal dataset) | 5k images (internal dataset) | 74.083AP |

LPRNet

LPRNet is a convolutional neural network with variable-length sequence decoding driven by connectionist temporal classification (CTC) loss, trained with PyTorch. This network was trained mostly on autogenerated synthetic datasets of Israeli license plates, which makes it suitable for recognizing license plates that contain digits only. To adapt LPRNet to other regions, we recommend using a mix of synthetic and real datasets that represent the license plates of that region and changing the number of classes if needed (for example, to add a different alphabet). The Hailo Model Zoo provides re-training instructions and a Jupyter notebook that shows how to generate the synthetic dataset used to train LPRNet.
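A CTC-trained network outputs a score for every class at each horizontal position (time step) of the plate, plus a special "blank" class. The simplest way to turn those scores into text is greedy (best-path) decoding, sketched below. The source does not state whether Hailo's postprocessing uses greedy or beam-search decoding, and the digits-only alphabet with the blank as the last index is an assumption for illustration.

```python
from typing import List, Sequence

ALPHABET = "0123456789"  # digits only, matching a numbers-only plate model
BLANK = len(ALPHABET)    # assumed: the CTC blank is the last class index

def ctc_greedy_decode(scores: Sequence[Sequence[float]]) -> str:
    """Greedy (best-path) CTC decoding.

    scores[t][c] is the score of class c at time step t. Take the argmax at
    each step, collapse consecutive repeats, then drop blanks.
    """
    out: List[str] = []
    prev = None
    for step in scores:
        best = max(range(len(step)), key=lambda c: step[c])
        if best != prev and best != BLANK:
            out.append(ALPHABET[best])
        prev = best
    return "".join(out)
```

Repeats are collapsed only when they are not separated by a blank, which is how CTC represents doubled characters: the per-step sequence 1, 1, blank, 1 decodes to "11". Adding an alphabet for another region means extending `ALPHABET` and the number of output classes together.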

| Parameters | Compute (GMAC) | Input Resolution | Training Data | Validation Data | Accuracy |
|---|---|---|---|---|---|
| 7.14M | 36.54 | 75x300x3 | 4M images (internal dataset) | 5k images (internal dataset) | 99.86%* |

*Percentage of license plates that are fully recognized (from the entire validation dataset)

Deploying ALPR using the Hailo TAPPAS

We have released the ALPR application sample as part of the Hailo TAPPAS. The sample builds the pipeline using GStreamer in C++ and lets developers run the application on either a video file or a USB camera. Additional arguments control the detectors (for example, the detection threshold), the tracker (for example, keep/lost frame rate), and the quality estimation (minimum license plate size and quality thresholds).

The Hailo Model Zoo also allows you to re-train the neural networks with your own data and port them to the TAPPAS ALPR application for fast domain adaptation and customization. The goal of the Hailo ALPR application is to provide a solid baseline for building an ALPR product by implementing the full ML application pipeline on Hailo-8 and an embedded host processor for edge deployment.

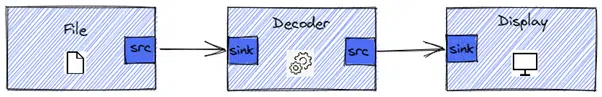

What is GStreamer?

GStreamer is an open-source media framework for building powerful and complex media application pipelines. A GStreamer pipeline is constructed by connecting GStreamer plugins together. Each plugin is responsible for a specific function, and together they form the full pipeline. For example, a simple pipeline that displays a video file would include one plugin to read the file, a second to decode its format, and a third to display the decoded frames. Each plugin declares its inputs (sink pads) and outputs (source pads), and the framework assembles the full pipeline from these LEGO-like building blocks.
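The building-block idea can be illustrated with a toy model: each element advertises what it accepts and what it produces, and two elements can only be linked when those match. This is a simplified sketch, not the GStreamer API. Real caps negotiation works on sets of formats rather than string equality, and the `Element` class, `link` function and the "encoded-bytes" label are hypothetical. The element names in the output (`filesrc`, `decodebin`, `autovideosink`) are real GStreamer elements.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Element:
    desc: str                 # gst-launch style element description
    sink_caps: Optional[str]  # what the element accepts on its sink pad (None for sources)
    src_caps: Optional[str]   # what it produces on its source pad (None for sinks)

def link(elements: List[Element]) -> str:
    """Verify each source pad matches the next sink pad; return a launch string."""
    for up, down in zip(elements, elements[1:]):
        if up.src_caps != down.sink_caps:
            raise ValueError(f"cannot link {up.desc!r} ({up.src_caps}) "
                             f"to {down.desc!r} ({down.sink_caps})")
    return " ! ".join(e.desc for e in elements)

player = [
    Element("filesrc location=video.mp4", None, "encoded-bytes"),  # read the file
    Element("decodebin", "encoded-bytes", "video/x-raw"),          # decode it
    Element("autovideosink", "video/x-raw", None),                 # display frames
]
print(link(player))
# prints: filesrc location=video.mp4 ! decodebin ! autovideosink
```

The printed string uses the syntax accepted by `gst-launch-1.0`; in practice a `videoconvert` element is often placed before the sink to handle raw-format conversion. The ALPR pipeline is built the same way, with Hailo inference and postprocessing elements in place of the simple player stages.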

Hailo’s GStreamer Support

As part of HailoRT (Hailo’s runtime library), we release a GStreamer plugin for AI inference on the Hailo-8 AI processor (libgsthailo). This plugin handles the entire configuration and inference process on the device, which makes integrating Hailo-8 into GStreamer pipelines easy and straightforward. It also enables inference of a multi-network pipeline on a single Hailo-8 to support a full ML system.

In addition to the standard HailoRT plugin, the ALPR application uses additional GStreamer plugins released with the TAPPAS package: the Hailo GStreamer tools (libgsthailotools).

- Tracking: this GStreamer plugin implements a Kalman filter tracker and is responsible for tracking generic objects in an image. It receives the output of each run of the detection network and associates objects with those from past frames, assigning each a unique ID across frames. The tracker can also predict object locations in frames where they were not detected.

- Quality estimation: this plugin estimates the quality of an image by calculating the variance of its edges. It receives an input image and computes a blurriness score.

- Crop & Resize: this plugin generates crops of an image at specified locations. It receives an image and a series of ROIs (Regions of Interest, i.e., boxes) and generates a fixed-size image for each ROI.

- Hailo Filter: a general plugin that allows you to embed custom C++ code, such as postprocessing functionality, into the pipeline.

- Hailo overlay: to draw the final output of the application, we use a specialized plugin that aggregates all the predictions, draws the bounding boxes and metadata, and generates the final output frame.
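The quality estimation plugin scores blur by the variance of an image's edges. One common way to compute such a score is the variance of the Laplacian, sketched below in plain Python on a grayscale image given as nested lists. Whether the TAPPAS plugin uses exactly this 3×3 Laplacian kernel is an assumption, and `laplacian_variance` is a hypothetical name.

```python
from typing import List

def laplacian_variance(gray: List[List[float]]) -> float:
    """Blur score: variance of the 3x3 Laplacian response over the image interior.

    Sharp images have strong edges and therefore a high variance; blurred or
    smoothly shaded images score low. Requires an image of at least 3x3 pixels.
    """
    h, w = len(gray), len(gray[0])
    responses = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # 4-neighbour Laplacian: sum of neighbours minus 4x the centre pixel.
            lap = (gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1]
                   + gray[y][x + 1] - 4 * gray[y][x])
            responses.append(lap)
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)
```

A plate crop would then be passed to LPRNet only if its score exceeds a tuned threshold. Because the Laplacian is zero on flat regions and on linear gradients, the score responds to sharp character edges rather than to overall brightness or smooth shading.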

Performance

The following table summarizes the performance of the ALPR application on Hailo-8 and i.MX 8 with a USB camera at FHD input resolution (1920×1080), as well as a breakdown of the standalone performance of each neural network.

| | FPS | Latency | Accuracy |
|---|---|---|---|
| Full Application (x86) | 60 | | 99.86% |
| Full Application (i.MX) | 60 | | 99.86% |
| Standalone Vehicle Detection | 80 | 1.39 ms | 46.059AP |
| Standalone License Plate Detection | 1299.8 | 44.37 ms | 74.083AP |
| Standalone LPRNet | 303 | 5.81 ms | 99.86% |

Figure 7 – Performance of the ALPR application running on Hailo-8

The Hailo AI ALPR solution

The Hailo ALPR solution provides a complete and versatile end-to-end framework for deploying AI-powered intelligent transportation applications on the edge. It includes the entire application pipeline, deployed in GStreamer with Hailo TAPPAS, and re-training capabilities for each neural network to enable customization with the Hailo Model Zoo. This automotive AI application serves as a robust foundation for developing an ALPR product with Hailo-8. A similar pipeline can be implemented on Hailo-15. For more information and access to Hailo models, check out the Hailo TAPPAS GitHub repository. To share your Hailo-based LPR project, or to consult with our community and experts on the best ways to deploy LPR in your system, go to community.hailo.ai.

This work is a collaboration between Tamir Tapuhi, Nadiv Dharan, Gilad Nahor, Rotem Bar, Itai Ofir, Yuval Belzer and Yuval Bernstein.

Bibliography

Bochkovskiy, A., Wang, C.-Y., & Liao, H.-Y. M. (2020). YOLOv4: Optimal Speed and Accuracy of Object Detection. Tech Report.

Laroca, R., Zanlorensi, L. A., Gonçalves, G. R., Todt, E., Schwartz, W. R., & Menotti, D. (2019). An Efficient and Layout-Independent Automatic License Plate Recognition System Based on the YOLO detector. IET Intelligent Transport Systems.

Silva, S. M., & Jung, C. R. (2018). License Plate Detection and Recognition in Unconstrained Scenarios. ECCV.

Zherzdev, S., & Gruzdev, A. (2018). LPRNet: License Plate Recognition via Deep Neural Networks. arXiv.