Video Management Systems: a growing market with growing demands

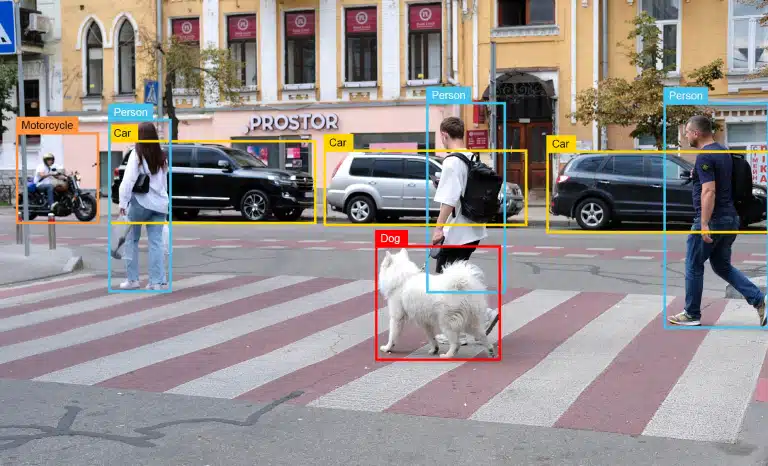

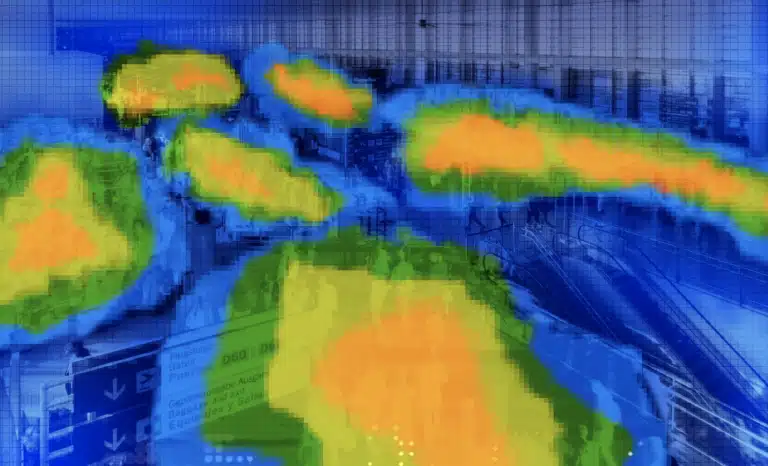

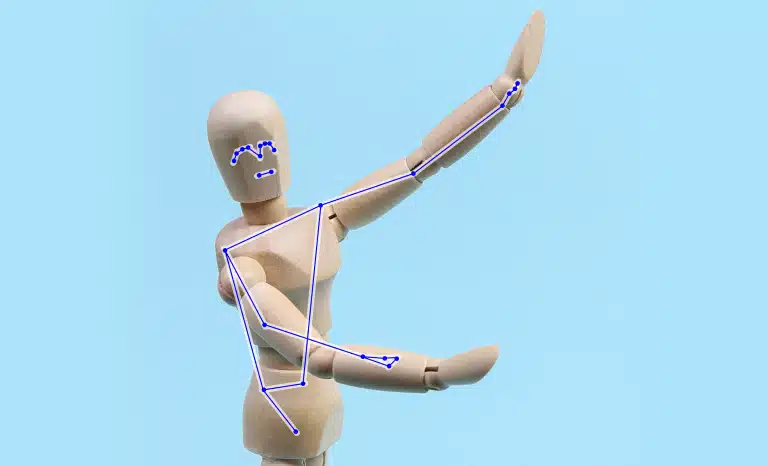

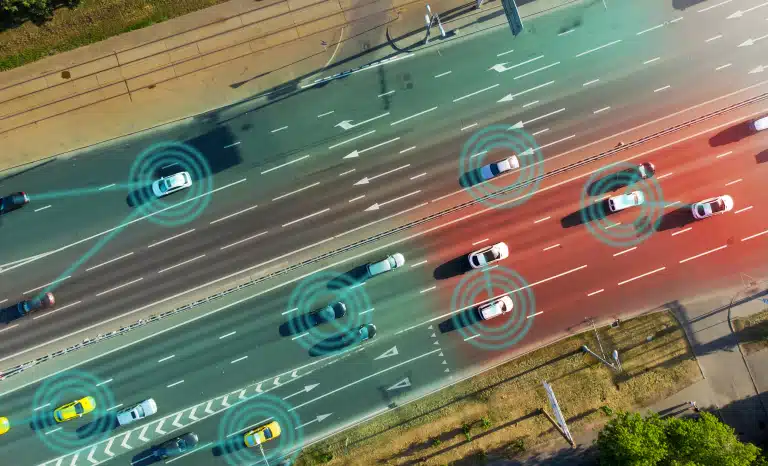

A Video Management System (VMS) is an umbrella term for the software components involved in handling multiple video channels at scale. The system collects input from multiple cameras and other sensors and covers all related aspects of video handling, such as storage, retrieval, analysis, and display. Video analytics is becoming an increasingly important part of the VMS, enabling advanced capabilities that are applied to multiple streams concurrently.

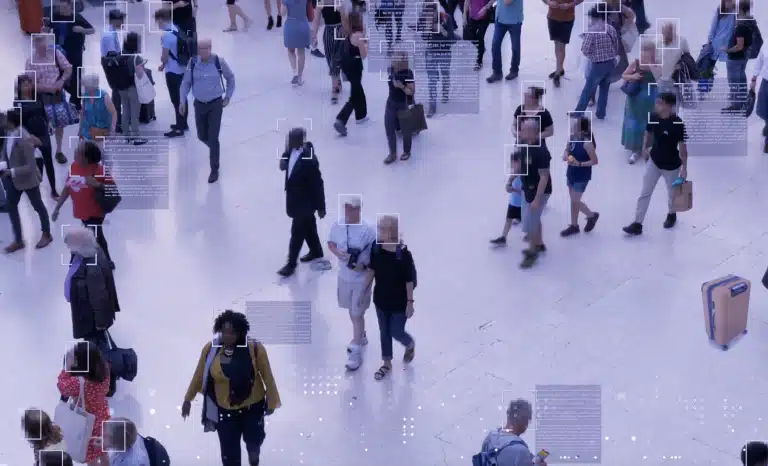

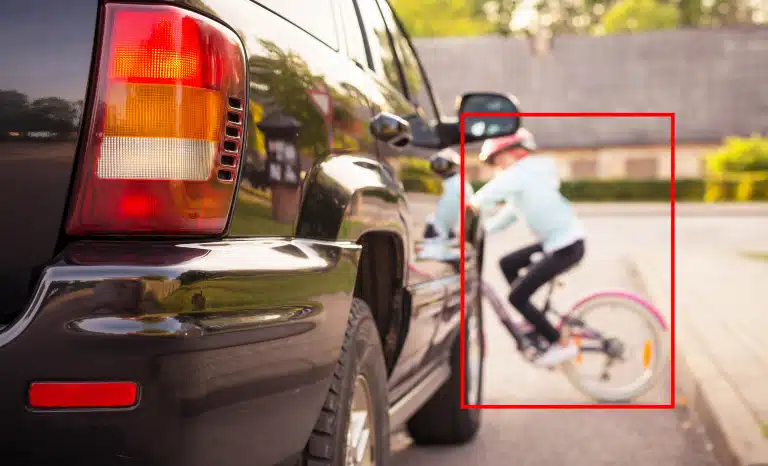

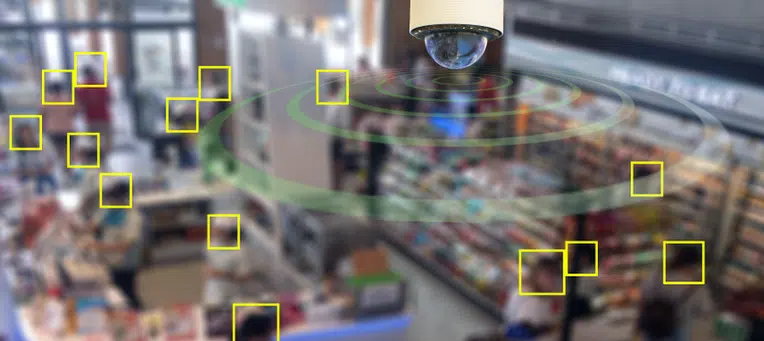

VMS deployments are used in different contexts. Most commonly, a VMS is used for security and surveillance, enhancing personal safety while maintaining individual privacy. Another typical use case is extracting business intelligence from user behavior analysis in order to improve customer experience in retail and other industries.

Traditionally, the analysis of live video streams was manual, relying on human operators to visually identify events in each feed. This method has many disadvantages: it does not scale, and it is prone to errors caused by operator fatigue, leading to false alarms, missed events, and wasted time and money.

Today, deep learning enables automation of the analytics task, allowing easier scaling and better overall performance. This in turn leads to a lower total cost of ownership (TCO).

According to recent market research, the video management system market is expected to reach $31B by 2027, growing at a compound annual growth rate (CAGR) of 23.1% over the next 5 years. The key drivers of this growth are increasing security concerns, together with the rapid adoption of IP cameras for surveillance and security applications.
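To make the growth figure concrete, the short sketch below backs out the starting market size implied by the forecast. It rests on assumptions that the forecast itself does not state: that the 5-year window ends in 2027 and that growth compounds once per year. The function names `implied_base` and `project` are illustrative only, and the resulting base figure is derived arithmetic, not a number taken from the research.

```python
def implied_base(final_value: float, cagr: float, years: int) -> float:
    """Starting value implied by a final value, a CAGR, and a number of years.

    Assumes annual compounding: final = base * (1 + cagr) ** years.
    """
    return final_value / (1.0 + cagr) ** years


def project(base: float, cagr: float, years: int) -> float:
    """Value after compounding `base` at `cagr` for `years` years."""
    return base * (1.0 + cagr) ** years


# Figures quoted in the text: $31B by 2027 at a 23.1% CAGR over 5 years.
# Assumption: the 5-year window ends in 2027.
base = implied_base(31.0, 0.231, 5)
print(f"Implied starting market size: ${base:.1f}B")

# Year-by-year path from the implied base up to the quoted $31B.
for year in range(6):
    print(f"year {year}: ${project(base, 0.231, year):.1f}B")
```

Under these assumptions, the forecast corresponds to a market of roughly $11B at the start of the period, growing to nearly three times that size over five years.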