Super Resolution: Using Deep Learning to Improve Image Quality and Resolution

On Thursday, May 14, 1948, David Ben-Gurion stood in front of a national assembly and the entire world and declared the formation of the sovereign State of Israel, of which he would be the first Prime Minister. This moment of proud celebration and tremendous hope was the culmination of centuries of troubled Jewish history and many years of work by political and social thinkers and activists. Last week, as Israel celebrated 73 years of independence with fireworks, music concerts and barbecues, Hailo was fortunate enough to contribute to the revival of this landmark moment. Collaborating with Jerusalem Cinematheque Israel Film Archive and Israel State Archives, who had compiled and digitized the historical footage, we were able to increase image resolution with the use of deep learning and color of the iconic footage of Ben-Gurion’s declaration speech.

To restore color, we used several colorization iterations with the Deep-Exemplar-Based Video Colorization model [1], that uses reference images and colorizes video footage with emphasis on consistency between consecutive frames. Later, we enhanced the resolution of the colorized footage with a Super Resolution (SR) neural network, running on the Hailo-8TM AI Processor chip.

What Is Super Resolution?

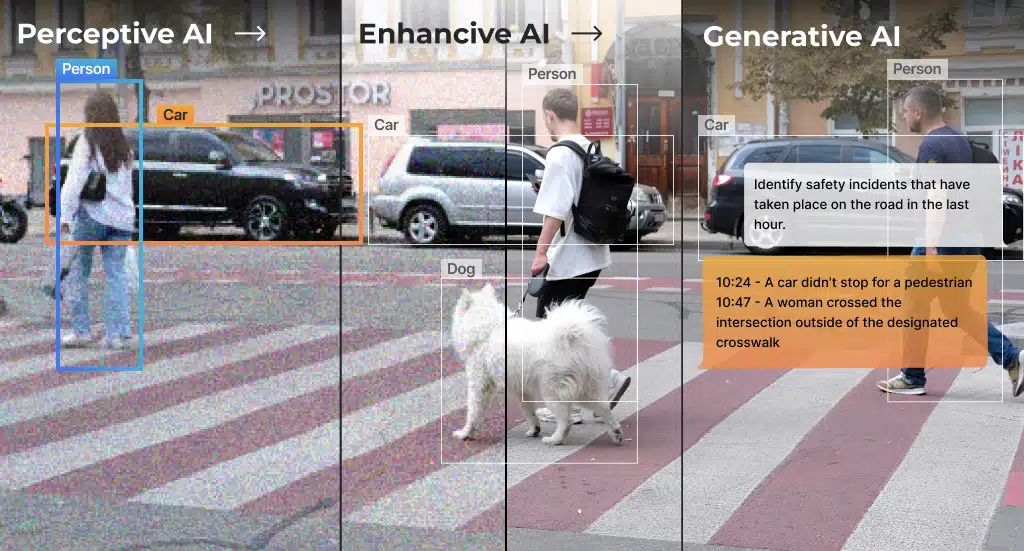

Super Resolution (SR) is the recovery of high-resolution details from a low-resolution input. This task is a part of an important segment of image processing that addresses image enhancement and also includes such tasks as denoising, dehazing, de-aliasing and colorization. In some cases, the image was originally taken at low resolution and the aim is to improve its quality. In others, a high-resolution image was downsampled (to save storage space or transmission bandwidth), and the aim is to retrieve its original quality for viewing.

Super Resolution Applications

Super Resolution is used to improve imagery quality and resolution with deep learning across many professional domains and verticals (such as medical imaging and life sciences, climatology and agriculture, to name a few [2, 3]), but it is also increasingly finding applications in consumer edge devices. It is already widely used to improve image quality produced by high-crop factor sensors in smartphones and high-end digital cameras, as well as to achieve image quality in state-of-the-art television devices, where it performs detail creation and AI-based noise reduction, among others.

But what if your television could take your old family movies and turn them into high-resolution, living color video that would be closer to what you have seen and remember but the camera of the time could not capture. It seems that digitalizing old photos and videos was a bit of trend in the stay-at-home order periods of the pandemic [4].

Empowered with AI processing at the edge and running AI Super Resolution neural models, your television can take a lower resolution input and turn it to the FHD or 4K image on your screen. This could save you and your provider precious bandwidth. Imagine that your favorite movie’s quality is not downgraded by the system because your home network bandwidth is reaching its limit or due to their regional servers being overloaded. Imagine that the outdated communications infrastructure in your area (which is not slated for an upgrade anytime soon…) is not limiting your ability to use that 4K or 8K television you bought. All because your television device comes with this amazing edge AI capability built into it.

This is not just a matter of customer experience, but a means of generating system-level efficiencies and cost-savings for communications providers and content platforms. These can transmit lower resolution inputs to homes, freeing up bandwidth across the network, while still proving high-quality, crisp images even on higher resolution screens. This is especially important in light of the growing demand for bandwidth, partially propelled by the demand for high-resolution video.

For enterprises, SR can be implemented in employee laptops and dedicated boardroom equipment to significantly enhance video conferencing quality. The notoriously finicky connection quality that cuts out audio or makes people freeze or lag on your screen can be abated, if not solved, by reducing bandwidth requirements and not overloading the office network.

Interestingly, some commercial and public safety applications apply SR selectively – not to the entire video frame but to certain parts or elements in the image. For example, it can enhance OCR (Optical Character Recognition) in poor quality images by detecting and enhancing only the text in them. As you can probably tell, edge applications for this are endless – from license plate recognition in car parks to high-quality document scanning [5, 6].

Super Resolution Methods

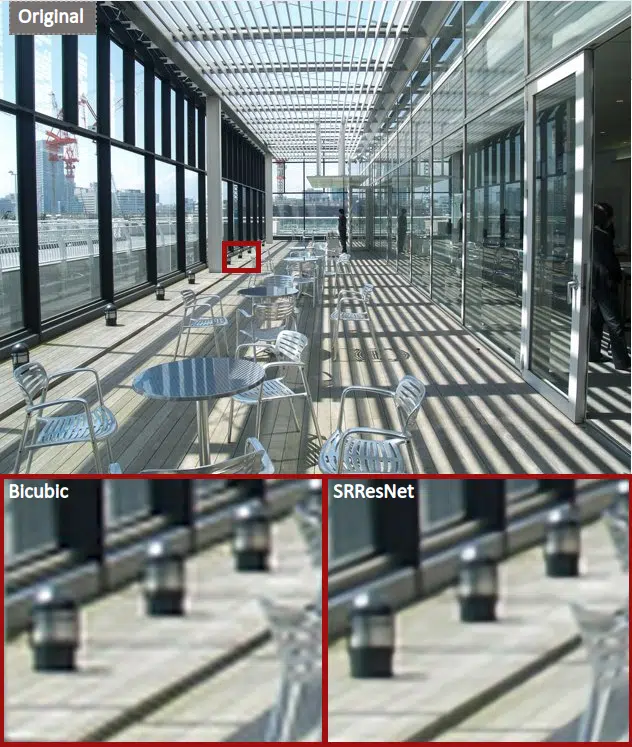

There are well-established interpolation-based algorithms for expanding the spatial dimension of images. While these algorithms are fast, their performance often results in noticeable artifacts and distortions. Deep learning provides a new approach to recovering information from images thanks to its ability to learn scene-dependent image reconstruction. For example, spatial aliasing (distortions due to insufficient sampling frequency) occurs when thin lines and edges are sampled onto the pixel-grid in the low resolution image. Non-trainable methods expand this visually unattractive distortion along with the image and may even amplify it, whereas deep-learning-based models learn to remove the distortion (see Fig. 2). Current deep SR models successfully upscale images up to X4 [7].

[tb-dynamic-container provider=“ source=“ field=“ removeDeadLinkTarget=“true“]

Fig. 2. Bicubic upscaling vs. the SR model used for the video, shown on an image from the Urban100 test set (upscale factor X4)

SR at the Edge

Unlike many computer vision AI tasks like object detection, deep SR models increase rather than reduce the spatial dimensions of the data as it is being processed. Both the required compute and activation memory scale linearly with the number of pixels being processed. This makes SR more compute- and memory-intensive compared to other tasks. For example, state-of-the-art SR networks can take 20 seconds on high-end GPUs to perform X4 up-scaling of a 2K image [9]. Edge SR applications (e.g., TV, gaming, conference) usually require real-time, or close to real-time operation on power-constrained devices. Although SR architectures are constantly improving in compute-efficiency they remain very computationally heavy.

The combination of real-time requirements and the inherent compute and memory-intense nature of SR models, creates a set of constraints that is not easy to solve. The following are a few key parameters to consider in that regard:

- Compute – the requirement to keep the spatial size large increases the number of compute operations per layer as the number of features increases. When deciding the compression ratio of images and the required end-result, it is useful to note that the speed of pre-upsampling networks depends mainly on the output size, while the speed of post-upsampling networks depends on the input size [8], and is therefore more practical in large models.

- Memory – SR models tend to use spatially large convolution kernels, which require more memory. Furthermore, all modern SR models use skip connections to increase accuracy. The longer the skip, the larger the buffering needed before merging data from the two paths can be completed. This challenge is amplified with long skip connections and dense architectures, where the required activation memory is large.

- Patches – apart from choosing an optimal SR architecture, a complementary approach to reducing the memory footprint is to process the low resolution image in patches and stitch the SR predictions back up. This is possible since unlike other vision tasks (e.g., detection), SR does not require large visual context.

Although challenging, running AI Super Resolution on the edge is considered feasible nowadays. Recent developments in AI accelerators with high compute capabilities and power efficiency make it possible and make available new products with AI capabilities.

In Conclusion

Deep learning is allowing us to boost image recovery and resolution enhancement in a way that traditional vision processing techniques were unable to do. Though generally more compute-intense than other AI-based vision tasks due to the large spatial dimension of the data, SR can run at the edge and do so in real time. All it needs is a high-compute AI processor.

SR at the edge can be a great solution for private, public and commercial image and video reconstruction needs. As we have seen above, it can now bring history just a little closer to us. Consumers and businesses, not to mention historical archives like the Israel Film Archive, are far from being the only beneficiaries of this technology.

And above all – who would not want to look sharp?

[tb-dynamic-container provider=“ source=“ field=“ removeDeadLinkTarget=“true“]

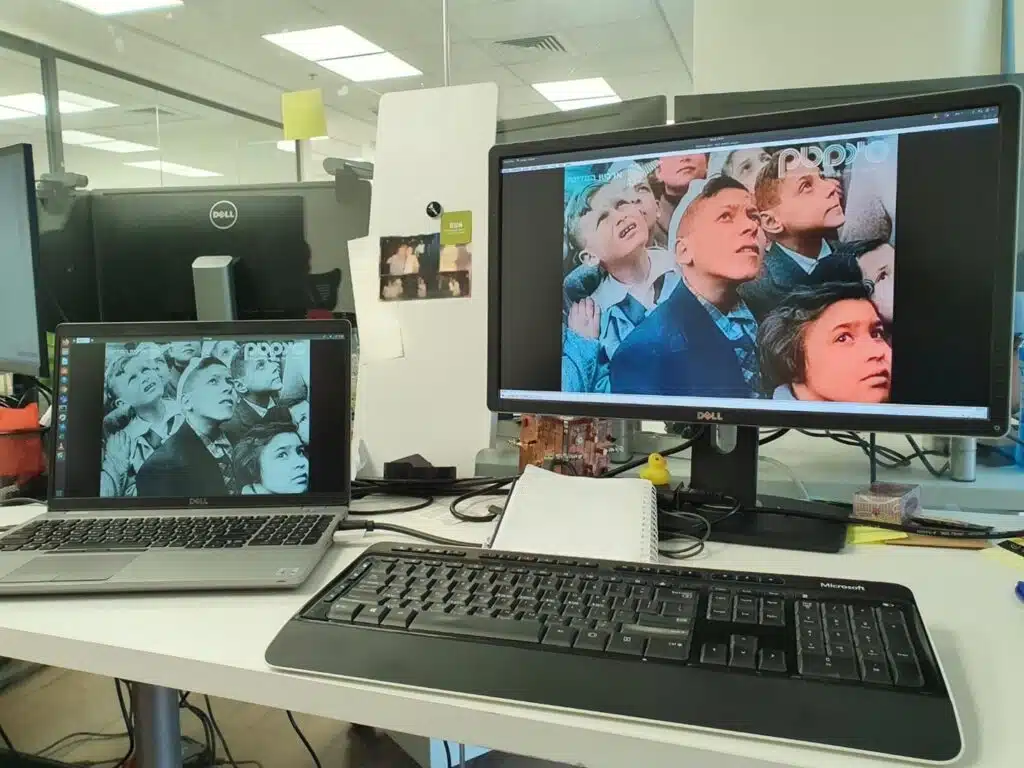

Fig. 3. Working on the reconstruction at the Hailo offices

Sources & Comments:

- Zhang et al. Deep Exemplar-based Video Colorization. arXiv. 2019

- Strengel et al. Adversarial super-resolution of climatological wind and solar data. PNAS July 2020

- Oz et al. Rapid super resolution for infrared imagery. Optic Express. Vol. 28 2020

- EverPresent. Interest In Digitizing Soars During COVID-19 Stay-at-Home Orders. June 2020

- Dong et al. Boosting Optical Character Recognition: A Super-Resolution Approach. arXiv. 2015

- Robert et al. Does Super-Resolution Improve OCR Performance in the Real World? A Case Study on Images of Receipts. ICIP. Oct 2020

- Anwar et al. A Deep Journey into Super-resolution: A Survey. ACM Computing Surveys 2020.

- Dong et al. Accelerating the Super-Resolution Convolutional Neural Network. ECCV 2016

- Wang et al. Deep Learning for Image Super-resolution: A Survey. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2020

Read mode about what we do

Hailo offers breakthrough AI accelerators and Vision processors